Abstract

We consider elliptic random walks in i.i.d. random environments on \(\mathbb {Z}^d\). The main goal of this paper is to study under which ellipticity conditions local trapping occurs. Our main result is to exhibit an ellipticity criterion for ballistic behavior which extends previously known results. We also show that if the annealed expected exit time of a unit hypercube is infinite then the walk has zero asymptotic velocity.

1 Introduction

In this paper, we consider random walks in i.i.d. random environments on \(\mathbb {Z}^d\) for \(d\ge 2\), in the specific case where the walk is directionally transient. It is expected that if the transition probabilities are uniformly elliptic then the walk is ballistic (see [20] and [23]). This conjecture has been proved under stronger transience assumptions known as Sznitman’s conditions (T), \((T')\) or \((T)_{\gamma }\) (see [18] and [19]) and more recently condition \((P)_M\) (see [1]). All those transience conditions are believed to be equivalent under uniform ellipticity (see [20] and [23]). Proving this equivalence is one of the major open problems in random walk in random environments (RWRE). We will give more details on these results in Sect. 1.2.

If we remove the uniform ellipticity assumption, the walk may become sub-ballistic even in the elliptic setting (see [12–14] and [3]). This naturally raises the following question: which ellipticity conditions characterize a ballistic behavior?

Recently, new ellipticity criteria for ballistic behavior have been proved (in [6] and [4]). In this paper, we find a criterion (see Theorem 3.2) for positive speed which extends previously known results. We believe that this criterion is close to optimal and we use it to exhibit new examples of ballistic random walks (see Proposition 4.3). We also prove, under stronger assumptions, annealed and quenched central limit theorems (see Theorem 3.3). Furthermore, we show that if the annealed expected exit time of a unit hypercube is infinite then the walk has zero asymptotic velocity (see Theorem 3.1). We think that this criterion actually characterizes the zero-speed regime.

1.1 Definition of the model

Let us now define the model more precisely. Call U the set of 2d canonical unit vectors and let

Fix some unit vector \(\ell \in S^{d-1}\) and let us enumerate U in the following manner: denote \(\nu =\{e_1,\ldots ,e_{d}\}\) an orthonormal basis of \(\mathbb {Z}^d\) such that \(e_1\cdot \ell \ge e_2 \cdot \ell \ge \cdots \ge e_d\cdot \ell \ge 0\) and set \(e_{i+d}=-e_i\) for \(i\in [1,d]\). In particular, Pythagoras’s theorem implies that

An environment \(\omega \) is an element of \(\Omega ={\mathcal {P}}^{\mathbb {Z}^d}\), which we view as a collection of transition probabilities \(p^{\omega }(x,\cdot )=(p^{\omega }(x,e))_{e\in U}\) assigned to every vertex \(x\in \mathbb {Z}^d\).

The random walk in the environment \(\omega \) started from x is the Markov chain \((X_n)_{n \ge 0}\) in \(\mathbb {Z}^d\) with the law \(P_x^{\omega }\) defined by \(P_x^{\omega }[X_0=x]=1\) and

$$\begin{aligned} P_x^{\omega }[X_{n+1}=y+e\mid X_n=y]=p^{\omega }(y,e) \end{aligned}$$

for any \(y\in \mathbb {Z}^d\), \(e\in U\) and \(n\ge 0\). The law \(P_x^{\omega }\) is commonly referred to as the quenched law.

Let us consider \(\mathbf{P}\) a probability measure on the environment space \(\Omega \) which is a product measure, meaning that all random variables \(p^{\omega }(x,\cdot )\) for \(x\in \mathbb {Z}^d\) are i.i.d. under \(\mathbf{P}\). This allows us to define the averaged or annealed law of the RWRE started at x by defining \(\mathbb {P}_x=\int P_x^{\omega }d\mathbf{P}\). In the case where \(x=0\), we will abbreviate \(P_x^{\omega }\) and \(\mathbb {P}_x\) by \(P^{\omega }\) and \(\mathbb {P}\) respectively.
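To make the model concrete, here is a minimal simulation sketch of a walk sampled under the annealed law. It assumes, purely for illustration, that the site laws \(p^{\omega }(x,\cdot )\) are i.i.d. Dirichlet vectors. The function names, the Dirichlet choice and the lazy sampling of the environment are assumptions of this sketch, not part of the paper.

```python
import random

def unit_vectors(d):
    """Return U = (e_1, ..., e_d, -e_1, ..., -e_d) as integer tuples."""
    basis = [tuple(1 if j == i else 0 for j in range(d)) for i in range(d)]
    return basis + [tuple(-c for c in e) for e in basis]

def sample_site_law(rng, weights):
    """Draw one Dirichlet(weights) vector: the law p(x, .) at a single site.

    The Dirichlet choice is an illustrative assumption; any elliptic
    i.i.d. law on the simplex could be plugged in here instead.
    """
    gammas = [rng.gammavariate(w, 1.0) for w in weights]
    total = sum(gammas)
    return [g / total for g in gammas]

def sample_walk(n_steps, weights, seed=0):
    """Sample X_0, ..., X_n under the annealed law started at 0.

    The environment is i.i.d., so it can be sampled lazily: the law at a
    site is drawn the first time the walk visits it and then frozen.
    """
    d = len(weights) // 2
    U = unit_vectors(d)
    rng = random.Random(seed)
    env = {}                      # site -> transition probabilities over U
    x = (0,) * d
    path = [x]
    for _ in range(n_steps):
        if x not in env:
            env[x] = sample_site_law(rng, weights)
        e = rng.choices(U, weights=env[x])[0]
        x = tuple(a + b for a, b in zip(x, e))
        path.append(x)
    return path
```

For instance, `sample_walk(1000, [2.0, 1.0, 1.0, 1.0])` draws a walk on \(\mathbb {Z}^2\) whose environment favors \(e_1\) on average; the weights are listed in the order \(e_1,e_2,-e_1,-e_2\).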

We say that the environment is elliptic if it verifies the following hypothesis (E)

and we call an environment uniformly elliptic if there exists \(\kappa >0\) such that

a condition commonly denoted (UE).

Given \(\ell \in S^{d-1}\), we say that a RWRE is transient in the direction \(\ell \) if \(\mathbb {P}[A_{\ell }]=1\) where \(A_{\ell }=\{{\lim _{n \rightarrow \infty }} X_n \cdot \ell =\infty \}\).

We say that a RWRE is ballistic in the direction \(\ell \) if

1.2 Former results and open questions

The case of RWRE on \(\mathbb {Z}\) is well understood. In [16], the author identifies conditions that characterize recurrence versus (directional) transience, as well as zero-speed versus positive speed regimes. In particular, a regime of directional transience with zero speed is exhibited. The existence of this regime is due to the existence of traps slowing the walk down (see [9] for details on trapping in RWREs on \(\mathbb {Z}\)). These traps can be formed even when transition probabilities are uniformly elliptic.
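As an illustration of the one-dimensional picture, the following sketch evaluates the classical criterion in terms of \(\rho =(1-p)/p\), where p is the probability of stepping to the right at a site: the walk is transient to the right when \(\mathbf{E}[\log \rho ]<0\), and its speed is \((1-\mathbf{E}[\rho ])/(1+\mathbf{E}[\rho ])\) when \(\mathbf{E}[\rho ]<1\) and zero when both \(\mathbf{E}[\rho ]\ge 1\) and \(\mathbf{E}[\rho ^{-1}]\ge 1\). The finitely supported environment and the function name are assumptions made for this example.

```python
import math

def classify_1d(env):
    """Classify a one-dimensional RWRE with a finitely supported site law.

    env is a list of (p, weight) pairs: p is the probability of stepping
    right at a site and weight is the probability that a site has this p.
    Returns (direction, speed).
    """
    rhos = [((1.0 - p) / p, w) for p, w in env]
    mean_log_rho = sum(w * math.log(r) for r, w in rhos)
    mean_rho = sum(w * r for r, w in rhos)
    mean_inv_rho = sum(w / r for r, w in rhos)

    # Exact equality with 0 is fragile in floating point; this branch is
    # only meant for symmetric toy inputs.
    if mean_log_rho < 0:
        direction = "right"
    elif mean_log_rho > 0:
        direction = "left"
    else:
        direction = "recurrent"

    if mean_rho < 1:
        speed = (1.0 - mean_rho) / (1.0 + mean_rho)
    elif mean_inv_rho < 1:
        speed = -(1.0 - mean_inv_rho) / (1.0 + mean_inv_rho)
    else:
        speed = 0.0
    return direction, speed
```

With `env = [(0.8, 0.7), (0.2, 0.3)]` every site is uniformly elliptic, the walk is transient to the right, and yet the speed is zero, which is the trapping regime described above.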

In \(\mathbb {Z}^d\), for \(d\ge 2\), it is more difficult to create traps. Actually, one of the main open problems concerning random walks in random environments is the following conjecture (see [20] and [23]).

Conjecture 1.1

For any \(\ell \in S^{d-1}\), we consider a random walk in a uniformly elliptic i.i.d. environment in \(\mathbb {Z}^d\) for \(d\ge 2\). If it is transient in the direction \(\ell \), then it is ballistic in the direction \(\ell \).

Let us discuss this conjecture on a very basic level. We can notice that there are two main hypotheses in this conjecture.

(1) The directional transience, which is a “global” hypothesis on the transition probabilities. It gives information on how the walk explores the space.

(2) The uniform ellipticity, which is a “local” property of the transition probabilities. It provides us with a sufficient condition that prevents the walk from getting trapped in a small part of the environment.

The main difficulty in proving Conjecture 1.1 is to understand how the walk explores the space. Roughly speaking, we need to show that directional transience implies that the walk goes relatively directly in the direction \(\ell \), i.e. without zig-zagging on large scales. This, coupled with the fact that the walk cannot be trapped locally (because of uniform ellipticity) should imply that the walk is ballistic.

Surprisingly, it turns out to be technically difficult to show that a directionally transient walk goes fairly directly in the direction \(\ell \). Conjecture 1.1 has only been proved under stronger transience assumptions, under which we are given quantitative estimates on the exit probabilities of large slabs. Let us now introduce one of these conditions, known as Sznitman’s \((T)_{\gamma }^{\ell }\) (see [18]).

For any set of vertices \(A\subset \mathbb {Z}^d\), we introduce the exit time of the set A as

For any \(\ell \in S^{d-1}\) and for any \(b>0\), we define the slab

Given \(\ell \in S^{d-1}\), \(\gamma \in (0,1)\) and \(b>0\), we say that the walk verifies the condition \((T)_{\gamma }^{\ell }\) if there exists a neighborhood \(V\subset S^{d-1}\) of \(\ell \) such that for all \(\ell '\in V\), we have

Loosely speaking, this means that the probability of exiting a large slab against the asymptotic direction of the walk decays like a stretched exponential of exponent \(\gamma \) (in the size of the slab).

Condition \((T)^{\ell }\) corresponds to condition \((T)_{\gamma }^{\ell }\) in the case where \(\gamma =1\). Condition \((T')^{\ell }\) is defined as the fulfillment of condition \((T)_{\gamma }^{\ell }\) for all \(\gamma \in (0,1)\). It was proved in [19] that a random walk in i.i.d. uniformly elliptic environment satisfying \((T')^{\ell }\) is ballistic in the direction \(\ell \). It was also shown (see [19]) that if \(\gamma \in (1/2,1)\) then \((T)_{\gamma }^{\ell }\) implies \((T')\).

Subsequent works ([7, 8] and [1]) have weakened the transience conditions that need to be verified in order to prove ballistic behavior under uniform ellipticity. At this point in time, the state of the art is a result from [1] based on the polynomial condition, typically denoted \((P)_M\).

To define this condition, let us consider for each L, \(L'\), \(\widetilde{L}>0\) and \(\ell \in S^{d-1}\) the box

where R is the rotation of \(\mathbb {R}^d\) with center 0 which sends \(e_1\) onto \(\ell \). For \(M\ge 1\) and \(\ell \in S^{d-1}\), we say that the walk verifies condition \((P)_M^{\ell }\) if for all \(L\ge \frac{2}{3} 3^{29d}\), there exist \(L'\le \frac{5}{4} L\) and \(\widetilde{L}\le 72 L^3\) such that

This condition can be verified in a finite box, which is why it is referred to as an effective criterion. It should be noted that this condition obviously follows from tail estimates on the exit probabilities appearing in (1.4).

The main result of [1] is that, for a RWRE in an i.i.d. environment with uniformly elliptic transition probabilities, \((P)_M\) for \(M\ge 15d+5\) implies \((T')\). In particular, this implies ballisticity.

As we can see there has been a great deal of effort to understand under which transience assumptions we are able to prove ballistic behavior. But it is only recently that there have been developments on RWREs that are not uniformly elliptic.

It is known ([12–14] and [3]) that, in dimension \(d\ge 2\), there exist elliptic random walks which are directionally transient but are not ballistic. More recently it has been shown, in [6], that under certain ellipticity conditions the polynomial condition \((P)_M\) is equivalent to \((T')\). To be more specific, we say that a RWRE in an elliptic i.i.d. environment verifies condition \((E)_0\) if

One of the main results (Theorem 1.1) of [6] is that if a random walk in an elliptic i.i.d. environment verifies \((P)_M^{\ell }\) for some \(M\ge 15d+5\) and \((E)_0\) then this RWRE verifies \((T')^{\ell }\). We give an exact statement of this result in Theorem 5.1. Furthermore, the authors of [6] introduce sufficient ellipticity conditions for ballistic behavior under condition \((P)_M\). Later on, the ellipticity conditions for ballistic behavior were improved in [4], providing an optimal criterion for the case of Dirichlet environments. See Sect. 4.2 for details on this ellipticity condition.

In order to understand which ellipticity criteria characterize ballistic behavior we need to understand exactly how local traps are created. This is the main focus of this paper. After investigating how traps are created, it is our belief that a walk is ballistic if, and only if, the expected annealed exit time of a unit hypercube is finite. In order to back up our belief we prove the following:

(1) if the annealed expected exit time of a unit hypercube is infinite then the walk has zero asymptotic velocity (see Theorem 3.1),

(2) we give a criterion for positive speed (see Theorem 3.2). In order to verify this criterion it is sufficient to prove that we can exit some particular unit hypercube containing the origin. As we explain in Sect. 4.1, we believe that this criterion essentially means that the exit time of a unit hypercube has finite annealed expectation.

For the aforementioned reasons, we believe that our positive speed criterion is near optimal.

One of the contributions of this work is to bring forth the idea that the smallest possible traps are contained in unit hypercubes. This is striking since, in the reversible context, it is known (see [10]) that if a walk is sub-ballistic then it can get trapped on just one edge. In Proposition 4.4, we provide an example of a sub-ballistic RWRE that cannot stay long on only one edge.

1.3 Plan of the article

Let us present how this paper is structured.

In the next section (Sect. 2), we will start by introducing some basic notations as well as facts about regeneration times. This is a central tool for determining whether or not a walk is ballistic.

After that, in Sect. 3, we will present our zero speed criterion (see Theorem 3.1) and our positive speed criterion (see Theorem 3.2). We also state annealed and quenched central limit theorems (see Theorem 3.3).

Before moving on to proofs, we discuss the intuition behind our main results in Sect. 4. In this section we try to justify why our criterion is close to optimal. We also provide a new example of ballistic walks (see Proposition 4.3) and a zero-speed random walk that can never stay long on only one edge (see Proposition 4.4).

The proof for the sufficient condition for positive speed is presented in Sect. 5. This section is divided into three parts. The first one is Sect. 5.1 in which we prove the key estimate Proposition 5.2: under our ballisticity criterion the quenched probability of reaching a point far away is lower bounded. The second section is Sect. 5.2, in which we recall some classical results from RWREs. Finally the third part is Sect. 5.3, in which we finish the proof by providing an upper bound on the tail of the first regeneration time.

Finally we present the proof of the sufficient condition for zero speed in Sect. 6. This section is essentially independent of the rest of the paper.

Before moving on to the rest of the paper, let us specify that in the course of our proofs, c and C will typically denote constants in \((0,\infty )\) whose value may change from line to line.

2 Basic notations and regeneration times

In this section, we introduce some basic notations and we summarize the facts we need about regeneration times.

Let us define the adjacency \(\sim \) such that, for \(x,y\in {\mathbb {Z}}^d\), we have \(x\sim y\) if and only if \(\Vert x-y\Vert _1=1\). Given a set V of vertices of \(\mathbb {Z}^d\), we denote by \(\left| V\right| \) its cardinality, by \(E(V)=\{ [x,y] : x\sim y,\ x,y\in V\}\) its edges and

its border. For \(A\subset \mathbb {Z}^d\) and \(x\in A\), we denote

the neighbors of x which are outside of A.

For any \(r>0\), we denote

For any set of vertices \(A\subset \mathbb {Z}^d\), we introduce the hitting times

We will use a slight abuse of notation and write x instead of \(\{x\}\) when the set is a point x.

2.1 Regeneration times

We set \(a\in (2\sqrt{d}, 10 \sqrt{d})\) and define

as well as the stopping times \(S_k\), \(k\ge 0\), \(R_k\), \(k\ge 1\), and the levels \(M_k\), \(k\ge 0\):

where \(\theta _{\cdot }\) is the shift operator.

Finally, we define the basic regeneration time

Remark 2.1

The choice of \(a\in (2\sqrt{d},10 \sqrt{d})\) is only necessary to prove the non-degeneracy of the covariance matrix in Theorem 2.2.

It follows from directional transience (see for example [21]) that

this allows us to define

Then let us define the sequence \(\tau _0=0<\tau _1<\tau _2< \cdots <\tau _k< \cdots \) (these inequalities hold except if the regeneration times are infinite), via the following procedure:

That is, the \((k+1)\)-th regeneration time is the k-th such time after the first one.

The first main result is that the regeneration structure exists and is finite (see for example [21]).

Lemma 2.1

Let us consider a RWRE in an elliptic i.i.d. environment. Fix \(\ell \in S^{d-1}\) and assume that the random walk is transient in the direction \(\ell \). For any \(k\ge 1\), we have \(\mathbf{P}\)-a.s., for all \(x\in \mathbb {Z}^d\),

We now state the fundamental renewal property (see for example [21]).

Theorem 2.1

Let us consider a RWRE in an elliptic i.i.d. environment. Fix \(\ell \in S^{d-1}\) and assume that the random walk is transient in the direction \(\ell \).

Under \(\mathbb {P}\), the processes \((X_{\tau _1\wedge \cdot }), (X_{(\tau _1+\cdot )\wedge \tau _2}-X_{\tau _1}), \ldots , (X_{(\tau _k+\cdot )\wedge \tau _{k+1}}-X_{\tau _k}),\ldots \) are independent and, except for the first one, are distributed as \((X_{\tau _1\wedge \cdot } )\) under \(\mathbb {P}[~\cdot \mid 0-\text {regen}]\).

The results above imply the following theorem (see [17, 21] and [22]).

Theorem 2.2

Let us consider a RWRE in an elliptic i.i.d. environment. Fix \(\ell \in S^{d-1}\) and assume that the random walk is transient in the direction \(\ell \). Then there exists a limiting deterministic velocity

where

even in the case where \(\mathbb {E}[\tau _1\mid 0-\text {regen}]=\infty \). In particular one can obtain that

Furthermore, if \(\mathbb {E}[\tau _1^2\mid 0-\text {regen}]<\infty \), then

converges in law under \(\mathbb {P}\) to a Brownian motion with a non-degenerate covariance matrix.

3 Results

3.1 A criterion for zero-speed

We call unit hypercube located at x the set

For simplicity we use \({\mathfrak {H}}_0={\mathfrak {H}}\). Let us denote \((H)_{\alpha }\) the following hypothesis

In the next theorem we exhibit a criterion for zero speed. We believe this criterion to be sharp.

Theorem 3.1

Let us consider a RWRE in an elliptic i.i.d. environment. Fix \(\ell \in S^{d-1}\) and assume that the random walk is transient in the direction \(\ell \).

If \((H)_1\) is verified, then the walk has zero speed, i.e. \(v=\vec {0}\).
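To make the quantity behind this criterion concrete, the sketch below computes the quenched expected exit time of a unit hypercube from each of its corners by solving the linear system \((I-P)t=\mathbf {1}\), where P is the transition matrix restricted to the hypercube. It assumes that the unit hypercube at the origin is \(\{0,1\}^d\); this convention, the helper names and the small Gaussian elimination routine are choices made for this example.

```python
from itertools import product

def hypercube(d):
    """Corners of the unit hypercube at the origin, assumed to be {0,1}^d."""
    return list(product((0, 1), repeat=d))

def solve(A, b):
    """Solve A t = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= factor * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]

def expected_exit_times(p, d):
    """Quenched expected exit time of {0,1}^d from each corner.

    p maps each corner x to a dict {e: p(x, e)} over the 2d unit vectors.
    The exit time t(x) satisfies t(x) = 1 + sum_{y in cube} p(x, y) t(y).
    """
    H = hypercube(d)
    index = {x: i for i, x in enumerate(H)}
    n = len(H)
    A = [[0.0] * n for _ in range(n)]
    for x in H:
        A[index[x]][index[x]] += 1.0
        for e, prob in p[x].items():
            y = tuple(a + b for a, b in zip(x, e))
            if y in index:          # steps that stay inside the hypercube
                A[index[x]][index[y]] -= prob
    return dict(zip(H, solve(A, [1.0] * n)))
```

For the simple random walk on \(\mathbb {Z}^2\), each corner leaves the hypercube with probability 1/2 at every step, so the expected exit time is 2 from every corner. Averaging such quenched values over many sampled environments gives a rough numerical feel for whether the annealed expectation is finite, although a simulation can of course not prove divergence.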

In the same way that we prove Theorem 3.1 (see (6.3)), we can obtain lower bound estimates on regeneration times (see Sect. 2.1 for a precise definition of regeneration times).

Remark 3.1

Let us consider a RWRE in an elliptic i.i.d. environment. Fix \(\ell \in S^{d-1}\) and assume that the random walk is transient in the direction \(\ell \). Furthermore, we assume that there exists \(\alpha >0\) such that we have \((H)_{\alpha }\). Then

We believe that this last display is equivalent to \((H)_1\) when \(\alpha =1\).

3.2 A positive speed criterion

Let \({\mathcal {C}}\) be a unit hypercube of \(\mathbb {Z}^d\) and \(y\in {\mathcal {C}}\). We denote

the highest probability leading out of \({\mathcal {C}}\) from y.
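As a small illustration of this quantity, the following sketch reads the description literally: among the steps from y that land outside the hypercube, it returns the largest transition probability. The function and argument names are hypothetical.

```python
def add(x, e):
    """Coordinate-wise sum of a site and a step."""
    return tuple(a + b for a, b in zip(x, e))

def max_exit_probability(cube, y, law_at_y):
    """Largest one-step probability of leaving `cube` from the corner y.

    law_at_y is a dict {e: p(y, e)} over the 2d unit vectors. A corner of
    a unit hypercube always has d outward edges, so the list is non-empty.
    """
    cube = set(cube)
    outward = [prob for e, prob in law_at_y.items() if add(y, e) not in cube]
    return max(outward)
```

For example, in the square \(\{0,1\}^2\) with `law_at_y = {(1, 0): 0.5, (0, 1): 0.3, (-1, 0): 0.15, (0, -1): 0.05}` at the corner (0, 0), the two outward steps are \(-e_1\) and \(-e_2\), so the function returns 0.15.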

Let \({\mathcal {C}}\) be a unit hypercube of \(\mathbb {Z}^d\) and \(x\in {\mathcal {C}}\). We denote for any \(y\in {\mathcal {C}}\)

the probability starting from x to exit \({\mathcal {C}}\) via a neighbor of y before returning to x.

In order to state our main result (Theorem 3.2), which is our criterion for positive speed, we need to introduce the concept of Markovian hypercube which we define in the next section.

3.2.1 Markovian hypercube

We denote \(\overline{{\mathfrak {H}}}_0=\{{\mathfrak {H}}_x, \text { where }x\in \mathbb {Z}^d\text { and } 0\in {\mathfrak {H}}_x\}\), the set of unit hypercubes containing 0.

Let us introduce the notion of hypercube discovered in a Markovian fashion. It is a function h from \(\Omega \) into \(\overline{{\mathfrak {H}}}_0\) constructed in a particular manner that we are going to describe below.

We will start by introducing some notations before explaining intuitively the construction of a Markovian hypercube. We construct recursively functions \(f_0,\ldots , f_{2^d-1}\) from \(\Omega \) into \(\mathbb {Z}^d\), such that

(1) \(f_0(\omega )=0\) \(\mathbf{P}\)-a.s.,

(2) for \(i\ge 0\), the function \(f_{i+1}\) is measurable with respect to \(\{p^{\omega }(x,\cdot ), x\in \{f_0(\omega ),\ldots , f_i(\omega )\}\}\),

(3) for any \(i\ge 0\), \(f_{i+1}(\omega ) \in \partial \{f_0(\omega ),\ldots , f_i(\omega )\}\),

(4) for any \(i\ge 0\), \(\mathbf{P}\)-a.s. there exists \(H(\omega ) \in \overline{{\mathfrak {H}}}_0\) such that we have \(\{f_0(\omega ),\ldots , f_i(\omega )\} \subset H(\omega )\).

In words, this means we start from 0 then, using the information given by the transition probabilities at 0, we choose to add a site called \(f_1(\omega )\). Then we use the information given by the transition probabilities at 0 and \(f_1(\omega )\) to add a new adjacent site called \(f_2(\omega )\). We continue this procedure recursively, with the only restriction that we can never add a point \(f_{i+1}(\omega )\) such that the points \(\{f_0(\omega ),\ldots ,f_{i+1}(\omega )\}\) would not be included in a unit hypercube.
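The recursive construction just described can be sketched as follows. The selection rule used here (add the admissible neighbor reached with the largest transition probability from an already discovered site) is only one hypothetical choice among many; the constraints of the definition are that each new site is chosen using only the transition probabilities at discovered sites, is adjacent to them, and keeps the discovered set inside a unit hypercube.

```python
def add(x, e):
    """Coordinate-wise sum of a site and a step."""
    return tuple(a + b for a, b in zip(x, e))

def fits_in_unit_hypercube(points):
    """A set lies in some unit hypercube iff every coordinate has range <= 1."""
    return all(max(c) - min(c) <= 1 for c in zip(*points))

def discover_hypercube(p, d):
    """Return sites f_0, ..., f_{2^d - 1} discovered in a Markovian fashion.

    p(x) returns the dict {e: p(x, e)} at site x. It is only ever called
    at sites that have already been discovered, which is the measurability
    requirement of the construction.
    """
    found = [(0,) * d]
    while len(found) < 2 ** d:
        best = None                          # (probability, candidate site)
        for x in found:
            for e, prob in p(x).items():
                y = add(x, e)
                if y in found or not fits_in_unit_hypercube(found + [y]):
                    continue
                if best is None or prob > best[0]:
                    best = (prob, y)
        # An admissible neighbor always exists while fewer than 2^d sites
        # are discovered, so best is never None here.
        found.append(best[1])
    return found
```

With the deterministic toy environment `p = lambda x: {(1, 0): 0.4, (0, 1): 0.3, (-1, 0): 0.2, (0, -1): 0.1}`, the call `discover_hypercube(p, 2)` starts at the origin, adds (1, 0), then (0, 1), then (1, 1).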

This procedure yields a hypercube \(\{f_0(\omega ),\ldots ,f_{2^d-1}(\omega )\}\) containing 0. A hypercube constructed in this way is said to be discovered in a Markovian fashion. Given such a hypercube \(h(\omega )\), we denote \(x_0(\omega )\) the only point in \(\mathbb {Z}^d\) such that

A pair of functions \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\) is called a marked Markovian hypercube if

(1) \(h(\omega )\) is a hypercube discovered in a Markovian fashion,

(2) for any \(x\in {\mathfrak {H}}\), the function \(\alpha _x(\omega )\) goes from \(\Omega \) into \(\mathbb {R}^+\),

(3) for any \(x\in {\mathfrak {H}}\), the function \(\alpha _x(\omega )\) is measurable with respect to \(\{p^{\omega }(y,\cdot ), y\in h(\omega )\}\).

In a marked Markovian hypercube, we simply add, using the information given by the transition probabilities in \(h(\omega )\), certain marks in \(\mathbb {R}^+\) to every corner of the hypercube \(h(\omega )\). We can see this by associating the mark \(\alpha _x(\omega )\) to the corner \(x_0(\omega )+x\in h(\omega )\) for every \(x\in {\mathfrak {H}}\).

Remark 3.2

It can easily be seen from the definition that a marked Markovian hypercube \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\) is measurable with respect to \(\{p^{\omega }(y,\cdot ), y\in h(\omega )\}\). This means that a marked Markovian hypercube can be determined independently of the information outside of that hypercube.

3.2.2 Criterion \((K)_\alpha \)

Recalling the definitions at (3.3) and (3.4),

Definition 3.1

We denote \((K)_{\alpha }\) the following hypothesis:

(1) there exists \(\gamma _x\in \mathbb {R}^+\), for every \(x\in {\mathfrak {H}}\), such that we have

$$\begin{aligned} \mathbf{E}\Bigl [\Bigl (Q_x^{{\mathfrak {H}}}\Bigr )^{-\gamma _x}\Bigr ]<\infty \qquad \text {for all } x\in {\mathfrak {H}}, \end{aligned}$$

(2) there exists a marked Markovian hypercube \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\) such that

$$\begin{aligned} \mathbf{E}\left[ \prod _{x\in {\mathfrak {H}}} \Bigl (\widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\Bigr )^{-\alpha _x(\omega )}\right] <\infty , \end{aligned}$$

(3) there exists \(\varepsilon >0\) such that

$$\begin{aligned} \sum _{x\in {\mathfrak {H}}} (\gamma _x \wedge \alpha _x(\omega )) \ge \alpha +\varepsilon \qquad \mathbf{P}\text {-a.s.} \end{aligned}$$

This condition may seem very complicated. This is why, in Sect. 4, we shall dedicate a few pages to explaining the meaning of this condition and how to apply it. In particular, we will justify why the conditions involving the exponents \(\gamma _x\) are verified in the positive speed regime under some regularity properties of the tails at 0 of \(Q_x^{{\mathfrak {H}}}\) for \(x\in {\mathfrak {H}}\) (see Lemma 4.1 and below).

3.2.3 Criterion for positive speed

The next result proves that, under sufficiently strong transience conditions, the condition \((K)_1\) (see Definition 3.1) and \((E)_0\) (defined at (1.6)) imply positive speed.

Theorem 3.2

Let us consider a RWRE in an elliptic i.i.d. environment that verifies conditions \((E)_0\) and \((P)_M^{\ell }\) for some \(M\ge 15d+5\) and \(\ell \in S^{d-1}\). If furthermore condition \((K)_1\) is verified, then the walk is ballistic in the direction \(\ell \), i.e.

In the course of the proof of Theorem 3.2, we obtain tail estimates on \(\tau _1\), see Proposition 5.1.

Although the criterion \((K)_1\) is very flexible, it can be a bit cumbersome to verify it. However, in concrete examples (for example, see Proposition 4.3, which exhibits new examples of ballistic walks), we can use a much simpler criterion \((\widetilde{K})_1\) defined by:

Remark 3.3

Condition \((\widetilde{K})_1\) is easily seen to imply \((K)_1\), by choosing the \(\gamma \)’s and the Markovian hypercube conveniently. Indeed, assume \((\widetilde{K})_1\) is verified and denote \(x_{\min }\in {\mathfrak {H}}\) the vertex for which the minimum is reached. Then, define, for any \(x\in {\mathfrak {H}}\), \(\alpha _x(\omega )=\gamma _x=(1+\varepsilon ){\mathbf 1}{\{x=x_{\min }\}}\) and, recalling (3.5), let \(h(\omega )\) be such that \(x_0(\omega )=-x_{\min }\).

This means that if a RWRE in an elliptic i.i.d. environment verifies conditions \((\widetilde{K})_1\), \((E)_0\) and \((P)_M^{\ell }\) for some \(M\ge 15d+5\) and \(\ell \in S^{d-1}\), then the walk is ballistic in the direction \(\ell \).

3.2.4 Central limit theorems

Under some stronger assumptions, we prove an annealed central limit theorem, using Theorem 2.2, and also a quenched central limit theorem, using the main result of Bouchet, Sabot and dos Santos [5] (improving on previous results by Rassoul-Agha, Seppäläinen [11] and Berger, Zeitouni [2]).

Theorem 3.3

Consider a RWRE in \(\mathbb {Z}^d\) with \(d\ge 2\). Let \(\ell \in S^{d-1}\) and \(M\ge 15d+5\). Assume that the random walk satisfies conditions \((P)_M^\ell \), \((E)_0\) and \((K)_2\). Then we have an annealed central limit theorem, i.e.

converges in law under \(\mathbb {P}_0\) as \(\varepsilon \rightarrow 0\) to a Brownian motion with non-degenerate covariance matrix. Under the same conditions, we also have a quenched central limit theorem, i.e. for \(\mathbf{P}\)-almost every \(\omega \), the previous expression also converges in law under \(P_0^\omega \) as \(\varepsilon \rightarrow 0\) to a Brownian motion with non-degenerate covariance matrix.

4 Discussion on the main results

Let us now address some questions the reader might have about hypothesis \((K)_{1}\).

(1) What does this condition intuitively mean and why?

(2) How do we apply our criterion? Why is this criterion general?

(3) Why do unit hypercubes appear?

Through the course of these explanations we hope to convince the reader that the hypothesis \((K)_1\) is a near optimal criterion for positive speed.

4.1 What does this condition intuitively mean and why?

We believe that \((K)_1\) essentially means that the exit time of a unit hypercube has a finite annealed moment of order \(1+\varepsilon \) for some \(\varepsilon >0\). This would mean that \((K)_1\) and \((H)_1\) cover most cases of RWREs and allow us to determine whether or not the walk has positive speed under the hypotheses \((E)_0\) and \((P)_M^{\ell }\).

Let us now explain why \((K)_1\) and \((H)_1\) are close to complementary. The complementary condition \((H)_1^c\) exactly means that

4.1.1 Why part (1) of \((K)_1\) is typically verified in the positive speed regime

Recall that \(\overline{{\mathfrak {H}}}_0\) is the set of all the hypercubes containing 0.

Lemma 4.1

If \((H)_1^c\) holds, then for any hypercube \(H\in \overline{{\mathfrak {H}}}_0\), we have

The proof of this lemma is straightforward: it follows from the fact that, at any point \(y\in H\), the exit probability of H is at most \(\max _{y\in H} Q^{H}_{y}\).

For part (1) of \((K)_1\), we require that there exist \(\varepsilon >0\) and \(\gamma _y\ge 0\) for all \(y\in H\) such that

or, equivalently by the independence of \(Q^H_y\) for \(y\in H\),

with \({\sum _{y\in H}} \gamma _y \ge 1+\varepsilon \).

In most generic cases, where the tails of all \(Q^H_y\) for \(y\in H\) are sufficiently smooth, e.g. polynomial tails or Dirichlet environment, one can see that this condition is equivalent to \(\mathbf {E}[{\min _{y\in H}}( Q^{H}_{y})^{-(1+\varepsilon )}]<\infty \). This is very similar to the condition in Lemma 4.1, although slightly stronger because of the \(\varepsilon \).

Besides, note that it is easy to see that (4.2) implies that the condition in Lemma 4.1 is verified, indeed:

where we used that for any \(x\in H\) we have \(\min _{y\in H}\frac{1}{ {Q}_{y}^{H}}\le \frac{1}{ {Q}_{x}^{H}}\).

Recalling Theorem 3.1, we know that \((H)_1^c\) holds whenever the speed is positive. We hope to have convinced the reader that part (1) of \((K)_1\) is typically verified in the positive speed regime.

4.1.2 How does part (2) of \((K)_1\) relate to the exit time of hypercubes

Let us now explain why \((K)_1\) and \((H)_1\) are close to complementary.

The following proposition states a condition which is equivalent to \((H)_1^c\).

Proposition 4.1

The condition \((H)_1^c\) holds if, and only if, for any hypercube \(H\in \overline{{\mathfrak {H}}}_0\), we have

Proof

It will be sufficient to show that

which, by translation invariance of the environment, can be equivalently stated in the following way: for any hypercube H containing 0, we have

For all \(x\in {\mathfrak {H}}\), we define the number of visits to x before exiting \({\mathfrak {H}}\) by

and notice that

Define also, for any \(x\in {\mathfrak {H}}\),

which verifies that for any \(y\in {\mathfrak {H}}\)

Now, for any \(x\in {\mathfrak {H}}\) and any starting point \(x_0\in {\mathfrak {H}}\) (could be the same), we get

In particular, we have, for any \(x_0\in {\mathfrak {H}}\),

where the lower bound is obtained by keeping only the term for which \(x=x_0\) in the sum in (4.4).

Thus, \((H)_1^c\), defined in (4.1), holds if, and only if,

which is also equivalent by (4.5) to (4.3). \(\square \)

For technical reasons it is difficult for us to use the condition appearing in Proposition 4.1. Indeed, we want to use large deviations which requires slightly stronger assumptions. The way we strengthen the condition in Proposition 4.1 is similar to what we did in (4.2). In this new case, the random variables \(\widetilde{Q}^{H}_{0,y}\) are correlated. For this reason, we introduce the following condition which is slightly more flexible: there exist random variables \((\alpha _x(\omega ))_{x\in H}\) such that

with \(\sum _{x\in H} \alpha _x(\omega ) \ge 1\), \(\mathbf{P}\)-almost surely. As the reader may notice, this is similar to parts (2) and (3) of condition \((K)_1\). On the one hand, we lost a constant \(\varepsilon \). On the other hand, we only require this condition (4.6) to be verified on a Markovian hypercube instead of all hypercubes containing 0. Only having to verify this property on a single Markovian hypercube gives us a lot of flexibility. The flip side of this flexibility is that we require a slightly stronger condition on the \(\alpha \)’s (see part (3) of \((K)_1\)). This will be discussed in the next section.

At first glance, allowing our exponents \(\alpha _x(\omega )\) to be random in condition \((K)_1\) may seem a bit odd. But this randomness gives us some extra flexibility and makes our condition more general. In particular, it allows us to very easily check that our new condition is more general than previous ones (see Proposition 4.2).

4.1.3 Some comments on part (3) of \((K)_1\)

The reader can easily realize that, because of part (3) of \((K)_1\), it is useless to choose \(\alpha _x(\omega )>\gamma _x\) for any \(x\in {\mathfrak {H}}\).

Such a condition is obviously needed, since part (2) of \((K)_1\) can always be verified with \(\sum _{x\in h(\omega )} \alpha _x(\omega )\ge 1+\varepsilon \). Indeed, using only the transition probabilities at 0, we can always construct a Markovian hypercube \(h(\omega )\) from which the walker can exit in one step with probability at least \(1/(2d)\) through an edge \(e(\omega )\). By assigning \(\alpha _{e(\omega )}(\omega )=2\), we can verify part (2) of \((K)_1\), but part (3) is not necessarily verified.

Intuitively, part (3) of \((K)_1\) prevents us from using too strongly the conditioning provided by \(h(\omega )\). In particular, the tail at 0 of \(\widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\) cannot be much lighter than the one of \(Q_x^{{\mathfrak {H}}}\).

In the next section, we will explain how to choose the Markovian hypercube in order to verify \((K)_\alpha \).

4.2 How do we apply the criterion? Why is this criterion general?

To apply the criterion \((K)_{\alpha }\), we need to find an efficient way of choosing our Markovian hypercube \(h(\omega )\). Generally speaking, one should try to choose the Markovian hypercube \(h(\omega )\) in such a way that the walk can easily move around inside it. This increases the number of potential exit points and makes it easier to verify \((K)_{\alpha }\). Surprisingly, one should not choose the hypercube from which it is the easiest to exit (see Sect. 4.1.3).

The choice of \(\alpha _x(\omega )\) is supposed to reflect how easy it is to exit the hypercube \(h(\omega )\) by the corner \(x+x_0(\omega )\). The choice of \(\alpha _x(\omega )=0\) means that we essentially ignore the possibility of exiting the hypercube in that corner.

In order to illustrate how to apply the criterion \((K)_{\alpha }\), we are going to show that \((K)_{\alpha }\) is more general than the current best criterion for positive speed (see [4]). This will be done in two parts. Firstly, we take the criterion for positive speed exhibited in [4] and show that it implies \((K)_1\). Secondly, we provide an example which verifies \((K)_1\) but no former criterion.

Extending previous results

In [4], the authors introduced the following condition called \((E')_1\): there exists \(\{\phi (e), e\in U\}\in (0,\infty )^{2d}\) such that

-

(1)

\(2\sum _{e\in U} \phi (e)-\sup _{e\in U} (\phi (e)+\phi (-e))>1\),

-

(2)

for every \(e\in U\) we have that

$$\begin{aligned} \mathbf{E}\left[ \exp \left( \sum _{e'\ne e} \phi (e')\log \frac{1}{p^{\omega }(0,e')}\right) \right] <\infty . \end{aligned}$$

It is shown (see Theorem 2 in [4]) that under \((E')_1\) the walk is ballistic provided the conditions \((P)_M\) and \((E)_0\) are verified.
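Part (1) of \((E')_1\) is a purely arithmetic constraint on the weights \(\phi \), so it can be checked mechanically. The following Python sketch is only an illustration: the encoding of U as a list of length 2d in which the weight of \(-e_{i+1}\) is stored at index \(i+d\), as well as the helper names `part_one_margin` and `satisfies_part_one`, are assumptions made here and are not taken from [4].

```python
from fractions import Fraction


def part_one_margin(phi):
    """Return 2*sum(phi) - max_e (phi(e) + phi(-e)) for weights phi on U.

    Assumed encoding (not from the paper): phi has length 2d, phi[i] is the
    weight of e_{i+1} for i < d, and phi[i + d] is the weight of -e_{i+1}.
    """
    if len(phi) == 0 or len(phi) % 2 != 0:
        raise ValueError("phi must have even, positive length 2d")
    if any(w <= 0 for w in phi):
        raise ValueError("all weights must be strictly positive")
    d = len(phi) // 2
    # The supremum over e in U of phi(e) + phi(-e) is a maximum over d pairs.
    sup_pair = max(phi[i] + phi[i + d] for i in range(d))
    return 2 * sum(phi) - sup_pair


def satisfies_part_one(phi):
    """True when the strict inequality of part (1) holds."""
    return part_one_margin(phi) > 1


# Constant weights phi = c in dimension d = 2: the margin equals (4d - 2) * c.
borderline = [Fraction(1, 6)] * 4   # margin exactly 1, so part (1) fails
admissible = [Fraction(1, 5)] * 4   # margin 6/5, so part (1) holds
```

For constant weights \(\phi \equiv c\) the margin equals \((4d-2)c\), so part (1) holds exactly when \(c>1/(4d-2)\); the two lists at the end of the sketch sit on either side of this threshold for \(d=2\).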

Our goal here is to show that the ellipticity condition we present in this paper is more general than those of [6] and [4].

Proposition 4.2

Any random environment verifying the condition \((E')_1\) also verifies \((K)_1\).

Proof

Assume that there exists \(\{\phi (e), e\in U\}\in (0,\infty )^{2d}\) such that \((E')_1\) holds. Then there exists \(\varepsilon >0\) such that

-

(1)

we have

$$\begin{aligned} 2\sum _{e\in U} \phi (e)-\sup _{e\in U} (\phi (e)+\phi (-e))>1+\varepsilon , \end{aligned}$$ (4.7)

-

(2)

for every \(e\in U\) we have that

$$\begin{aligned} \mathbf{E}\left[ \exp \left( \sum _{e'\ne e} \phi (e')\log \frac{1}{p^{\omega }(0,e')}\right) \right] <\infty . \end{aligned}$$ (4.8)

Let us check that the three conditions of \((K)_1\) (see Definition 3.1) are verified. This will prove our proposition.

First condition

The first point (1) of Definition 3.1 of \((K)_1\) holds by choosing for any \(x\in {\mathfrak {H}}\)

because of property (4.8).

The definition of the Markovian hypercube and the third condition

Now, let us construct a marked Markovian hypercube \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\) (see Sect. 3.2.1) fulfilling condition \((K)_1\).

Recall the definition of \(\{e_1,\ldots ,e_d\}\) on page 2 and \({\mathfrak {H}}_0\) at 2. Fix \(\delta \in (0,1/(2d))\) and define the event

then recursively, for all \(k\in \{2,\ldots ,2d\}\),

so that \((A_k)_{1\le k\le 2d}\) forms a partition of \(\Omega \).
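The role of the partition can be illustrated with a short Python sketch. It rests on an assumption about the displayed definitions: that \(A_1=\{p^\omega (0,e_1)\ge \delta \}\) and that \(A_k\) is the event \(\{p^\omega (0,e_k)\ge \delta \}\) with \(A_1\cup \cdots \cup A_{k-1}\) removed. The function name `partition_index` and the list encoding of \(p^\omega (0,\cdot )\) are hypothetical.

```python
def partition_index(p0, delta):
    """Return the index k in {1, ..., 2d} of the event A_k containing the environment.

    ASSUMED reading (labelled as such, not quoted from the paper):
    A_k = {p(0, e_k) >= delta and p(0, e_j) < delta for all j < k}.
    p0[k - 1] is the probability of stepping from 0 to e_k.
    """
    two_d = len(p0)
    if two_d == 0 or two_d % 2 != 0:
        raise ValueError("p0 must list the 2d transition probabilities at 0")
    if not 0 < delta < 1 / two_d:
        raise ValueError("delta must lie in (0, 1/(2d))")
    if abs(sum(p0) - 1) > 1e-9:
        raise ValueError("p0 must sum to 1")
    for k, prob in enumerate(p0, start=1):
        if prob >= delta:
            return k
    # Cannot happen: the largest entry is at least 1/(2d) > delta.
    raise ValueError("no direction has probability at least delta")
```

Under this reading, the events cover \(\Omega \) because the largest of the 2d transition probabilities at 0 is at least \(1/(2d)>\delta \), and they are disjoint by construction.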

Now, we define a Markovian hypercube \(h(\omega )\) such that \(h(\omega )={\mathfrak {H}}_0\) on \(A_k\) for all \(k\in \{1,\ldots ,d\}\), and \(h(\omega )={\mathfrak {H}}_{(-1,\ldots ,-1)}\) on \(A_k\) for all \(k\in \{1+d,\ldots ,2d\}\). Recall the definition (3.5) of \(x_0(\omega )\) and notice that either \(x_0(\omega )=0\) or \(x_0(\omega )=(-1,\ldots ,-1)\).

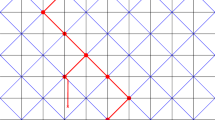

The arrows represent the different strategies for the walker to exit the hypercube efficiently under condition \((E')_1\), starting at 0. On the event \(A_k\), the bold edge can be crossed from 0 to \(e_k\) with probability bounded from below; the other arrows may be hard to cross individually, but as a group they provide a sufficiently accessible escape route.

Let us work on the event \(A_k\), for some \(k\in \{1,\ldots ,2d\}\). We will now label some vertices of the hypercube \(h(\omega )\). Firstly, let \(v_0^{(k)}=0\) be the origin. This vertex \(v_0^{(k)}\) has d neighbors in \(h(\omega )\): let us call them \(v_1^{(k)},\ldots ,v_d^{(k)}\) such that \(v_d^{(k)}=e_k\). Notice that, on the event \(A_k\), \(p^\omega (v_0^{(k)},v_d^{(k)})=p^\omega (0,e_k)\ge \delta \).

The vertex \(v_d^{(k)}\) also has d neighbors in \(h(\omega )\): one of them is \(v_0^{(k)}\), and we call the others \(u_1^{(k)},\ldots ,u_{d-1}^{(k)}\) (they are distinct from the \(v^{(k)}\)’s). Note that none of these vertices is random (their definition depends only on k).

Let us describe ways to exit the hypercube \(h(\omega )\) that will provide lower bounds on quantities of the type \(\widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\) (see Definition 3.1). First, we have to leave the edge \(\{v_0^{(k)},v_d^{(k)}\}=\{0,e_k\}\) (recall that \(p^\omega (0,e_k)\ge \delta \) on \(A_k\)), using one of the vectors that point out of this edge. There are two possibilities:

-

(1)

if this vector makes us exit \(h(\omega )\), we have reached our goal (exiting \(h(\omega )\)),

-

(2)

if this vector leads us to another point of the hypercube, we just continue in the same direction for one more step, which makes us exit the hypercube \(h(\omega )\) (see Fig. 1).

There are many more ways to exit the hypercube, but we will not need them: our lower bounds on the quantities of the type \(\widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\) (see Definition 3.1) will be sufficient.

This intuition will guide us in our choice of \(\alpha _x\). We have labeled 2d of the \(2^d\) vertices of \(h(\omega )\). For any point \(x\in h(\omega )\) such that \(x\notin \{v_0^{(k)},\ldots ,v_d^{(k)},u_1^{(k)},\ldots ,u_{d-1}^{(k)}\}\), we just choose the mark \(\alpha _{x-x_0(\omega )}(\omega )=0\), where \(x_0(\omega )\) is defined in Sect. 3.2.1.

Recalling the definition of \(\gamma _x\) at (4.9), let us define

as well as, for all \(i\in \{1,\ldots ,d-1\}\),

Notice that, if we set \(u_0^{(k)}=v_d^{(k)}(=e_k)\),

Hence, by (4.7), there exists \(\varepsilon >0\) such that for any \(k\in \{1,\ldots ,2d\}\), on the event \(A_k\),

Second condition for a Markovian hypercube

We have described how to obtain lower bounds on \(\widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\) for \(x\in {\mathfrak {H}}\) in the paragraph describing the intuition behind our choice of \(\alpha _x\). Thus, on the event \(A_k\) for any \(k\in \{1,\ldots ,2d\}\), using that \(p^\omega (0,e_k)=p^\omega (0,v_d^{(k)}-v_0^{(k)})\ge \delta \), and recalling that \(u_0^{(k)}=v_d^{(k)}=e_k\), we can see that

Recalling that \(\alpha _{x}(\omega )=0\) as soon as \((x_0(\omega )+x)\notin \{v_0^{(k)},\ldots ,v_d^{(k)},u_1^{(k)},\ldots ,u_{d-1}^{(k)}\}\), we deduce by regrouping the terms properly that:

Notice that, on the right-hand side of the previous equation, the following terms are \(\mathbf{P}\)-independent:

-

(1)

\(\prod _{e\in U, e\ne e_k} \left( p^\omega (v_0^{(k)},e)\right) ^{-\phi (e)}\),

-

(2)

\(\prod _{e\in U, e\ne -e_k} \left( p^\omega (u_0^{(k)},e)\right) ^{-\phi (e)}\),

-

(3)

\(p^\omega (v_i^{(k)},v_i^{(k)}-v_0^{(k)})\), for all \(i\in [1,d-1]\),

-

(4)

\(p^\omega (u_i^{(k)},u_i^{(k)}-u_0^{(k)})\), for all \(i\in [1,d-1]\).

Hence, for any \(k\in \{1,\ldots ,2d\}\), the annealed expectation of the previous quantity is finite, by (4.8). Thus, using translation invariance, we obtain that

This concludes the proof, together with (4.9) and (4.10), since all three parts of the definition of \((K)_1\) are verified. \(\square \)

An example that verifies \((K)_1\) but no former criteria for ballistic behavior

We are now going to introduce an example in which we can verify \((K)_1\) but not \((E')_1\), showing that the ellipticity criterion \((K)_1\) is more general. This example also satisfies condition \((E)_0\), and we will also prove that it verifies condition (T) and thus directional transience.

Let T be a random variable such that \(2d+1\le T<\infty \) \({\mathbf P}\)-a.s., \(\mathbb {E}[T^{\frac{1}{4d}}]=\infty \) and \(\mathbb {E}[T^{\frac{1}{8d}}]<\infty \). Furthermore, we introduce an independent random variable \(i_0\) that is uniform on \(\{1,\ldots ,2d\}\).
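Such a random variable exists. As an illustration (this particular choice is an assumption made here and is not needed in the sequel), let V be uniform on (0, 1) and set \(T=(2d+1)V^{-4d}\). Then \(2d+1\le T<\infty \) a.s. and, for any \(s>0\),

$$\begin{aligned} \mathbb {E}[T^{s}]=(2d+1)^{s}\int _0^1 v^{-4ds}\,dv, \end{aligned}$$

which is finite if and only if \(4ds<1\). For \(s=\frac{1}{4d}\) the integrand is \(v^{-1}\) and the integral diverges, whereas for \(s=\frac{1}{8d}\) the integrand is \(v^{-1/2}\) and the integral equals 2.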

Let us now define \(p^\omega (0,\cdot )\) in terms of T and \(i_0\) as this will give us the transition probabilities for this walk. Let \(\varepsilon \in (\frac{1}{2d+1},\frac{2d}{2d+1})\) and set:

Let us denote by \({\mathbf P}^{\text {expl}}[\cdot ]\) the law of this environment.

Proposition 4.3

The environment \(\mathbf{P}^{\text {expl}}[\cdot ]\) verifies \((K)_1\) but does not verify \((E')_1\). Furthermore, a RWRE in an environment given by \({\mathbf P}^{\text {expl}}[\cdot ]\) verifies condition (T) and \((E)_0\).

Proof

Note that \(\mathbb {E}[T^{\frac{1}{8d}}]<\infty \) ensures that \((E)_0\) holds. Let us prove that the walk is directionally transient and verifies condition \((T')\).

The transition probabilities of \(\mathbf{P}^{\text {expl}}[\cdot ]\) are such that the walk has a strong drift toward \(\ell _0=e_1+\cdots +e_d\), as soon as \(\varepsilon \) is small enough. Indeed, we have

Thus, the process \((X_n\cdot \ell _0)_n\) is a random walk on \({\mathbb {Z}}\) in a deterministic environment such that it performs a jump toward the right with probability \(1-\varepsilon \). Therefore, it is now clear that the walk X is transient towards \(\ell _0\) and

Verifying condition (T)

We want to prove condition \((T)^{\ell _0}\) (see (1.4)), which means that we need to work with a neighborhood of \(\ell _0\). For this, we consider \(\ell _0'=\ell _0+\sum _{i=1}^{d}\varepsilon _ie_i\), where, for all \(i\in \{1,\ldots ,d\}\), \(\varepsilon _i\in (-\varepsilon ,\varepsilon )\). Notice that, for all \(n\in {\mathbb {N}}\), \(X_n\cdot \ell _0'=X_n\cdot \ell _0+\sum _{i=1}^d \varepsilon _i (X_n\cdot e_i)\). Since \(|\varepsilon _i|<\varepsilon \) and \(\sum _{i=1}^d |X_n\cdot e_i|\le n\), we have \(X_n\cdot \ell _0'\ge X_n\cdot \ell _0-\varepsilon n\).

Now, using a standard large deviation type argument, we can show that for some \(\lambda >0\) small enough, we have for all \(M>0\)

to prove this inequality we use the fact that \(\varepsilon <1/5\). Furthermore, we get:

where, for \(x\in \mathbb {Z}\), \(T_x^{(X_n\cdot \ell _0')}\) denotes the first hitting time of x by the walk \(X_n\cdot \ell _0'\).

We can now check easily that condition (T) is verified by choosing \(a=L\ge 1\) and \(M=bL\), for some \(b>0\), since

Verifying that condition \((E')_1\) is not satisfied

Let us prove that the condition \((E')_1\) is not satisfied. Indeed, for any family of real numbers \(\{\phi (e), e\in U\}\in (0,\infty )^{2d}\) such that

there exists \(e_0\in U\) such that \(\phi (e_0)\ge 1/(4d)\). Then, we have

in particular this implies that \((E')_1\) is not satisfied.
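The existence of such an \(e_0\) follows from a short counting argument, sketched here under the assumption that the constraint imposed on \(\phi \) is part (1) of \((E')_1\). Since the weights are positive, the supremum in part (1) is nonnegative, so

$$\begin{aligned} 1<2\sum _{e\in U} \phi (e)-\sup _{e\in U} (\phi (e)+\phi (-e))\le 2\sum _{e\in U} \phi (e)\le 4d\,\max _{e\in U}\phi (e), \end{aligned}$$

where the last inequality uses that U contains 2d vectors. Hence \(\max _{e\in U}\phi (e)>1/(4d)\), and any maximizer can serve as \(e_0\).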

Verifying that condition \((K)_1\) is satisfied

On the other hand, let us prove that condition \((K)_1\) is verified. By the definition of \(\mathbf{P}^{\mathrm{expl}}[\cdot ]\), for any \(x\in {\mathfrak {H}}\), \(Q_x^{{\mathfrak {H}}}\ge \varepsilon /d\) \(\mathbf{P}\)-a.s., and thus \(1/Q_x^{{\mathfrak {H}}}\) has finite moments of all orders. At this point one could conclude the proof by using Remark 3.3.

For illustrative purposes, we shall also verify \((K)_{\alpha }\) for any given \(\alpha >0\). We can choose, for instance, \(\gamma _x=\alpha +1\) for all \(x\in {\mathfrak {H}}\) in order to verify the first property of \((K)_{\alpha }\). Then, we define a Markovian hypercube such that \(h(\omega )={\mathfrak {H}}\) \(\mathbf{P}\)-a.s., so that it is in fact deterministic and \(x_0(\omega )=0\) \(\mathbf{P}\)-a.s. Then, we choose \(\alpha _0(\omega )=\alpha +1\) \(\mathbf{P}\)-a.s. and, for any \(x\in {\mathfrak {H}}\setminus \{0\}\), we fix \(\alpha _x(\omega )=0\) \(\mathbf{P}\)-a.s. This implies that

and we conclude with

This means that \((K)_{\alpha }\) is verified for any \(\alpha >0\) (with \(\varepsilon =1\)). \(\square \)

4.3 Why do unit hypercubes appear?

Let us explain informally why traps can only exist if the walk can get trapped inside a hypercube. Intuitively, if there is a finite shape \(\mathcal {S}\) in which the walk stays trapped, then every edge leading out of this shape has an abnormally small probability of being crossed (making this edge a rare one). If the “corners” of that shape were translated onto the hypercube \({\mathfrak {H}}\), using the i.i.d. character of the environment, we could create a trap inside \({\mathfrak {H}}\) (see Fig. 2). This trap inside \({\mathfrak {H}}\) should typically be more likely to appear than the initial trap in \(\mathcal {S}\) since we have diminished the number of atypically “hard-to-cross” (thus rare) edges.

Conversely, we should also show that there are RWREs in elliptic i.i.d. environments in \(\mathbb {Z}^d\) with \(d\ge 2\) that have zero speed but cannot be trapped on only one edge.

Let T be a positive random variable verifying that \(\mathbf{P}[T\le \frac{1}{2}]=1\) and \(\mathbf{P}[T^{-1}\ge n]\ge cn^{-1/2^d}\). Furthermore, we introduce an independent random variable \(B_0\) which is uniform on the set of orthonormal bases of \(\mathbb {Z}^d\) (i.e. \(B_0\) is some orthonormal basis).

We are going to define \(p^{\omega }(0,\cdot )\) in terms of T and \(B_0\) as this will give us the law of the transition probabilities for our walk. We set

In this model, we typically imagine that T is small which means that the edges in \(B_0\) are hard to cross whereas the others are not. It is obvious that the walk cannot get trapped on a single edge. Indeed, from every point there are at least d edges which have probability at least 1 / (2d) of being taken.

On the other hand, the exit time of a hypercube has infinite expectation. Indeed, let us introduce the random variables \(B_0^{(x)}\), for all \(x\in {\mathbb {Z}}^d\), which are distributed like \(B_0\) such that they are all independent from each other. Similarly we introduce \(T_0^{(x)}\), for any \(x\in {\mathbb {Z}}^d\).

Let us consider the event \(\{B_0^{(x)}=\partial _x {\mathfrak {H}} ,\text { for all } x\in {\mathfrak {H}}\}\). This event has positive probability, namely \((2^{-d})^{2^d}\). On this event, from any point \(x\in {\mathfrak {H}}\), we know that \(P^{\omega }_x[X_1\notin {\mathfrak {H}}]\le \max _{y\in {\mathfrak {H}}} \frac{T_0^{(y)}}{d}\). This implies that

To see that the right-hand side is infinite, we simply compute the tail of \(\min _{x\in {\mathfrak {H}}} T^{(x)}\),

which is non-integrable. This means that \(\mathbb {E}[T^{\text {ex}}_{{\mathfrak {H}}}] =\infty \). This indicates that trapping occurs. If the walk were directionally transient, then we could use Theorem 3.1 and prove that the walk is sub-ballistic, even though one single edge is not enough to trap a walk. Nevertheless, since the transition probabilities are symmetric, the walk is not directionally transient.
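The tail computation can be sketched as follows, assuming (as in the construction above) that the \(2^d\) variables \(T_0^{(x)}\), \(x\in {\mathfrak {H}}\), are independent copies of T. The bound \(\max _{x\in {\mathfrak {H}}} T_0^{(x)}\le 1/n\) holds exactly when \((T_0^{(x)})^{-1}\ge n\) for every \(x\in {\mathfrak {H}}\), so that

$$\begin{aligned} \mathbf{P}\left[ \min _{x\in {\mathfrak {H}}} \left( T_0^{(x)}\right) ^{-1}\ge n\right] =\mathbf{P}\left[ T^{-1}\ge n\right] ^{2^d}\ge \left( cn^{-1/2^d}\right) ^{2^d}=c^{2^d}n^{-1}. \end{aligned}$$

Since \(\sum _{n\ge 1} n^{-1}=\infty \), a nonnegative random variable with such a tail has infinite expectation.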

In order to address this issue, let us introduce the following similar model. Recall the notation T and \(B_0\) defined above (4.11). We will now define a RWRE in \(\mathbb {Z}^{d+1}\). For this, let us point out that \(B_0\) is a d-dimensional basis in a \((d+1)\)-dimensional space such that a.s. \(B_0\cap \{e_{d+1},e_{2(d+1)}\}=\emptyset \). Moreover, we have that a.s. \(\{e \in U,\ e\in B_0 \text { or } -e\in B_0\}=U\setminus \{e_{d+1},e_{2(d+1)}\}\), where U is the set of the \(2(d+1)\) unit vectors of \({\mathbb {Z}}^{d+1}\). After noticing this, we can set

where C(T, d) is a normalizing constant so that \(q(0,\cdot )\) yields a probability transition. Since \(T\le 1/2\) a.s., an elementary computation shows that \(d\le C(T,d)\le 2(d+1)\).

Let us call \(\mathbf{Q}^{\text {ex}}[\cdot ]\) the law of the i.i.d. environment arising from the previous construction (Fig. 3).

Transition probability around a square \({\mathfrak {S}}\) in \({\mathbb {Z}}^3\) on the event \(\{B_0^{(x)}=\partial _x{\mathfrak {S}}\setminus \{e_{3},e_{6}\}\text {, for all } x\in {\mathfrak {S}}\}\). Bold edges are crossed with a lower bounded probability. Direction \(e_3\) is preferred among those leading out of \({\mathfrak {S}}\).

Proposition 4.4

Let \(X_n\) be the RWRE in the environment given by \(\mathbf{Q}^{\text {ex}}[\cdot ]\). For any \(d\ge 1\), it verifies that

-

(1)

\(X_n\) is transient in the direction \(e_{1+d}\).

-

(2)

\(X_n\) has zero velocity.

If furthermore \(d\ge 2\), then the walk \(X_n\) is unlikely to localize on one edge in the sense that there exists \(C(d)<\infty \) such that

Proof

The directional transience follows immediately from the fact that \(X_n\cdot e_{1+d}\) is a time-changed 2-biased random walk on \(\mathbb {Z}\).

A computation similar to (4.12) proves that the annealed exit time of a hypercube is infinite. The directional transience and Theorem 3.1 imply that the asymptotic velocity is 0.

We know that from every point there are at least d edges which have probability at least \(1/(4(d+1))\) of being taken. If \(d\ge 2\), it can then easily be shown that the time spent on one edge is stochastically upper-bounded by a geometric random variable of parameter \(1/(4(d+1))\). The final point of our proposition therefore follows from a simple union bound. \(\square \)

Remark 4.1

In fact, this walk also verifies condition \((P)_M^{e_{d+1}}\) for some \(M\ge 15d+5\), see Theorem 4 of [4].

5 Proof of Theorem 3.2 and Theorem 3.3

This section is dedicated to the proof of Theorem 3.2 and Theorem 3.3 which state respectively the positive speed and central limit theorems for transient random walks in an elliptic i.i.d. environment satisfying \((E)_0\) (see (1.6)), the polynomial condition (see (1.5)) and the ellipticity conditions \((K)_{\alpha }\), defined by Definition 3.1.

The following proposition gives an estimate on the tail of the regeneration time \(\tau _1\), defined in Sect. 2.1.

Proposition 5.1

Let \(\ell \in S^{d-1}\), \(\alpha >0\) and \(M\ge 15d+5\). Assume that \((P)_M^\ell \) is satisfied and that the ellipticity conditions \((E)_0\) and \((K)_\alpha \) hold (resp. defined in (1.6) and (3.1)). Then, there exists \(\delta >0\) such that

On the one hand, this Proposition implies positive speed (see Theorem 3.2) by using Theorem 2.2. On the other hand, it also implies the central limit theorems (see Theorem 3.3), by using Theorem 2.2 for the annealed case and the main result of Bouchet, Sabot and dos Santos [5] for the quenched case. Note that, for the latter, we need to prove that condition \((T)'\) is verified under our assumptions: as we have stated in Sect. 1.2, it has been shown in [6] that under \((E)_0\) the polynomial condition \((P)_M\) is equivalent to \((T)'\). We properly state this result in Sect. 5.2 (see Theorem 5.1).

The goal is thus to prove Proposition 5.1, which is done in Sect. 5.3. The proof of Proposition 5.1 relies on two results of [6] which state that, under \((E)_0\), the polynomial condition is equivalent to condition \((T')\) (see Theorem 5.1) and that some atypical quenched exit estimates hold (see Proposition 5.3).

Another key ingredient of the proof is that, under \((K)_1\), with high probability, the walker reaches some point sufficiently far away with sufficiently large quenched probability. The exact meaning of this sentence will be clarified in Proposition 5.2 and Corollary 5.1 in the following section.

These three arguments allow us to derive an estimate on the tail of the regeneration time \(\tau _1\), using arguments similar to those used by Sznitman in [18].

5.1 Attainability estimates

Let us prove the following result which is needed for the proof of Theorem 3.2 and Theorem 3.3. For this purpose, let us define the following \(2^d\) paths starting at 0 and reaching a point at distance n by visiting at most \(n+2^d\) vertices, and without coming back to 0. We are going to use the marked Markovian hypercube \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\) associated to condition \((K)_\alpha \) and its particular corner \(x_0(\omega )\), defined in (3.5).

As we will see, these paths are not really paths but rather unions of trajectories. Indeed, we construct these objects in two steps. The first step consists in (starting at 0 and without coming back to 0) going out of the Markovian hypercube reaching some neighbor y of the hypercube. This does not define one path but a union of paths. For the second step, we construct an actual path that starts in y and goes more or less straight away from 0, without intersecting itself (see Fig. 4). We allow ourselves to misname these objects and call them paths as the important point is that they go from 0 to some point that is far away without coming back to 0. Moreover, even though we do not control the number of steps in these paths, we can upper bound the number of different points that they visit.

For any \(x\in {\mathfrak {H}}\) and \(n\in {\mathbb {N}}\), let \({\mathcal {Y}}^{(n)}_x=(y_0^{(x)},\ldots ,y_n^{(x)})\) be the path constructed as follows:

-

(1)

the path starts at \(y_0^{(x)}=0\);

-

(2)

the path goes out of the marked Markovian hypercube \((h(\omega ),(\alpha _z(\omega ))_{z\in {\mathfrak {H}}})\), without coming back to 0, and via a neighbour \(y_1^{(x)}\) of \(x_0(\omega )+x\) such that

$$\begin{aligned}&P^\omega _0\left[ T_{\partial h(\omega )}< T_0^+, X_{T_{\partial h(\omega )}}=y_1^{(x)}\right] \\&\quad =\max _{y\in \partial _{x_0(\omega )+x} h(\omega )} P^\omega _0\left[ T_{\partial h(\omega )}< T_0^+, X_{T_{\partial h(\omega )}}=y\right] , \end{aligned}$$

and \(y_1^{(x)}\) is chosen arbitrarily if several vertices realize this last equality;

-

(3)

the rest of the path is a nearest-neighbour path such that, for all \(i\in \{1,\ldots ,n-1\}\), \(y_{i+1}^{(x)}\) is a neighbour of \(y_i^{(x)}\) such that

$$\begin{aligned} p^\omega \left( y_i^{(x)},y_{i+1}^{(x)}-y_i^{(x)}\right) =\max _{e\in U: y_i^{(x)}+e\notin {\mathfrak {H}}_{y_i^{(x)}-x}}p^\omega \left( y_i^{(x)},e\right) = Q_{y_i^{(x)}}^{{\mathfrak {H}}_{y_i^{(x)}-x}}, \end{aligned}$$

where Q is defined in (3.3), \({\mathfrak {H}}_{y_i^{(x)}-x}\) is defined in (3.1), and \(y_{i+1}^{(x)}\) is chosen arbitrarily if several vertices realize this last equality.

See Fig. 4 for a scheme of this construction. Note also that, for all \(x\in {\mathfrak {H}}\), \(y_0^{(x)}\) and \(y_1^{(x)}\) are not necessarily neighbors.

Essentially, the path \({\mathcal {Y}}^{(n)}_x\) first goes out of the Markovian hypercube using the corner \(x_0(\omega )+x\) and then continues in the same global direction for \(n-1\) steps (see Fig. 4), such that it goes further from 0 at each step, using the same orthonormal basis that points out of \(h(\omega )\) from \(x_0(\omega )+x\). Hence, for all \(x\in {\mathfrak {H}}\), \(|y_n^{(x)}|_1\ge n\).

Notice that once the paths \(({\mathcal {Y}}^{(n)}_x)_{x\in {\mathfrak {H}}}\) are out of the Markovian hypercube, they do not intersect. This fact is very helpful in the computations of Proposition 5.2 below.
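Step (3) of the construction can be sketched in Python. This is only an illustration under stated assumptions: we take \({\mathfrak {H}}=\{0,1\}^d\), so that the directions pointing out of the hypercube at the corner x are \(+e_j\) when \(x_j=1\) and \(-e_j\) when \(x_j=0\); the toy environment produced by `make_iid_environment` (normalized exponential weights) and all function names are hypothetical and are not part of the paper.

```python
import random


def outward_directions(corner):
    """Unit vectors pointing out of {0,1}^d at `corner` (a tuple of 0/1 entries)."""
    d = len(corner)
    dirs = []
    for j, bit in enumerate(corner):
        e = [0] * d
        e[j] = 1 if bit == 1 else -1
        dirs.append(tuple(e))
    return dirs


def make_iid_environment(d, seed=0):
    """Toy i.i.d. elliptic environment (illustration only).

    At each site, the 2d transition probabilities are independent
    exponential weights, normalized to sum to 1 and sampled lazily.
    """
    rng = random.Random(seed)
    units = [tuple(s if j == i else 0 for j in range(d))
             for i in range(d) for s in (1, -1)]
    cache = {}

    def p(y, e):
        if y not in cache:
            weights = [rng.expovariate(1.0) for _ in units]
            total = sum(weights)
            cache[y] = {u: w / total for u, w in zip(units, weights)}
        return cache[y][e]

    return p


def greedy_path(y1, corner, n, p):
    """Build (y_1, ..., y_n): at each site take the most likely outward step.

    Returns the path and the product of the transition probabilities used,
    which plays the role of the product of the Q terms in the text.
    """
    dirs = outward_directions(corner)
    path, weight = [tuple(y1)], 1.0
    for _ in range(n - 1):
        y = path[-1]
        best = max(dirs, key=lambda e: p(y, e))
        weight *= p(y, best)
        path.append(tuple(a + b for a, b in zip(y, best)))
    return path, weight


p = make_iid_environment(d=2, seed=1)
path, weight = greedy_path(y1=(1, 2), corner=(1, 1), n=5, p=p)
```

Because every step uses one of the d directions attached to the same corner, the \(\ell ^1\)-distance to \(y_1\) grows by exactly one at each step and the path never intersects itself, which is the property used in the text.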

Even though \({\mathcal {Y}}^{(n)}_x\) is not a path, we define the quenched probability \(\pi _x^{(n)}\) of the path \({\mathcal {Y}}^{(n)}_x\) as the following quantity

dropping the superscript “(x)” of the \(y_i\)’s for simplicity.

The next proposition states that, with high \(\mathbf {P}\)-probability, one of the paths depicted in Fig. 4 has a decent chance of being followed.

Proposition 5.2

Consider a RWRE in an elliptic environment satisfying condition \((K)_\alpha \), \(\alpha >0\). For any \(\delta >0\), there exists a constant \(\eta >0\), such that, for any u large enough, we have

where \(\lfloor \cdot \rfloor \) is the floor function, \(\pi _x^{(n)}\) is defined by (5.1) and \(\varepsilon \) comes from condition \((K)_\alpha \).

Proof

Let us emphasize again some facts about the paths \({\mathcal {Y}}_x^{(n)}\), \(x\in {\mathfrak {H}}\), \(n\in {\mathbb {N}}\), and their quenched probability \(\pi _x^{(n)}\):

-

(1)

out of the Markovian hypercube \(h(\omega )\), the paths do not intersect, i.e.

$$\begin{aligned} \bigcap _{x\in {\mathfrak {H}}} \left\{ y_i^{(x)},i\in \{1,\ldots ,n\}\right\} =\emptyset ; \end{aligned}$$

-

(2)

for any \(i\in \{1,\ldots ,n-1\}\), conditionally on \(h(\omega )\) and \(y_0^{(x)},\ldots ,y_i^{(x)}\), for all \(x\in {\mathfrak {H}}\), the quenched probabilities \(p^\omega (y_i^{(x)},y_{i+1}^{(x)}-y_i^{(x)})\) are independent and are distributed like the random variable \(Q_{x}^{{\mathfrak {H}}}\) defined in (3.3). These independence properties rely on the fact that \(h(\omega )\) is a Markovian hypercube, in particular here we use Remark 3.2.

-

(3)

using the definition (3.4) of \(\widetilde{Q}\) and the property (2) of the construction page (26), we have that, for any \(x\in {\mathfrak {H}}\),

$$\begin{aligned} P^\omega _0\left[ T_{\partial h(\omega )}< T_0^+, X_{T_{\partial h(\omega )}}=y_1^{(x)}\right] \ge \frac{1}{d} \widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}, \end{aligned}$$

thus, using (5.1),

$$\begin{aligned} \pi _x^{(n)}\ge \frac{1}{d} \widetilde{Q}_{0,x_0(\omega )+x}^{h(\omega )}\prod _{i=1}^{n-1} Q_{y_i}^{{\mathfrak {H}}_{y_i-x}}. \end{aligned}$$

Now, fix \(\eta >0\) and \(\delta >0\) and recall Definition 3.1 of the marks \((\alpha _x(\omega ))_{x\in {\mathfrak {H}}}\) of the marked Markovian hypercube \((h(\omega ),(\alpha _x(\omega ))_{x\in {\mathfrak {H}}})\). Using Markov's inequality, the condition \((K)_\alpha \) and the previous remarks, we have, for all \(u>\exp (1/\eta )\),

where we used all previously mentioned independence properties. Introducing

which is finite thanks to condition \((K)_\alpha \), we can see that

Finally, for \(\eta >0\) small enough and u large enough,

\(\square \)

The proof of the following consequence is straightforward.

Corollary 5.1

Consider a RWRE in an elliptic environment satisfying condition \((K)_\alpha \), \(\alpha >0\). For any \(\delta >0\), there exists a constant \(\eta >0\), such that, for any u large enough, we have

where \(\lfloor \cdot \rfloor \) is the floor function and \(\varepsilon >0\) comes from condition \((K)_\alpha \).

5.2 Polynomial condition and atypical quenched exit estimates

In this section, we just recall two results previously obtained by Campos and Ramírez in [6]. This uses conditions \((E)_0\), \((P)_M^\ell \) and \((T')\) defined respectively at (1.6), (1.5) and (1.4).

This first theorem states that, under some mild assumptions, the polynomial condition implies condition \((T')\).

Theorem 5.1

(Theorem 1.1 of Campos and Ramírez [6]) Consider a random walk in an i.i.d. environment in dimensions \(d\ge 2\). Let \(\ell \in S^{d-1}\) and \(M\ge 15d+5\). Assume that the environment satisfies the ellipticity condition \((E)_0\). Then the polynomial condition \((P)_M^\ell \) is equivalent to \((T')^\ell \).

The following proposition allows us to compute atypical quenched exit estimates. Before stating the results, we need some definitions, similar to those introduced in [4, 6].

Let us consider a RWRE in an elliptic i.i.d. environment and \(\ell \in S^{d-1}\), then \((P)_M^\ell \) implies that the walk has an asymptotic direction (see [15]), i.e. the following limit exists:

There exists \(i_0\in [1,2d]\) such that \(\hat{v}\cdot e_{i_0}>0\). Assume also that \(e_{i_0}\) is the vector of the canonical basis which is the nearest to \(\hat{v}\), so that the angle between \(\hat{v}\) and \(e_{i_0}\) is upper-bounded and we have

Moreover, for any \(z\in {\mathbb {Z}}^d\), let P(z) be the projection of z on \(\hat{v}\) along the hyperplane \(H=\{x\in \mathbb {R}^d:x\cdot e_{i_0}=0\}\), defined by

and let Q(z) be the projection of z on H along \(\hat{v}\) so that

For \(x\in {\mathbb {Z}}^d\), \(\beta >0\) and \(L>0\), we define the tilted boxes with respect to the asymptotic direction \(\hat{v}\) by:

and their front boundary by

Remark 5.1

An elementary geometric computation (see Fig. 5) shows that, for \(x_1,x_2\in \mathbb {Z}^d\) such that \((x_1-x_2)\cdot \hat{v} \ge 0\) and \(x_2\in B_{\beta ,L}(x_1)\), we have \(\left| \left| x_1-x_2\right| \right| _{\infty }\le (1+\sqrt{d})L^{\beta }\) since \(\hat{v}\cdot e_{i_0}\ge \frac{1}{\sqrt{d}}\).

Now, we have the following result from [6], which will be useful to show that it is extremely unlikely (super-exponentially so) that the environment typically sends the walker against \(\hat{v}\) for a long distance.

Proposition 5.3

(Proposition 4.1 of Campos and Ramírez [6]) Assume that \((E)_0\) holds and that \((P)_M^\ell \) is also satisfied for some \(M\ge 15d+5\). Let \(\beta _0\in (1/2,1)\), \(\beta \in (\frac{\beta _0+1}{2},1)\) and \(\zeta \in (0,\beta _0)\). Then, for each \(\gamma >0\), we have that

where \(g(\beta _0,\beta ,\zeta )=\min \left\{ \beta +\zeta , 3\beta -2+(d-1)(\beta -\beta _0)\right\} \).
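The exponent \(g(\beta _0,\beta ,\zeta )\) is easy to evaluate for concrete parameters. The following Python sketch simply transcribes the formula above together with the parameter ranges of Proposition 5.3; the function name `g_exponent` and the choice to pass the dimension d explicitly are assumptions made for the illustration.

```python
from fractions import Fraction


def g_exponent(beta0, beta, zeta, d):
    """Evaluate g(beta0, beta, zeta) = min{beta + zeta, 3*beta - 2 + (d-1)*(beta - beta0)}.

    The parameter ranges are those stated in Proposition 5.3:
    beta0 in (1/2, 1), beta in ((beta0 + 1)/2, 1), zeta in (0, beta0), d >= 2.
    """
    if d < 2:
        raise ValueError("the dimension d must be at least 2")
    if not 0.5 < beta0 < 1:
        raise ValueError("beta0 must lie in (1/2, 1)")
    if not (beta0 + 1) / 2 < beta < 1:
        raise ValueError("beta must lie in ((beta0 + 1)/2, 1)")
    if not 0 < zeta < beta0:
        raise ValueError("zeta must lie in (0, beta0)")
    return min(beta + zeta, 3 * beta - 2 + (d - 1) * (beta - beta0))


# Exact arithmetic with fractions avoids rounding issues in the comparison.
example = g_exponent(Fraction(3, 5), Fraction(9, 10), Fraction(1, 2), d=2)
```

With \(\beta _0=3/5\), \(\beta =9/10\), \(\zeta =1/2\) and \(d=2\), the two candidates are \(7/5\) and \(27/10-2+3/10=1\), so the minimum is attained by the second term.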

In the next section, we will also need the following result that gives an equivalent criterion for \((T)_\gamma \), defined in (1.4). For this purpose, define, for any \(n\ge 1\), the n-th regeneration radius by

where the \(\tau _n\)’s are defined in (2.4).

The next result from Sznitman shows that if a walk verifies condition \((T)_\gamma ^\ell \) then the trajectory of the walk goes fairly directly in the direction \(\ell \), more precisely it is shown in [19] that the space explored between two regeneration times has good tails.

Proposition 5.4

(Sznitman [19]) Consider a RWRE in an elliptic i.i.d. environment. Let \(\gamma \in (0,1)\) and \(\ell \in S^{d-1}\). Assume that \((T)_\gamma ^\ell \) holds. Then, there exists a constant c such that, for every L and \(n\ge 1\), we have that

5.3 Estimates on the tail of \(\tau _1\): proof of Proposition 5.1

Here, we prove Proposition 5.1, which concludes the proof of Theorem 3.2 and Theorem 3.3.

Proof of Proposition 5.1

We want to give an estimate on the tail of \(\tau _1\). For this purpose, we define, for \(u>0\), the scale

where \(c_1\in (0,1)\), \(\eta >0\) and \(\beta \in (0,1)\) are constants which will be specified later on. We also define the box

using the definition (5.2) of \(\hat{v}\) and recalling \(\hat{v}\cdot e_{i_0}\ge 1/\sqrt{d}\).

Now, notice that

where \(T_{C_L}^{\mathrm{ex}}\) is the first time the walker is out of \(C_L\). Now, we want to give an upper bound for both of these quantities. For the first one, we will use condition \((T)_\gamma \) and we will use, for the second one, the atypical quenched exit estimates of Proposition 5.3.

Upper bound for the first term of the right-hand side of (5.6)

First, we can give an estimate for the first quantity using Theorem 5.1 and Proposition 5.4, so that for any \(\gamma \in (\beta ,1)\),

Upper bound for the second term of the right-hand side of (5.6)

Now, let us give an estimate for the second quantity of the right-hand side of (5.6). The general strategy is first to notice that, on the event \(\{T_{C_L}^{\text {ex}}>u\}\), there exists some vertex \(x\in C_L\) such that the probability, starting from x, of coming back to x before exiting \(C_L\) is not too small. On the other hand, Corollary 5.1 implies that there exists another point y, sufficiently far away from x, such that the probability to go from x to y without coming back to x is large enough. These two facts together will imply that the quenched probability to exit a tilted box (see (5.3)) through its sides or its back side is large: this is an atypical quenched exit estimate, whose \(\mathbf{P}\)-probability is upper bounded by Proposition 5.3.

On the event \(\{T_{C_L}^{\text {ex}}>u\}\), there is a.s. a random \(x_1\in C_L\) such that

which means that

Note that, for any \(x\in C_L\), if the walk starts from x, then \({\mathcal {N}}_x\) is a geometric random variable of parameter \(P_x^\omega \left( T_{C_L}^{\text {ex}}<T_x^+\right) \), hence we get

Using the two last equations, we see that

Let \({\mathcal {A}}\) be the event on which there exists \(x_1\in C_L\) and a vertex \(x_2\in \mathbb {Z}^d\) such that \(\left| x_2-x_1\right| _1= \lfloor \eta \log (u)\rfloor \) and

-

(1)

the following inequality holds

$$\begin{aligned} P_{x_1}^\omega \left[ T_{C_L}^{\text {ex}}<T_{x_1}^+\right] <2 \frac{|C_L|}{u}L; \end{aligned}$$

-

(2)

and the following inequality holds

$$\begin{aligned} P^\omega _{x_1}\left[ T_{x_2}<T^+_{x_1}\right] \ge u^{-\frac{\alpha +2\delta }{\alpha +\varepsilon }}. \end{aligned}$$

We can see that on \({\mathcal {A}}\) we have for u large enough,

which, in particular, implies that \(x_2\in C_L\).

Besides, recall that \((K)_\alpha \) holds and that some \(\varepsilon >0\) is associated with that condition. Then, fixing \(\delta \in (0,\varepsilon /4)\), by Corollary 5.1 there exists \(\eta >0\) small enough such that, as soon as u is large enough,

Using (5.9) and (5.11), we get the upper bound:

Note also that, as soon as u is large enough and using (5.5),

recalling that \(c_1\in (0,1)\) is a constant that we will fix later on.

Now, let us consider the tilted box \(B_{\beta ,L}(x_1)\) defined in (5.3) with \(c_1<(4d^2)^{-1}\) and distinguish two cases on the event \({\mathcal {A}}\).

First case: on the event \({\mathcal {A}}\cap \left\{ x_2\in B_{\beta ,L}(x_1)\right\} \)

First, if \(x_2\in B_{\beta ,L}(x_1)\), we will prove that \(x_1\notin B_{\beta ,L}(x_2)\).

Let us assume by contradiction that \(x_1\in B_{\beta ,L}(x_2)\). We can then see, by Remark 5.1 (which can always be applied since \((x_1-x_2)\cdot \hat{v} \ge 0\) or \((x_2-x_1)\cdot \hat{v} \ge 0\), and \(x_1\) and \(x_2\) play symmetric roles for this computation), that

The previous equation contradicts (5.13) since we just chose \(c_1<(4d^2)^{-1}\). Hence \(x_1\notin B_{\beta ,L}(x_2)\).

Moreover, one has that

where we used the fact that we are on \({\mathcal {A}}\) (see (5.10)). Furthermore, the definition of \({\mathcal {A}}\) then implies

This last inequality implies that the probability, starting from \(x_2\), to exit \(C_L\) before visiting \(x_1\) is very small. This fact implies that the probability to exit \(B_{\beta ,L}(x_2)\) through its front boundary is very small as well (see Fig. 6).

Second case: on the event \({\mathcal {A}}\cap \left\{ x_2\notin B_{\beta ,L}(x_1)\right\} \)

If \(x_2\) does not belong to \(B_{\beta ,L}(x_1)\), then it is obvious that the walker cannot visit \(x_2\) without exiting the tilted box (see Fig. 7). This means that

The walker cannot visit \(x_1\) too many times before exiting \(B_{\beta ,L}(x_1)\): indeed, the walker goes relatively easily from \(x_1\) to \(x_2\), and \(x_2\) can only be reached by exiting \(B_{\beta ,L}(x_1)\).

More precisely, recalling the definition (5.8) of \({\mathcal {N}}_{x_1}\) and using the previous equation in the third line, we see that on \({\mathcal {A}}\)

Atypical quenched exit estimates on \({\mathcal {A}}\)

By (5.14) and (5.15), we have that, on \({\mathcal {A}}\), there a.s. exists \(x\in C_L\) such that, for some positive constant \(c_3\), we have that, as soon as L is large enough,

Let us stress that the constants \(c_1\) and \(\eta \) have been fixed, so we will not emphasize them in the following computations. By using Proposition 5.3, we obtain a function \(g(\beta _0,\beta ,\zeta )\) such that

where we recall that L was defined at (5.5).

An elementary computation shows that \(g(\beta _0,\beta ,\zeta )>\beta \) for \(\beta \) close to 1, \(\beta _0\) close to 1/2 and \(\zeta >0\). Thus, for such a choice of constants, there exists \(\varepsilon '>0\) such that, for u large enough,

Conclusion

Using the inequality (5.6) and the estimates (5.7), (5.12) and (5.16), we conclude that, as soon as u is large enough,

for some \(\delta >0\). \(\square \)

Remark 5.2

Notice that, in this last proof, the only limiting factor that prevents us from obtaining moments of any order on \(\tau _1\) is (5.11), which describes the probability of reaching a certain point at a distance of order \(\log n\).

6 Zero-speed regime

In this section, let us prove Theorem 3.1. To accomplish this, we need to identify where trapping comes from.

For this, we say that a vertex \(x\in \mathbb {Z}^d\) is \(\kappa \)-elliptic if for all \(e\in U\), we have \(p^{\omega }(x,e) \in (\kappa ,1-\kappa )\). By (1.2), it is clear that there exists \(\kappa _0>0\) such that \(\mathbf{P}[x\text { is }\kappa _0\text {-elliptic}]>1/2\) for any \(x\in \mathbb {Z}^d\). To be concise, we will say that a vertex is regular if it is \(\kappa _0\)-elliptic.
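The definition of a \(\kappa \)-elliptic vertex can be checked mechanically. The sketch below tests the condition for one vertex and estimates, by Monte Carlo, the probability that a vertex is regular. The Dirichlet law of the transition probabilities, the weights and the function names are assumptions chosen for illustration; the paper only requires an elliptic i.i.d. environment.

```python
import random

def is_kappa_elliptic(probs, kappa):
    """True if every transition probability at the vertex lies in (kappa, 1 - kappa)."""
    return all(kappa < p < 1.0 - kappa for p in probs)

def sample_dirichlet(weights, rng):
    """Sample transition probabilities from a Dirichlet law by normalising
    independent gamma variables (an assumed environment law, for illustration)."""
    gammas = [rng.gammavariate(a, 1.0) for a in weights]
    total = sum(gammas)
    return [g / total for g in gammas]

def regular_fraction(weights, kappa, n_samples, rng):
    """Monte Carlo estimate of P[vertex is kappa-elliptic] under the assumed law."""
    hits = sum(
        is_kappa_elliptic(sample_dirichlet(weights, rng), kappa)
        for _ in range(n_samples)
    )
    return hits / n_samples

rng = random.Random(1)
weights = [2.0, 2.0, 2.0, 2.0]   # d = 2, one weight per direction in U (hypothetical)
estimate = regular_fraction(weights, kappa=0.01, n_samples=2000, rng=rng)
print(estimate)
```

Decreasing `kappa` can only increase the estimate, which is the mechanism behind the existence of a \(\kappa _0\) with probability greater than 1/2.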

Let us introduce the sets

and

It is plain to see that

(1) \({\mathcal {A}}\cup {\mathcal {B}}\) is connected,

(2) \({\mathcal {A}}\) contains \(\partial {\mathfrak {H}}_{de_1}\),

(3) \({\mathcal {A}}\subset {\mathcal {H}}^+(0)\) (defined at (2.1)). This can be seen easily from (1.1).

Let us introduce the event

it is clear that \(\mathbf{P}[{\mathcal {R}}]>0\).

The general idea is to investigate the probability of events such that some unit hypercube is surrounded by regular points, but transition probabilities inside the hypercube are not conditioned. Thus, on such an event, the walker moves easily around the hypercube but could get trapped in it, as the exit time of the hypercube is not conditioned and is independent of the environment outside it (see Fig. 8).
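To make the trapping mechanism concrete, the sketch below computes quenched expected exit times from a finite set by iterating the one-step relation \(t(v)=1+\sum _{w} p(v,w)\,t(w)\), where the sum runs over the vertices w of the set. The unit square in \(\mathbb {Z}^2\), the symmetric transition probabilities and the names `expected_exit_times` and `square_chain` are illustrative assumptions, not objects taken from the paper.

```python
def expected_exit_times(inside_probs, tol=1e-12, max_iter=1_000_000):
    """inside_probs[v][w] is the probability of stepping from v to w, both in the set;
    the remaining mass at v leaves the set. Iterates t <- 1 + P t from t = 0."""
    t = {v: 0.0 for v in inside_probs}
    for _ in range(max_iter):
        new_t = {
            v: 1.0 + sum(p * t[w] for w, p in nbrs.items())
            for v, nbrs in inside_probs.items()
        }
        if max(abs(new_t[v] - t[v]) for v in t) < tol:
            return new_t
        t = new_t
    raise RuntimeError("no convergence: the set behaves like a near-perfect trap")

SQUARE = [(0, 0), (1, 0), (0, 1), (1, 1)]   # unit hypercube in dimension 2

def square_chain(stay_prob):
    """Toy environment: each vertex of the square sends total mass stay_prob,
    split evenly, to its two neighbours inside the square."""
    chain = {}
    for v in SQUARE:
        nbrs = [w for w in SQUARE if sum(abs(a - b) for a, b in zip(v, w)) == 1]
        chain[v] = {w: stay_prob / len(nbrs) for w in nbrs}
    return chain

mild = expected_exit_times(square_chain(0.5))    # uniform nearest-neighbour walk
trap = expected_exit_times(square_chain(0.99))   # strongly inward-pointing vertices
print(mild[(0, 0)], trap[(0, 0)])
```

In this symmetric toy case the exit time is geometric, so the expected exit time is \(1/(1-\texttt{stay\_prob})\); it blows up as the probability of staying inside tends to 1, which is the local trapping effect described above.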

The following lemma shows that tail estimates on the exit time of hypercubes can be used to find lower bounds on regeneration times.

Lemma 6.1

Let us consider a RWRE in an elliptic i.i.d. environment. We have, for some constant \(c>0\),

Proof

We fix \(x_0\in {\mathfrak {H}}\) which realizes the maximum \(\max _{x\in {\mathfrak {H}}} \mathbb {P}_x[T^{\text {ex}}_{{\mathfrak {H}}}\ge n]\).

Let us now describe an event which slows the walk down and which can happen on \(\{0-\text {regen}\}\). On \({\mathcal {R}}\), consider the following chain of events

(1) \(X_1=e_1, X_2=2e_1,\ldots , X_{d-1}=(d-1)e_1\),

(2) from there, \(X_n\) takes the shortest path inside \({\mathcal {A}}\cup {\mathcal {B}}\) to \(de_1+x_0\); this can be done in at most C(d) steps (where C(d) depends only on d),

(3) then, the walk stays in \({\mathfrak {H}}_{de_1}\) for a time \(T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\circ \theta _{T_{de_1+x_0}} \ge n\), where \(\theta _{\cdot }\) is a shift operator,

(4) after exiting \({\mathfrak {H}}_{de_1}\), the walk has to be in \({\mathcal {A}}\). From there, the walk takes the shortest path to \(e_1\) inside \({\mathcal {A}}\cup {\mathcal {B}}\) and then the shortest path from \(e_1\) to \(de_1+2\sum _{i=1}^d e_i\) (which has never been visited) inside \({\mathcal {A}}\cup {\mathcal {B}}\). All this can be accomplished in less than C(d) steps (where C(d) depends only on d). This step ensures that \(\tau _1\) occurs after \(T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\circ \theta _{T_{de_1+x_0}}\),

(5) finally, the walk makes one step to \((d+1)e_1+2\sum _{i=1}^d e_i\), and from there never backtracks, creating a new regeneration time.
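The prescribed paths in this chain of events are cheap because every step taken from a regular vertex has quenched probability at least \(\kappa _0\), so a path of m such steps has probability at least \(\kappa _0^m\). The sketch below checks this on a toy environment. The dictionary representation of the environment, the uniform transition probabilities, the value of `kappa0` and the function name `path_probability` are assumptions made for illustration.

```python
def path_probability(env, path):
    """Quenched probability that the walk follows `path` step by step.
    env[v][e] is the transition probability from vertex v in direction e."""
    prob = 1.0
    for here, there in zip(path, path[1:]):
        step = tuple(b - a for a, b in zip(here, there))
        prob *= env[here][step]
    return prob

kappa0 = 0.2   # hypothetical ellipticity constant
uniform = {(1, 0): 0.25, (-1, 0): 0.25, (0, 1): 0.25, (0, -1): 0.25}
path = [(0, 0), (1, 0), (2, 0), (2, 1)]
env = {v: uniform for v in path}   # every vertex on the path is kappa0-elliptic

p = path_probability(env, path)
n_steps = len(path) - 1
print(p, kappa0 ** n_steps)
```

Since the number of steps in items (2) and (4) is bounded by a constant depending only on d, the resulting cost is a constant power of \(\kappa _0\), independent of n.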

Let us denote by \(F_n\) the chain of events described above (in (1), (2), (3), (4), (5)). We can see that on \(F_n\), we have

(1) \(D=\infty \),

(2) \(\tau _1\ge T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\circ \theta _{T_{de_1+x_0}} +T_{de_1+x_0}\ge n\).

This implies that

Now, we want to give a lower bound on \(P^{\omega }[F_n]\) on the event \({\mathcal {R}}\). This can be done by applying the strong Markov property several times, at the times \(d-1\), \(T_{de_1+x_0}\), \(T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\circ \theta _{T_{de_1+x_0}}+T_{de_1+x_0}\) and \(T_{de_1+2\sum _{i=1}^d e_i}\). This leads us to bound from below the five terms described above.

(1) On \({\mathcal {R}}\), we have that

$$\begin{aligned} P_0^{\omega }[X_1=e_1, X_2=2e_1,\ldots , X_{d-1}=(d-1)e_1] \ge \kappa _0^{d-1}. \end{aligned}$$

(2) Let us denote by \(C_1\) the event that the walk takes the shortest path from \((d-1)e_1\) to \(x_0+de_1\) inside \({\mathcal {A}}\cup {\mathcal {B}}\). On \({\mathcal {R}}\), we have that

$$\begin{aligned} P_{(d-1)e_1}^{\omega }[C_1] \ge \kappa _0^{C(d)}. \end{aligned}$$

(3) After applying the Markov property, the third term becomes \(P_{de_1+x_0}^{\omega }[T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\ge n]\).

(4) Let us denote by \(C_2\) the event that the walk takes the shortest path to \(e_1\) inside \({\mathcal {A}}\cup {\mathcal {B}}\) and then the shortest path from \(e_1\) to \(de_1+2\sum _{i=1}^d e_i\) inside \({\mathcal {A}}\cup {\mathcal {B}}\). It is easy to see, on \({\mathcal {R}}\), that

$$\begin{aligned} \min _{y\in \partial {\mathfrak {H}}} P_y^{\omega }[C_2]\ge \kappa _0^{C(d)}. \end{aligned}$$

(5) Finally, we see that, on \({\mathcal {R}}\),

$$\begin{aligned}&P_{ de_1+2\sum _{i=1}^d e_i}^{\omega }\left[ X_1=(d+1)e_1+2\sum _{i=1}^d e_i,\ D\circ \theta _{1}=\infty \right] \\&\quad \ge \kappa _0 P_{(d+1)e_1+2\sum _{i=1}^d e_i}^{\omega }[D=\infty ]. \end{aligned}$$
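As a bookkeeping sketch (our own arithmetic on the five bounds above, not a formula quoted from the source, and treating the two constants C(d) in items (2) and (4) as the same symbol), multiplying the bounds in the order in which the strong Markov property is applied suggests that, on \({\mathcal {R}}\),

$$\begin{aligned} P_0^{\omega }[F_n]&\ge \kappa _0^{d-1}\cdot \kappa _0^{C(d)}\cdot P_{de_1+x_0}^{\omega }\left[ T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\ge n\right] \cdot \kappa _0^{C(d)}\cdot \kappa _0 P_{(d+1)e_1+2\sum _{i=1}^d e_i}^{\omega }[D=\infty ] \\&= \kappa _0^{d+2C(d)}\, P_{de_1+x_0}^{\omega }\left[ T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\ge n\right] P_{(d+1)e_1+2\sum _{i=1}^d e_i}^{\omega }[D=\infty ]. \end{aligned}$$

The only factors that depend on n or on the environment inside the hypercube are the exit-time probability and the no-backtracking probability; everything else is a constant power of \(\kappa _0\).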

As mentioned, those estimates combined with the strong Markov property imply that on \({\mathcal {R}}\), we have

This estimate combined with (6.2) implies that

Note that by independence of the transition probabilities, the random variables \(P_{de_1+x_0}^{\omega }[T^{\text {ex}}_{{\mathfrak {H}}_{de_1}}\ge n]\), \({\mathbf 1}{\{{\mathcal {R}}\}}\) and \(P_{(d+1)e_1+2\sum _{i=1}^d e_i}^{\omega }[D=\infty ]\) are all \(\mathbf{P}\)-independent. This means that

where we used translation invariance and the fact that \(\mathbf{P}[{\mathcal {R}}]>0\) in the last line. The result follows from the definition of \(x_0\) and (2.2). \(\square \)

Let us now prove Theorem 3.1.

Proof of Theorem 3.1

It is easy to see from Lemma 6.1 that a RWRE in an elliptic i.i.d. environment verifies

This means that for a walk verifying (E) and \((H)_{\alpha }\) for some \(\alpha >0\) we have

Theorem 3.1 follows from the previous equation and Theorem 2.2. \(\square \)
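The passage from a tail estimate to an expectation in this type of argument typically rests on the tail-sum formula. As a reminder, for generic nonnegative integer-valued random variables T and \(\tau \) (placeholder symbols, not the paper's notation) and a constant \(c>0\),

$$\begin{aligned} E[T]=\sum _{n\ge 1} P[T\ge n], \qquad \text {so that}\qquad P[\tau \ge n]\ge c\, P[T\ge n] \ \text { for all } n\ge 1 \ \text { implies }\ E[\tau ]\ge c\, E[T]. \end{aligned}$$

In particular, an infinite expected exit time on the right-hand side forces an infinite expectation on the left-hand side.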

References

Berger, N., Drewitz, A., Ramírez, A.: Effective polynomial ballisticity condition for random walk in random environment. Commun. Pure Appl. Math. arXiv:1206.6377 (accepted for publication) (2013)

Berger, N., Zeitouni, O.: A quenched invariance principle for certain ballistic random walks in i.i.d. environments. In: In and Out of Equilibrium 2, Progress in Probability, vol. 60, pp. 137–160. Birkhäuser (2008)

Bouchet, E.: Sub-ballistic random walk in Dirichlet environment. Electron. J. Probab. 18(58), 1–25 (2013)

Bouchet, E., Ramírez, A., Sabot, C.: Sharp ellipticity conditions for ballistic behavior of random walks in random environment. arXiv:1310.6281 (2013)

Bouchet, E., Sabot, C., Soares Dos Santos, R.: A quenched functional central limit theorem for random walks in random environments under \((T)_\gamma \). arXiv:1409.5528 (2014)

Campos, D., Ramírez, A.: Ellipticity criteria for ballistic behavior of random walks in random environment. Probab. Theory Relat. Fields. arXiv:1212.4020 (accepted for publication) (2013)

Drewitz, A., Ramírez, A.: Ballisticity conditions for random walks in random environment. Probab. Theory Relat. Fields 150(1–2), 61–75 (2011)

Drewitz, A., Ramírez, A.: Quenched exit estimates and ballisticity conditions for higher dimensional random walk in random environment. Ann. Probab. 40(2), 459–534 (2012)

Enriquez, N., Sabot, C., Zindy, O.: Limit laws for transient random walks in random environment on \({\mathbb{Z}}\). Ann. Inst. Fourier (Grenoble) 59(6), 2469–2508 (2009)

Fribergh, A.: Biased random walk in positive random conductances on \({\mathbb{Z}}^d\). Ann. Probab. 41(6), 3910–3972 (2013)

Rassoul-Agha, F., Seppäläinen, T.: Almost sure functional central limit theorem for ballistic random walk in random environment. Ann. Inst. Henri Poincaré Probab. Stat. 45(2), 373–420 (2009)

Sabot, C.: Random walks in random Dirichlet environment are transient in dimension \(d\ge 3\). Probab. Theory Relat. Fields 151, 297–317 (2011)

Sabot, C.: Random Dirichlet environment viewed from the particle in dimension \(d\ge 3\). Ann. Probab. 41(2), 722–743 (2013)

Sabot, C., Tournier, L.: Reversed Dirichlet environment and directional transience of random walks in Dirichlet random environment. Ann. Inst. Henri Poincaré Probab. Stat. 47(1), 1–8 (2011)

Simenhaus, F.: Asymptotic direction for random walks in random environment. Ann. Inst. Henri Poincaré Probab. Stat. 43(6), 751–761 (2007)

Solomon, F.: Random walks in a random environment. Ann. Probab. 3, 1–31 (1975)

Sznitman, A.-S.: Slowdown estimates and central limit theorem for random walks in random environment. J. Eur. Math. Soc. (JEMS) 2(2), 93–143 (2000)

Sznitman, A.-S.: On a class of transient random walks in random environment. Ann. Probab. 29(2), 724–765 (2001)

Sznitman, A.-S.: An effective criterion for ballistic behavior of random walks in random environment. Probab. Theory Relat. Fields 122, 509–544 (2002)

Sznitman, A.-S.: Topics in random walks in random environment. School and Conference on Probability Theory, ICTP Lecture Notes Series, Trieste, vol. 17, pp. 203–266 (2004)

Sznitman, A.-S., Zerner, M.: A law of large numbers for random walks in random environment. Ann. Probab. 27(4), 1851–1869 (1999)

Zerner, M.: A non-ballistic law of large numbers for random walks in i.i.d. random environment. Electron. Commun. Probab. 7, 191–197 (2002)

Zeitouni, O.: Random Walks in Random Environment, XXXI summer school in probability, St Flour (2001), Lecture Notes in Mathematics, vol. 1837, pp. 193–312. Springer, Berlin (2004)

Acknowledgments

We would like to thank Alejandro Ramírez for useful discussions. The authors are also grateful to the Université de Toulouse, to which they were both affiliated at the time when this work was done.
