Abstract

Objectives

To assess whether a computer-aided detection (CADe) system could serve as a learning tool for radiology residents in chest X-ray (CXR) interpretation.

Methods

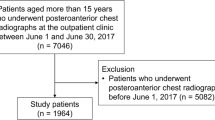

Eight radiology residents were asked to interpret 500 CXRs for the detection of five abnormalities: pneumothorax, pleural effusion, alveolar syndrome, lung nodule, and mediastinal mass. After interpreting an initial 150 CXRs, the residents were divided into two groups of equivalent performance and experience. Group 1 then interpreted 200 CXRs from the “intervention dataset” using a CADe system as a second reader, while group 2 served as a control, interpreting the same CXRs without CADe. Finally, both groups interpreted another 150 CXRs without CADe. Sensitivity, specificity, and accuracy were compared before, during, and after the intervention.
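The abstract does not spell out how the three metrics are computed, so the formulas below are the conventional definitions in terms of true positives (TP), false negatives (FN), true negatives (TN), and false positives (FP), offered as a reading aid rather than as the authors' stated method. The numbers plugged in are the group 1 counts during the intervention, taken from the Results, assuming (as their sums suggest) that 816 is the number of positive reads and 3428 the number of negative reads. The worked values show that the accuracy fraction is simply the sum of the sensitivity and specificity fractions:

```latex
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN} = \frac{431}{816} \approx 0.53,\\
\text{Specificity} &= \frac{TN}{TN + FP} = \frac{3206}{3428} \approx 0.94,\\
\text{Accuracy} &= \frac{TP + TN}{TP + FN + TN + FP} = \frac{431 + 3206}{816 + 3428} = \frac{3637}{4244} \approx 0.86.
\end{aligned}
```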

Results

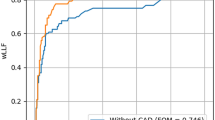

Before the intervention, the median individual sensitivity, specificity, and accuracy of the eight radiology residents were 43% (range: 35–57%), 90% (range: 82–96%), and 81% (range: 76–84%), respectively. With CADe, residents in group 1 had a significantly higher overall sensitivity (53% [n = 431/816] vs 43% [n = 349/816], p < 0.001), specificity (94% [n = 3206/3428] vs 90% [n = 3127/3477], p < 0.001), and accuracy (86% [n = 3637/4244] vs 81% [n = 3476/4293], p < 0.001) than the control group. After the intervention, there were no significant differences between group 1 and group 2 in overall sensitivity (44% [n = 309/696] vs 46% [n = 317/696], p = 0.666), specificity (90% [n = 2294/2541] vs 90% [n = 2285/2542], p = 0.642), or accuracy (80% [n = 2603/3237] vs 80% [n = 2602/3238], p = 0.955).
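The abstract reports p-values without naming the statistical test. As a rough consistency check, the sketch below recomputes the group percentages from the reported counts and applies a pooled two-proportion z-test, which is equivalent to a 2×2 chi-square test without continuity correction. The choice of test is an assumption, not the authors' stated method, and it treats every read as independent even though reads are clustered within residents and within CXRs. The names `two_proportion_z_test`, `COMPARISONS`, and `summarize` are hypothetical, introduced only for this illustration.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-sided z-test for H0: p1 == p2, without continuity correction.

    Assumed test for illustration only. Returns (z, p_value); z**2 equals the
    uncorrected 2x2 chi-square statistic.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# (successes, total) for group 1 vs group 2, copied from the Results paragraph
COMPARISONS = {
    "during: sensitivity": ((431, 816), (349, 816)),
    "during: specificity": ((3206, 3428), (3127, 3477)),
    "during: accuracy": ((3637, 4244), (3476, 4293)),
    "after: sensitivity": ((309, 696), (317, 696)),
    "after: specificity": ((2294, 2541), (2285, 2542)),
    "after: accuracy": ((2603, 3237), (2602, 3238)),
}


def summarize() -> dict[str, tuple[int, int, float]]:
    """Return (group 1 %, group 2 %, p-value) for each comparison."""
    out = {}
    for label, ((x1, n1), (x2, n2)) in COMPARISONS.items():
        _, p = two_proportion_z_test(x1, n1, x2, n2)
        out[label] = (round(100 * x1 / n1), round(100 * x2 / n2), p)
    return out


if __name__ == "__main__":
    for label, (pct1, pct2, p) in summarize().items():
        print(f"{label:22s} {pct1}% vs {pct2}%  p = {p:.3g}")
```

Run as a script, it prints one line per comparison. The rounded percentages match those in the abstract, and the p-values fall on the same side of 0.05 as the reported ones, which suggests (but does not confirm) that an uncorrected test of this kind was used.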

Conclusions

Although a CADe system improved radiology residents’ performance in interpreting CXRs, it did not appear to be an effective learning tool on its own and should not replace teaching.

Clinical relevance statement

Although the use of artificial intelligence improves radiology residents’ performance in chest X-ray interpretation, artificial intelligence cannot be used alone as a learning tool and should not replace dedicated teaching.

Key Points

• With CADe as a second reader, residents had a significantly higher sensitivity (53% vs 43%, p < 0.001), specificity (94% vs 90%, p < 0.001), and accuracy (86% vs 81%, p < 0.001), compared to residents without CADe.

• After access to the CADe system was removed, residents’ sensitivity (44% vs 46%, p = 0.666), specificity (90% vs 90%, p = 0.642), and accuracy (80% vs 80%, p = 0.955) returned to the level of the group without CADe.

Abbreviations

- AI: Artificial intelligence
- CADe: Computer-aided detection
- CT: Computed tomography
- CXR: Chest X-rays
- DL: Deep learning
- FN: False negative
- FP: False positive
- TN: True negative
- TP: True positive

Funding

The authors state that this work has not received any funding.

Ethics declarations

Guarantor

The scientific guarantor of this publication is GC.

Conflict of interest

GC was occasionally paid by Gleamer to label radiographs. Additionally, GC is a member of the European Radiology Scientific Editorial Board and has therefore not taken part in the review or selection process of this article. JV works for Gleamer as a consultant. NER works for Gleamer as a co-founder. SB works for Gleamer as a consultant. The other authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

JG has significant statistical expertise.

Informed consent

Written informed consent was waived by the Institutional Review Board.

Ethical approval

This study was approved by our local ethics committee (decision n°AAA-2021–08054).

Methodology

• retrospective

• diagnostic

• multicenter study

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chassagnon, G., Billet, N., Rutten, C. et al. Learning from the machine: AI assistance is not an effective learning tool for resident education in chest x-ray interpretation. Eur Radiol 33, 8241–8250 (2023). https://doi.org/10.1007/s00330-023-10043-1

DOI: https://doi.org/10.1007/s00330-023-10043-1