Abstract

The experimental research described in this manuscript proposes a complete network system for distributed multimedia acquisition by mobile remote nodes, streaming to a central unit, and centralized real-time processing of the collected signals. Particular attention is paid to the hardware structure of the system and to the search for the best network performance for efficient and secure streaming. Specifically, the acoustic and video sensors, microphone arrays and video cameras respectively, can be employed in any robotic vehicle or system, both mobile and fixed. The main objective is to intercept unidentified sources, such as vehicles, robotic vehicles, drones, or people, whose identity is not known a priori and whose instantaneous location and trajectory are also unknown. The proposed multimedia network infrastructure is analysed in terms of efficiency and robustness, and field experiments are conducted to validate it. The hardware and software components of the system were developed using suitable technologies and multimedia transmission protocols to meet the requirements and constraints of computational performance, energy efficiency, and data transmission security.

1 Introduction

The use of multimedia IoT (Internet of Things) sensors, such as microphone arrays and cameras, in operational conditions is growing rapidly in a wide range of civil applications: monitoring, identifying and classifying visual and acoustic events; search and rescue missions, even in hostile environments; surveillance of environments, urban places, people, objects, or vehicles; and monitoring of disaster areas. Depending on the specific application, appropriate acquisition sensors are employed. Moreover, these sensors can be used in fixed indoor and outdoor installations, to enhance the sensing capabilities of vehicles and robots, and for educational purposes.

Recently, multimedia data streaming architectures have been investigated in multiple node scenarios. In [18], the authors identify the main building blocks of a multi-node system as sensing, communication, and coordination modules as part of a networked platform.

An experimental analysis of multipoint-to-point communications with IEEE 802.11n and 802.11ac is presented in [3], where throughput results and transmission range performance are analysed. The 802.11 standard is also suggested by the authors in [17], where it is used in particular for one-hop wireless transmissions in centralized networks with small sensor nodes that transmit environmental sensing data to a remote destination station. However, to the best of our knowledge, a complete streaming architecture, from acquisition to transmission and reception of data with multiple remote nodes and multiple data flows from different sensors, has not yet been presented and validated in detail in the literature for practical, real-life applications.

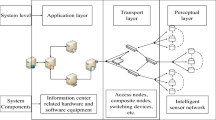

In this work, the wireless sensor system consists of a network of distributed heterogeneous multimedia nodes for audiovisual data acquisition. It is managed by the proposed streaming and communication infrastructure, which handles both the transmission of the data collected by the remote nodes towards the central unit, or control station (CS), and the exchange of the control commands needed to establish the connections between the remote sensors and the central unit in the network.

The major feature of the proposed network architecture is multi-stream transmission, by which multiple multimedia data streams from different sensors and from different nodes can be sent to the control station simultaneously. These streams share the same streaming and communication infrastructure, under real-time constraints and with limited information loss.

The multi-stream data received by the main station can be analyzed in real time with high computational resources, in a similar way to the Service Model developed and described in [5]. Only small-size multimedia IoT sensors have been used in this work, in order to focus on IoT and embedded system applications, where strong optimization is required in terms of physical size and of the management of computational and energy resources.

The proposed architecture is mainly conceived for security applications, as introduced in [13, 14]. It can be employed in acoustic source localization (ASL) [10, 15], multiple acoustic source localization in noisy outdoor environments for audio surveillance and scene analysis using acoustic direction of arrival (DoA) estimation in far-field conditions [11], and image analysis for multi-class object recognition, identification, and continuous real-time tracking [6]. The distributed wireless video sensors can also be used for automatic security-oriented event analysis and recognition [9] and person reidentification [7], for example when placed in an urban environment. The audio and video sensors can also be used jointly, matching the audio signatures and identifications to the video ones in order to improve the detection and recognition of the entities.

In addition to the sensor and data streaming architecture, a software component has been implemented for reading the multimedia streams and handling their transmission and reception. The overall network architecture and each of its parts are described throughout the sections of the manuscript. Several tests were conducted with different configurations, in order to find the most reliable one that guaranteed a multi-node, multiple-stream configuration with the highest network performance.

To summarize, this manuscript mainly contributes to the development and analysis of the hardware and software design of a distributed system with multiple heterogeneous sensor nodes. It introduces an advancement in the architecture capability of simultaneous acquisition from the acoustic and video scene and real-time wireless transmission of multiple data streams from the remote sensor nodes to a centralized control station for signal processing with high-complexity algorithms.

The remainder of the manuscript is organized as follows. The multi-node streaming system architecture is presented in Section 2, with a detailed description of each component and of the whole architecture. The experiments and the performance analysis are discussed in Section 3, where the outcome of this work is highlighted. Finally, Section 4 concludes the manuscript with an outlook on future directions.

2 System architecture

In this section, the architecture of the computing, sensing and transmission systems for the acquisition, delivery and processing of the data collected by the distributed sensors is described in detail.

In the proposed system macro-structure, all the distributed nodes are connected to the CS by sharing the same sub-network. The CS sends commands for the management of the multimedia data flows, controls the remote Single Board Computers (SBCs) via SSH, monitors the network traffic and the RF parameters, runs complex algorithms for signal processing, analysis and classification, and manages the Wireless Access Point (WAP) that interconnects all the wireless nodes. The system employs a cluster of heterogeneous sensors whose task is to collect multimedia data in different scenarios and to transmit it to the CS for de-localized signal processing. In the literature, a similar infrastructure is described in [1], where the authors developed a multi-sensor data acquisition system for person detection. Their multimedia devices and computing platform were similar to those of our system but, unlike their work, we used more compact acquisition devices and a more organized system setup. The whole multi-node configuration is managed and coordinated by the CS, which is connected to all the nodes via a shared wireless infrastructure.

Three different sensing platforms have been developed and equipped with different sensors and computing engines, whose characteristics were chosen according to the tasks and the complexity of the data collected. The system is composed as follows: one spherical microphone array from Zylia [19], two compact multimedia interfaces (PSEye peripherals from Sony), and one custom sensing device with A/V capabilities, shaped as a round plate and mounting a circular microphone array and a video camera. They are described in detail in Section 2.1.

The architecture is orchestrated by a set of acquisition, streaming and processing algorithms written in Python, so that devices and data flows can be managed quickly and dynamically, with compatibility across all computing platforms.

2.1 The heterogeneous multimedia sensing system

In this subsection, the multimedia sensor architecture is described. Each sensing platform is composed of a heterogeneous sensing device that collects data from the surrounding environment and allows the necessary acoustic and visual information to be extracted. We have implemented three different acquisition platforms, developed to be used in specific environments, robotic entities and vehicles.

The Plate sensors module (Fig. 1a) is equipped, at the bottom, with a circular plastic frame holding a microphone array of four Semitron seMODADMP441 microphone modules, each hosting a pair of digital MEMS microphones in stereo configuration, forming an 8-channel circular microphone array. The MEMS microphones have a flat frequency response from 60 Hz to 15 kHz and are connected to a MiniDSP USBStreamer I2S-to-USB audio acquisition interface [10]. At the centre of the plate, a Raspberry Camera is installed in order to capture video and images. A Raspberry Pi 4 is mounted on top of the plate and provides the computing capabilities for data acquisition, onboard processing, and transmission. The system is powered by a dedicated LiPo battery pack. The SBC captures uncompressed multichannel PCM audio (with a sample rate of 48 kHz and a resolution of 16 bit) and the video feed in standard definition (SD), and transmits both streams to the CS.

The Zylia sensor (Fig. 1b) is a USB spherical array with 19 omnidirectional digital MEMS microphone capsules distributed on a sphere with a radius of 4.5 cm. It is able to capture the whole surrounding acoustic field in 3D with a sample rate of 48 kHz and a resolution of 24 bit [14]. It is connected to and managed by an Intel-based SBC: a Mini PC Stick NV41S with Windows 10 Pro.

The two PSEye peripherals (Fig. 1c) are small devices composed of a linear microphone array of 4 equidistant capsules, with a bandwidth of 8 kHz, a sample rate of 16 kHz and a resolution of 16 bit per sample, and an SD camera. They are controlled by a Raspberry Pi 3B.

The audio feed from each platform is sent to the CS uncompressed. The video frames, on the other hand, are compressed frame by frame in JPEG, which is suitable for an embedded system because it is not computationally demanding.
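Since the audio is sent uncompressed, the payload rate of each audio stream follows directly from the formats stated above (channels × sample rate × bits per sample). The following minimal sketch works through that arithmetic; the function and dictionary names are illustrative and not taken from the authors' software, and the figures cover the audio payload only, excluding the JPEG video frames and any protocol overhead.

```python
def pcm_bitrate_mbps(channels, sample_rate_hz, bits_per_sample):
    """Raw payload rate of an uncompressed PCM stream, in Mbit/s."""
    return channels * sample_rate_hz * bits_per_sample / 1e6


# Audio formats as stated in this section:
# (channels, sample rate in Hz, bits per sample).
PLATFORMS = {
    "Plate": (8, 48_000, 16),
    "Zylia": (19, 48_000, 24),
    "PSEye": (4, 16_000, 16),
}

AUDIO_RATES = {name: pcm_bitrate_mbps(*fmt) for name, fmt in PLATFORMS.items()}

for name, rate in AUDIO_RATES.items():
    print(f"{name}: {rate:.3f} Mbit/s of uncompressed audio")
```

Under these formats the Plate array produces about 6.1 Mbit/s, the Zylia array about 21.9 Mbit/s, and each PSEye about 1.0 Mbit/s of audio payload.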

2.2 Network architecture

One of the fundamental design criteria for the multimedia data streaming infrastructure was that the transmission system had to be encrypted, digital and robust, and had to operate in a free and accessible radio spectrum, coexisting with other radio systems without disturbing the surrounding devices. It was also necessary to maintain sufficient bandwidth for multiple multimedia streams, plus bandwidth reserved for the connection-oriented protocol to retransmit packets that were corrupted or missing.

According to García et al. [2], there are several wireless technologies for data transmission in the IoT field; for our project it was therefore necessary to identify the one that could support multimedia streaming. The authors made an accurate analysis of the main wireless transmission technologies, and the reasons for adopting or discarding each of them in our application are given below. Zigbee and LoRa were considered not ideal for multimedia transmission because of their low datarate and because their transmission is subject to regulatory restrictions. WiMax was not considered because it relies on an infrastructure managed by a provider and has no general coverage, especially in ad-hoc areas; it can also have difficulties with high-definition multimedia traffic. Analog UHF transmissions were discarded because they are not immune to interference and do not have encryption at the MAC level. Mobile communications, despite their high bandwidth and datarate, were not considered suitable because they require an external provider and a secure data transmission service over a VPN or in the cloud. mmWave communications are very limited in signal strength, and therefore in distance and coverage, and also involve high hardware costs and several transmission restrictions. Bluetooth was discarded because it is a technology suited to PANs.

After this analysis, our choice fell on Wi-Fi, which is a fair compromise for establishing highly reliable connections among nodes in an unlicensed frequency spectrum, and also in terms of signal range, bandwidth, availability of frequencies and hardware cost. We used and analyzed Wi-Fi under specific protocols and transmission conditions. The performance of Wi-Fi IEEE 802.11ac and Wi-Fi IEEE 802.11n with multimedia streaming at different distances is compared by Kaewkiriya [4], who measured throughput and streaming rate, and by Newell et al. [8], who studied the theoretical and practical performance of the two standards using an approach similar to ours. In our work we reached better results, which are described later.

The system we developed has a centralized data processing station, de-localized with respect to the sensing nodes. All the data collected by the sensors is transmitted in real time to the powerful control station and processed there with complex and advanced algorithms.

An experimental bi-directional network infrastructure has been designed and developed to manage and stream all the data collected by the sensing platforms and to carry signalling and control messages for the SBCs and for flow control. The network infrastructure relies on a high-performing, encrypted 5 GHz Wi-Fi connection that links all the nodes to each other, for cooperative communications, and to the CS, in the same subnet. The wireless connection is managed by a TP-Link EAP-225-outdoor, a wireless Multi-User Multiple-Input Multiple-Output (MU-MIMO) Gigabit indoor/outdoor Access Point with dual-band Wi-Fi based on the 802.11ac standard (Fig. 2b). It operates in the C band (5250 MHz to 5850 MHz), with a bandwidth of up to 80 MHz. Although this band is more affected by oxygen absorption, scattering and reflections, it allows narrower beams and a higher bandwidth, and it is more immune to interference. As shown in Fig. 2a, the AP is connected to the CS via a shielded Gigabit Ethernet interface. The datarate for 802.11ac ranges from 6.5 Mbps to 867 Mbps [16].
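The two datarate endpoints quoted from [16] are consistent with the usual 802.11ac (VHT) physical-layer rate formula: data subcarriers × bits per subcarrier × coding rate × spatial streams, divided by the OFDM symbol duration. The sketch below is an illustration under stated assumptions rather than a description of the access point's internals: the subcarrier counts (52 for 20 MHz, 234 for 80 MHz), the symbol durations (4.0 µs with long guard interval, 3.6 µs with short guard interval) and the choice of two spatial streams for the upper endpoint are standard 802.11ac values supplied here, not figures from the manuscript.

```python
def vht_phy_rate_mbps(data_subcarriers, bits_per_subcarrier, coding_rate,
                      spatial_streams, short_gi):
    """Nominal 802.11ac PHY rate in Mbit/s (bits per microsecond)."""
    # OFDM symbol: 3.2 us of data plus 0.8 us (long) or 0.4 us (short) guard.
    symbol_us = 3.6 if short_gi else 4.0
    bits_per_symbol = (data_subcarriers * bits_per_subcarrier
                       * coding_rate * spatial_streams)
    return bits_per_symbol / symbol_us


# Assumed lower endpoint: 20 MHz, BPSK rate 1/2, one stream, long guard interval.
LOWEST = vht_phy_rate_mbps(52, 1, 1 / 2, 1, short_gi=False)

# Assumed upper endpoint: 80 MHz, 256-QAM rate 5/6, two streams, short guard interval.
HIGHEST = vht_phy_rate_mbps(234, 8, 5 / 6, 2, short_gi=True)

print(f"lowest:  {LOWEST:.1f} Mbit/s")
print(f"highest: {HIGHEST:.1f} Mbit/s")
```

These are nominal link rates; the throughput available to an application over TCP is lower because of MAC and protocol overhead.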

The software and the protocols for network management were developed in Python using Python sockets, which led to efficient, low-level connection management. This brings several advantages: (i) the communication is very fast, since there is no large overhead due to further protocols; (ii) communication management is minimal and adapts very well to embedded systems with low computational resources; (iii) sockets permit complete customization of the network flow. Progressive TCP streaming, without adaptive encoding or bandwidth adaptation, was used. Being an IoT-based distributed system with compact and small devices, our architecture focuses on low-cost systems that are not demanding in computational resources. For this reason, it was necessary to send multimedia data at the highest quality, without the degradation and delay due to compression. Although TCP is not frequently used for multimedia streaming, reliable packet delivery was needed, and this protocol was a good compromise in terms of latency and network performance. As claimed by Taha et al. in [12], relying on a full-duplex connection and having full control of the protocol made it possible to use TCP for our highly reliable real-time multimedia application. This made it possible to recover lost or degraded datagrams [5, 13, 14], avoiding, for example, glitches and scratches in the received audio and dropped frames in the video feed.
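The manuscript does not give the wire format used over the TCP sockets. As a hedged illustration of how several audio and video streams can share one TCP connection, the sketch below uses a simple length-prefixed framing: each chunk is preceded by a 1-byte stream identifier and a 4-byte payload length. The header layout, the stream identifiers and the function names are hypothetical choices for this example, not the authors' protocol.

```python
import socket
import struct

# Hypothetical header: stream id (1 byte) + payload length (4 bytes),
# both in network byte order. This layout is an assumption of the sketch.
HEADER = struct.Struct("!BI")
AUDIO_STREAM = 1  # hypothetical identifier for PCM audio chunks
VIDEO_STREAM = 2  # hypothetical identifier for JPEG video frames


def send_chunk(sock, stream_id, payload):
    """Send one framed chunk; sendall blocks until every byte is queued."""
    sock.sendall(HEADER.pack(stream_id, len(payload)) + payload)


def _recv_exact(sock, n):
    """Read exactly n bytes, since TCP may deliver a message in pieces."""
    buf = bytearray()
    while len(buf) < n:
        part = sock.recv(n - len(buf))
        if not part:
            raise ConnectionError("peer closed the connection mid-message")
        buf.extend(part)
    return bytes(buf)


def recv_chunk(sock):
    """Receive one framed chunk and return (stream_id, payload)."""
    stream_id, length = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return stream_id, _recv_exact(sock, length)


if __name__ == "__main__":
    # Local demonstration with a connected socket pair instead of a Wi-Fi link.
    node, station = socket.socketpair()
    send_chunk(node, AUDIO_STREAM, b"\x00\x01" * 480)
    send_chunk(node, VIDEO_STREAM, b"placeholder-jpeg-bytes")
    for _ in range(2):
        stream_id, payload = recv_chunk(station)
        print(stream_id, len(payload))
    node.close()
    station.close()
```

Because TCP is a byte stream without message boundaries, the explicit length field is what lets the receiver separate one audio block or video frame from the next; retransmission of lost segments is handled by TCP itself.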

3 Transmission scenarios

Different configurations of the transmission infrastructure have been investigated, and the corresponding network and bandwidth performance for each distributed node is reported in this section. The development of the transmission infrastructure is described, from a single-node configuration to a multi-node one, taking into account the signal transmission and reception quality and the tx/rx antennas. The aim was to find the network architecture that guarantees the necessary high-quality performance in a multi-stream, multi-node scenario. The tests were performed outdoors, on a rugby field situated outside our laboratory, and consisted of RSSI and channel bandwidth measurements, as a function of the distance of the SBC from the CS antenna, during multimedia streaming. We started with a single-node configuration with omnidirectional antennas and progressed to a multi-node setup with a high-performing sectorial antenna.

3.1 Tests with a single node and omnidirectional antennas

The purpose of the preliminary tests was to verify the actual suitability of the network infrastructure for receiving multimedia streams (multi-channel audio and video feeds) while preserving a high-bandwidth channel over long distances. The received signal strength indication (RSSI) and the bandwidth at the SBC side were measured. In this experiment, the SBC Raspberry Pi 4 with an embedded Wi-Fi antenna and a PSEye peripheral were used. The remote node was connected to the CS through the custom Wi-Fi network managed by the EAP-225, as shown in Fig. 2a. The network shown in the figure is composed of the AP, powered by a PoE injector; an optional switch, used to connect one or more computers for flow management and reception, or other access points to increase the coverage of the system; and the CS, which receives the multimedia streams. Two 5dB standard omnidirectional antennas were mounted on the AP (Fig. 2b), with 2 × 4 dBi at 5 GHz. The radiation patterns, taken from the EAP-225’s datasheet and relative to the 5.25 GHz frequency, are reported in Fig. 2c. The figure shows the 2D azimuth diagram for two different elevation angles (horizontal and vertical planes), the 2D elevation diagram for three azimuth angles, and the mapped 3D representation of the whole antenna radiation pattern. The graphs make evident the omnidirectionality of the pattern given by the combination of the two antennas.

Four audio channels and an SD video feed were transmitted to the CS (Fig. 3a) from the Raspberry Pi 4 along the rugby field. The RF parameters were sampled during the multimedia streaming at pre-defined distances, referred to here as checkpoints and shown in Fig. 3b. The WAP with its antennas was placed outside the laboratory, facing the field.

The radio channel parameters were measured at each checkpoint by the SBC and transmitted via SSH to the CS. The iwconfig command and the Wavemon package were used to extract and monitor the RF link parameters. The incoming traffic was also captured at the CS with Wireshark, to check the datarate fluctuations and to verify the number of lost and retransmitted packets. In Fig. 4, the RF parameters captured at each checkpoint are plotted. The measurements were carried out in two ways: LOW, in which the SBC is about 1.5 m above the ground, and HIGH, in which the SBC is about 3 m above the ground. The values were acquired for about one minute with a 10-second sampling interval. The average was then calculated and the resulting values are reported in the graphs.
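The per-checkpoint procedure described above (sample the link parameters every 10 seconds for about a minute, then average) can be sketched as follows. The sketch parses text in the layout commonly printed by iwconfig; the sample text, interface name and helper names are illustrative only and are not measurements or code from the manuscript. The exact field layout can vary between drivers, and taking a plain arithmetic mean of the dBm readings is an assumption, since the manuscript does not state how the average was computed.

```python
import re
from statistics import mean

# Patterns for the "Bit Rate" and "Signal level" fields of iwconfig output.
_RATE = re.compile(r"Bit Rate[=:]\s*([\d.]+)\s*([kMG])b/s")
_SIGNAL = re.compile(r"Signal level[=:]\s*(-?\d+)\s*dBm")
_SCALE_TO_MBPS = {"k": 1e-3, "M": 1.0, "G": 1e3}


def parse_iwconfig(text):
    """Return (bit rate in Mbit/s, signal level in dBm) from iwconfig text."""
    rate = _RATE.search(text)
    signal = _SIGNAL.search(text)
    if rate is None or signal is None:
        raise ValueError("no link information found in iwconfig output")
    mbps = float(rate.group(1)) * _SCALE_TO_MBPS[rate.group(2)]
    return mbps, int(signal.group(1))


def checkpoint_average(samples):
    """Average the readings collected at one checkpoint."""
    rates, levels = zip(*(parse_iwconfig(s) for s in samples))
    return mean(rates), mean(levels)


# Illustrative text only, not a reading from the experiments.
SAMPLE = """wlan0     IEEE 802.11  ESSID:"example"
          Mode:Managed  Frequency:5.26 GHz  Access Point: 00:11:22:33:44:55
          Bit Rate=60 Mb/s   Tx-Power=31 dBm
          Link Quality=40/70  Signal level=-70 dBm
"""

print(parse_iwconfig(SAMPLE))
```

On the SBC, each sample would be obtained by running iwconfig on the wireless interface (for example through `subprocess.run`) at the chosen interval, and the collected texts passed to `checkpoint_average`.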

The reduction of the link quality with distance is evident. A signal level of -70 dBm (Fig. 4a) is observed at a distance of about 73 meters from the AP, where the bitrate is 60 Mbits/sec (Fig. 4b). Within 73 meters, the values captured in LOW mode are comparable with those of the HIGH mode. From the results of this first survey, it is therefore possible to assert that, with this configuration of the nodes, the transmission is reliable for distances between AP and SBC within 70 meters. Beyond this limit, the communication of the A/V flows becomes less reliable, until the connection is lost at a distance of 156 meters. A deeper analysis of the datarate with Wireshark showed that the data flow begins to become unstable from 50 m (Fig. 5), at least in the geographical area where the tests were carried out. Beyond this point, the communication of the A/V streams becomes noticeably less reliable. Indeed, the number of lost packets increases with distance and, consequently, higher data-rate peaks due to retransmissions occur more frequently at greater distances. This can be seen, for example, in the graph of Fig. 5, where it was necessary to restart the streaming three times once the remote node was more than 90 m away.

3.2 Tests with a single node and the sectorial antenna

Improvements were made to the transmission infrastructure by connecting MU-MIMO antennas to the SBCs and using a high-gain sectorial extender at the CS (Fig. 6). The MU-MIMO functionality of the 802.11ac Wave 2 standard allows nodes to send data simultaneously (from up to four radio clients), optimizing the process of addressing the signal to the selected devices only. This technology improves coverage by dynamically changing the direction of the radio frequency emissions according to the position of the clients, thus optimizing the available Wi-Fi resources by allocating them equally among the nodes. Conversely, an access point or a client based on previous Wi-Fi protocols can communicate with only one client at a time, which introduces latency, bottlenecks and packet loss.

Instead of the standard omnidirectional antennas, a MikroTik MTAS-5G-15D120 15 dBi 2x2 MIMO sectorial antenna (Fig. 6a) was used as the EAP-225 antenna extension for the ground station. Its radiation patterns are shown in Fig. 6c, with the horizontal polarization patterns in the leftmost graphs and the vertical polarization patterns in the rightmost graphs. The azimuth patterns highlight the broad beam of the sectorial antenna, with a 120-degree aperture, in which the high directionality of the beam is visible, while a narrower beam can be seen in the elevation patterns. An omnidirectional MU-MIMO USB 3.0 TP-Link AC1300 Archer T3U Plus antenna (Fig. 6b) was also installed on each SBC, with the exception of the Intel-based SBC, which has its own integrated external omnidirectional antenna.

For this second test phase with the new configuration, the RF parameters were measured in a similar way to the experiment in Section 3.1, except that they were sampled only in LOW mode and the checkpoint path was extended, as shown in Fig. 7. Several measurement points were added to the new checkpoint path, thus increasing the distance between the nodes and the antenna. Three points in non-Line-of-Sight (N-LoS) were added (no. 15-17) in order to test multimedia transmission under a different condition. The Line-of-Sight (LoS) distance from the antenna to each checkpoint was measured using Google Maps. The RSSI of the SBC signal, as calculated by the CS access point, was also considered; it was extracted from the web interface of the access point, which gives access to several RF parameters and settings for each connected node. Fig. 8a, b and c show three graphs of the RF parameters sampled at each checkpoint.

From the aforementioned graphs, a significant improvement given by both the sectorial extender and the USB omnidirectional antenna is evident. Indeed, the MU-MIMO technology allows the beams to be focused more precisely, increasing not only the datarate but also the signal strength. The extension of the checkpoints also confirmed that with this equipment we obtained an excellent transmission link, even in unfavorable conditions and without LoS, as at points 16 and 17. The lowest datarate recorded was 234 Mbps, at point 17, which is not in LoS. Nevertheless, this still guarantees a sufficient channel margin for multiple multimedia streams, even from other devices. Packet reception (Fig. 9) was also linear and much more stable than in the first configuration analyzed in Fig. 5.

3.3 A comparison with a 2.4GHz sectorial antenna

In order to verify the robustness and the efficiency of the 5 GHz system, we compared it with a 2.4 GHz Wi-Fi infrastructure using a TP-Link TL-ANT2415MS 2.4 GHz 15 dBi 2x2 MIMO extender as the CS antenna (Fig. 10a), whose radiation patterns are shown in Fig. 10b. Both the horizontal polarization patterns (leftmost graphs) and the vertical polarization patterns (rightmost graphs) are shown. The azimuth patterns highlight the broad beam of the sectorial antenna, with a 120-degree aperture, in which the high directionality of the beam is visible, while a narrower beam can be seen in the elevation patterns. MU-MIMO USB external antennas were employed for the SBCs. A Raspberry Pi 4 with a PSEye peripheral was used for data acquisition. As in the previous experiment described in Section 3.2, the antenna was mounted on a stand (Fig. 10a) and placed outside the laboratory, facing the rugby field. The testing procedure and the checkpoints were the same as in that experiment. The graphs in Fig. 11 compare the performance reached by the 5 GHz sectorial antenna with that of the 2.4 GHz one. The latter performed worse than the former, resulting in a dropped connection at several points. This was also caused by a drastic decrease of the channel datarate at the farthest points. Packets sent on the 2.4 GHz band arrived degraded or were lost in transmission. The retransmission requests and the missed acknowledgments caused a fatal bottleneck that prevented the tx/rx of new frames. This occurred starting from 128 meters (point no. 6 of Fig. 7). Transmission in N-LoS was also not possible with this configuration: from point no. 15 onwards it was not possible to transmit any type of data. A setup similar to the 2.4 GHz one just described would therefore not allow a multi-node, multiple-stream configuration with a sufficient datarate on the communication channel.

3.4 Tests with multiple nodes and the sectorial antenna

Once the behavior and coverage of the new infrastructure with streaming from a single node was verified, a new test session for a multi-node configuration was performed. We used 4 distributed nodes with heterogeneous sensors and streams, as listed in Section 2.1.

Since the NV41S SBC already has an integrated external antenna, no MU-MIMO antenna was connected to it. From the graphs of Fig. 12, compared with the previous experiment, a considerable increase in network performance can be noticed, especially for the MU-MIMO nodes, which have approximately the same network characteristics, guaranteeing fairness in the use of the communication channel. Indeed, in Fig. 12a the RSSI measured at the WAP decreases from around -55 dBm at 28 m to around -70 dBm at 146 m for the Raspberry Pi nodes. The Intel SBC node is seen with lower RSSI values, from -63 dBm at 28 m to -80 dBm at 146 m. The RSSI values measured by the remote Raspberry Pi nodes range from -40 dBm to around -63 dBm, as shown in Fig. 12b. The corresponding data-rates are in Fig. 12c, where the Intel SBC guarantees 433 Mbps when close to the AP, down to 175 Mbps at the farthest checkpoint. Again, the Raspberry Pi nodes show better performance, with data-rates from 866 Mbps to 460 Mbps. The NV41S showed lower network performance due to the lack of an integrated Wi-Fi 5 (802.11ac) standard-compliant device. Despite this, at the farthest point (146 m), where the bandwidth is generally reduced, the communication channel of the PC-Stick had a datarate of 175 Mbps, more than enough to transmit an uncompressed audio stream of 19 channels simultaneously.
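The closing claim can be checked with a short calculation using the Zylia audio format from Section 2.1 (19 channels, 48 kHz, 24 bit) and the 175 Mbps datarate reported at the farthest checkpoint. The sketch below is illustrative only: the `efficiency` factor, which stands in for the gap between the nominal link rate and the throughput actually usable by TCP, is a hypothetical value chosen for this example and was not measured in the manuscript.

```python
def audio_share_of_link(channels, sample_rate_hz, bits_per_sample,
                        link_rate_mbps, efficiency=0.5):
    """Fraction of the usable link taken by an uncompressed PCM stream.

    `efficiency` is a hypothetical ratio of usable throughput to nominal
    link rate; 0.5 is an assumption of this sketch, not a measured value.
    """
    stream_mbps = channels * sample_rate_hz * bits_per_sample / 1e6
    usable_mbps = link_rate_mbps * efficiency
    return stream_mbps / usable_mbps


# Zylia stream over the lowest datarate reported for the PC-Stick (175 Mbps).
ZYLIA_SHARE = audio_share_of_link(19, 48_000, 24, link_rate_mbps=175)

print(f"Zylia audio uses {ZYLIA_SHARE:.0%} of the assumed usable link")
```

Even with only half of the nominal rate assumed to be usable, the roughly 21.9 Mbit/s audio stream occupies about a quarter of the channel, which is consistent with the margin described above.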

4 Conclusions

In this paper, several tests were executed in order to develop and evaluate the performance of a networked multimedia system composed of four heterogeneous embedded optical-acoustic nodes with high-quality sensing capabilities. We studied the infrastructure starting from a single-node configuration with omnidirectional antennas, up to a multi-node one with a high-performing sectorial antenna. The objective was to obtain the maximum possible network and RF performance for reliable and effective connection and communication between nodes. As demonstrated, despite the intrinsic sensitivity to the environment in which the experiments were carried out, due to reflection and attenuation in the propagation of waves from surrounding objects, Wi-Fi technology with the IEEE 802.11ac protocol in the 5.2 GHz band guarantees a higher data rate than the 2.4 GHz band. It also guaranteed greater immunity to interference, further improved by the MU-MIMO antennas used at both the receiving and transmitting nodes. Future work will extend the proposed architecture and network with multi-AP configurations for node mobility, allowing the sensing platforms to be integrated in mobile agents.

References

[1] Barello P, Hossain MS (2021) Multimodal person detection system. Multimedia Tools and Applications, Springer 80(9):13389–13406

[2] Garcia L, Jimenez J, Taha M, Lloret J (2018) Wireless technologies for IoT in smart cities. Network Protocols and Algorithms 10:23. https://doi.org/10.5296/npa.v10i1.12798

[3] Hayat S, Yanmaz E, Bettstetter C (2015) Experimental analysis of multipoint-to-point UAV communications with IEEE 802.11n and 802.11ac. In: 2015 IEEE 26th annual international symposium on personal, indoor, and mobile radio communications (PIMRC), pp 1991–1996. https://doi.org/10.1109/PIMRC.2015.7343625

[4] Kaewkiriya T (2017) Performance comparison of Wi-Fi IEEE 802.11ac and Wi-Fi IEEE 802.11n. In: 2017 2nd international conference on communication systems, computing and it applications (CSCITA), pp 235–240. https://doi.org/10.1109/CSCITA.2017.8066560

[5] Kim D-Y, Park J-H, Lee Y, Kim S (2020) Network virtualization for real-time processing of object detection using deep learning. Multimedia Tools and Applications, Springer, pp 1–19

[6] Leong WL, Martinel N, Huang S, Micheloni C, Foresti GL, Teo RSH (2021) An intelligent auto-organizing aerial robotic sensor network system for urban surveillance. Journal of Intelligent & Robotic Systems 102(2):33. https://doi.org/10.1007/s10846-021-01398-y

[7] Martinel N, Foresti GL, Micheloni C (2017) Person reidentification in a distributed camera network framework. IEEE Trans Cybern 47(11):3530–3541. https://doi.org/10.1109/TCYB.2016.2568264

[8] Newell D, Davies P, Wade R, Decaux P, Sharma M (2017) Comparison of theoretical and practical performances with 802.11n and 802.11ac wireless networking, pp 1710–715. https://doi.org/10.1109/WAINA.2017.113

[9] Piciarelli C, Foresti GL (2011) Surveillance-oriented event detection in video streams. IEEE Intell Syst 26(3):32–41. https://doi.org/10.1109/MIS.2010.38

[10] Salvati D, Drioli C, Ferrin G, Foresti GL (2020) Acoustic source localization from multirotor UAVs. IEEE Trans Ind Electron 67(10):8618–8628. https://doi.org/10.1109/TIE.2019.2949529

[11] Salvati D, Drioli C, Foresti GL (2014) Incoherent frequency fusion for broadband steered response power algorithms in noisy environments. IEEE Signal Process Lett 21(5):581–585. https://doi.org/10.1109/LSP.2014.2311164

[12] Taha M, Lloret J, Canovas A, Garcia L (2017) Survey of transportation of adaptive multimedia streaming service in internet. Network Protocols and Algorithms 9:85. https://doi.org/10.5296/npa.v9i1-2.12412

[13] Toma A, Cecchinato N, Drioli C, Foresti GL, Ferrin G (2021) CNN-based processing of radio frequency signals for augmenting acoustic source localization and enhancement in UAV security applications. In: 2021 international conference on military communication and information systems (ICMCIS), pp 1–5. https://doi.org/10.1109/ICMCIS52405.2021.9486424

[14] Toma A, Cecchinato N, Drioli C, Foresti GL, Ferrin G (2021) Towards drone recognition and localization from flying UAVs through processing of multi-channel acoustic and radio frequency signals: a deep learning approach. In: IST-190 Symposium on AI, ML and BD for Hybrid Military Operations (AI4HMO), in press

[15] Toma A, Salvati D, Drioli C, Foresti GL (2021) CNN-based processing of acoustic and radio frequency signals for speaker localization from MAVs. In: Proceedings of the 22th conference of the international speech communication association (INTERSPEECH), Brno, Czechia, pp 2147–2151

[16] TP-Link Technologies Co. Ltd., EAP Datasheet. https://static.tp-link.com/upload/product-overview/2021/202107/20210730/EAP%20Datasheet.pdf

[17] Waharte S, Boutaba R, Iraqi Y, Ishibashi B (2006) Routing protocols in wireless mesh networks: challenges and design considerations. Multimedia Tools and Applications, Springer 29(3):285–303

[18] Yanmaz E, Yahyanejad S, Rinner B, Hellwagner H, Bettstetter C (2018) Drone networks: communications, coordination, and sensing. Ad Hoc Netw 68:1–15. https://doi.org/10.1016/j.adhoc.2017.09.001. Advances in Wireless Communication and Networking for Cooperating Autonomous Systems

[19] ZYLIA ZM-1 Microphone. https://www.zylia.co/zylia-zm-1-microphone.html

Acknowledgements

This research was partially supported by ONRG - U.S. Department of Defense research project N62909-20-1-2075 “Target Re-Association for Autonomous Agents” (TRAAA).

Funding

Open access funding provided by Università degli Studi di Udine within the CRUI-CARE Agreement.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cecchinato, N., Toma, A., Drioli, C. et al. Performance evaluation of a Wi-Fi-based multi-node network for distributed audio-visual sensors. Multimed Tools Appl 82, 29753–29768 (2023). https://doi.org/10.1007/s11042-023-14677-7

DOI: https://doi.org/10.1007/s11042-023-14677-7