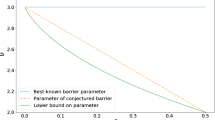

Abstract

In recent work, we provide computational arguments for expanding the class of proper cones recognized by conic optimization solvers, to permit simpler, smaller, more natural conic formulations. We define an exotic cone as a proper cone for which we can implement a small set of tractable (i.e. fast, numerically stable, analytic) oracles for a logarithmically homogeneous self-concordant barrier for the cone or for its dual cone. Our extensible, open-source conic interior point solver, Hypatia, allows modeling and solving any conic problem over a Cartesian product of exotic cones. In this paper, we introduce Hypatia’s interior point algorithm, which generalizes that of Skajaa and Ye (Math. Program. 150(2):391–422, 2015) by handling exotic cones without tractable primal oracles. To improve iteration count and solve time in practice, we propose four enhancements to the interior point stepping procedure of Skajaa and Ye: (1) loosening the central path proximity conditions, (2) adjusting the directions using a third order directional derivative barrier oracle, (3) performing a backtracking search on a curve, and (4) combining the prediction and centering directions. We implement 23 useful exotic cones in Hypatia. We summarize the complexity of computing oracles for these cones and show that our new third order oracle is not a bottleneck. From 37 applied examples, we generate a diverse benchmark set of 379 problems. Our computational testing shows that each stepping enhancement improves Hypatia’s iteration count and solve time. Altogether, the enhancements reduce the geometric means of iteration count and solve time by over 80% and 70% respectively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Any convex optimization problem may be represented as a conic problem that minimizes a linear function over the intersection of an affine subspace with a Cartesian product of primitive proper cones (i.e. irreducible, closed, convex, pointed, and full-dimensional conic sets). Under certain conditions, a conic problem has a simple and easily checkable certificate of optimality, primal infeasibility, or dual infeasibility [60]. Most commercial and open-source conic solvers (such as CSDP [8], CVXOPT [3], ECOS [24, 63], MOSEK [42], SDPA [68], Alfonso [56]) implement primal-dual interior point methods (PDIPMs) based on the theory of logarithmically homogeneous self-concordant barrier (LHSCB) functions. Compared to first order conic methods (see [54] on SCS solver), idealized PDIPMs typically exhibit higher per-iteration cost, but have a lower iteration complexity of \({\mathcal {O}}(\sqrt{\nu } \log (1 / \varepsilon ))\) iterations to converge to \(\varepsilon \) tolerance, where \(\nu \) is the barrier parameter of the LHSCB. We limit the scope of this paper to conic PDIPMs, but note that there are other notions of duality and PDIPMs for convex problems outside of the conic realm (see e.g. [37]).

1.1 Conic optimization with Hypatia solver

Hypatia [20] is an open-source, extensible conic primal-dual interior point solver.Footnote 1 Hypatia is written in the Julia language [7] and is accessible through a flexible, low-level native interface or the modeling tool JuMP [25]. A key feature of Hypatia is a generic cone interface that allows users to define new exotic cones. An exotic cone is a proper cone for which we can implement a small set of tractable LHSCB oracles (listed in Sect. 1.2) for either the cone or its dual cone. Defining a new cone through Hypatia’s cone interface makes both the cone and its dual available for use in conic formulations. We have already predefined 23 useful exotic cone types (some with multiple variants) in Hypatia. Several cones are new and required the development of LHSCBs and efficient procedures for oracle evaluations (see [18, 35]).

Advanced conic solvers such as MOSEK 9 currently recognize at most only a handful of standard cones: nonnegative, (rotated) second order, positive semidefinite (PSD), and three-dimensional exponential and power cones. In [20], we show across seven applied examples that modeling with the much larger class of exotic cones often permits simpler, smaller, more natural conic formulations. Our computational experiments with these examples demonstrate the potential advantages, especially in terms of solve time and memory usage, of solving natural formulations with Hypatia compared to solving standard conic extended formulations with either Hypatia or MOSEK 9. However, a description of Hypatia’s algorithms was outside the scope of [20]. In contrast, the main purpose of this paper is to introduce some key features of our exotic conic PDIPM that enable it to be both general and performant.

1.2 The Skajaa-Ye algorithm

Most conic PDIPM solvers use efficient algorithms specialized for symmetric cones, in particular, the nonnegative, (rotated) second order, and PSD cones. Although non-symmetric conic PDIPMs proposed in [47, 50] can handle a broader class of cones, they have several disadvantages compared to specialized symmetric methods (e.g. requiring larger linear systems, strict feasibility of the initial iterate, or conjugate LHSCB oracles). The algorithm by Skajaa and Ye [64], henceforth referred to as SY, addresses these issues by approximately tracing the central path of the homogeneous self-dual embedding (HSDE) [2, 67] to an approximate solution for the HSDE. This final iterate provides an approximate conic certificate for the conic problem, if a conic certificate exists. The SY algorithm relies on an idea by Nesterov [47] that a high quality prediction direction (enabling a long step and rapid progress towards a solution) can be computed if the current iterate is in close proximity to the central path (i.e. it is an approximate scaling point). To restore centrality after each prediction step, SY performs a series of centering steps.

By using a different definition of central path proximity to [47], SY avoids needing conjugate LHSCB oracles.Footnote 2 Indeed, a major advantage of SY is that it only requires access to a few tractable oracles for the primal cone: an initial interior point, feasibility check, and gradient and Hessian evaluations for the LHSCB. In our experience, for a large class of proper cones, these oracles can be evaluated analytically, i.e. without requiring the implementation of iterative numerical procedures (such as optimization) that can be expensive and may need numerical tuning. Conjugate LHSCB oracles in general require optimization, and compared to the analytic oracles, they are often significantly less efficient and more prone to numerical instability.

1.3 Practical algorithmic developments

For many proper cones of interest, including most of Hypatia’s non-symmetric cones, we are aware of LHSCBs with tractable oracles for either the cone or its dual cone but not both. Suppose a problem involves a Cartesian product of exotic cones, some with primal oracles implemented and some with dual oracles implemented (as in several example formulations described in [20]). In this case, SY can solve neither the primal problem nor its conic dual, as SY requires primal oracles. Our algorithm generalizes SY to allow a conic formulation over any Cartesian product of exotic cones.

The focus of [64] is demonstrating that SY has the best known iteration complexity for conic PDIPMs. This complexity analysis was corrected by Papp and Yıldız [56], who implemented SY in their recent MATLAB solver Alfonso [58, 59]. It is well known that performant PDIPM implementations tend to violate assumptions used in iteration complexity analysis, so in this paper we are not concerned with iteration complexity. Our goal is to reduce iteration counts and solve times in practice, by enhancing the performance of the interior point stepping procedure proposed by SY and implemented by Alfonso.

The basic SY-like stepping procedure computes a prediction or centering direction by solving a large structured linear system, performs a backtracking line search in the direction, and steps as far as possible given a restrictive central path proximity condition. We propose a sequence of four practical performance enhancements.

-

Less restrictive proximity. We use a relaxed central path proximity condition, allowing longer prediction steps and fewer centering steps.

-

Third order adjustments. After computing the prediction or centering direction, we compute a third order adjustment (TOA) direction using a new third order oracle (TOO) for exotic cones. We use a line search in the unadjusted direction to determine how to combine it with the TOA direction, before performing a second line search and stepping in the new adjusted direction.

-

Curve search. Due to the central path proximity checks, each backtracking line search can be quite expensive. Instead of performing two line searches, we use a single backtracking search along a particular quadratic curve of combinations of the unadjusted and TOA directions.

-

Combined directions. Unlike SY, most conic PDIPMs do not use separate prediction and centering phases. We compute the prediction and centering directions and their associated TOA directions, then perform a backtracking search along a quadratic curve of combinations of all four directions.

Our TOA approach is distinct from the techniques in [21, 41] that also use higher order LHSCB information.Footnote 3 Unlike these techniques, we derive adjustments (using the TOO) for both the prediction and centering directions. Our TOO has a simpler and more symmetric structure than the third order term used in [21], and we leverage this for fast and numerically stable evaluations. Whereas the method of [41] only applies to symmetric cones, and the technique in [21] is tested only for the standard exponential cone, we implement and test our TOO for all of Hypatia’s 23 predefined cones. In our experience, requiring a tractable TOO is only as restrictive as requiring tractable gradient and Hessian oracles. We show that the time complexity of the TOO is no higher than that of the other required oracles for each of our cones. To illustrate, we describe efficient and numerically stable TOO procedures for several cones that can be characterized as intersections of slices of the PSD cone.

Although this paper is mainly concerned with the stepping procedures, we also outline our implementations of other key algorithmic components. These include preprocessing of problem data, finding an initial iterate, the solution of structured linear systems for search directions, and efficient backtracking searches with central path proximity checks. We note that Hypatia has a variety of algorithmic options for these components; these different options can have a dramatic impact on overall solve time and memory usage, but in most cases they have minimal effect on the iteration count. For the purposes of this paper, we only describe and test one set of (default) options for these components.

1.4 Benchmark instances and computational testing

We implement and briefly describe 37 applied examples (available in Hypatia’s examples folder), each of which has options for creating formulations of different types and sizes. From these examples, we generate 379 problem instances of a wide range of sizes. Since there is currently no conic benchmark storage format that recognizes more than a handful of cone types, we generate all instances on the fly using JuMP or Hypatia’s native interface. All of Hypatia’s predefined cones are represented in these instances, so we believe this is the most diverse conic benchmark set available.

On this benchmark set, we run five different stepping procedures: the basic SY-like procedure (similar to Alfonso) and the sequence of four cumulative enhancements to this procedure. Our results show that each enhancement tends to improve Hypatia’s iteration count and solve time, with minimal impact on the number of instances solved. We do not enforce time or iteration limits, but we note that under strict limits the enhancements would greatly improve the number of instances solved. The TOA enhancement alone leads to a particularly consistent improvement of around 45% for iteration counts. Overall, the enhancements together reduce the iterations and solve time by more than 80% and 70% respectively. For instances that take more iterations or solve time, the enhancements tend to yield greater relative improvements in these measures.

1.5 Overview

In Sect. 2, we define our mathematical notation. In Sect. 3, we define exotic cones, LHSCBs, and our required cone oracles (including the TOO). In Sect. 4, we describe Hypatia’s general primal-dual conic form, associated conic certificates, and the HSDE. In Sect. 5, we define the central path of the HSDE and central path proximity measures, and we outline Hypatia’s high level algorithm. We also derive the prediction and centering directions and our new TOA directions, and we describe the SY-like stepping procedure and our series of four enhancements to this procedure. In Appendices A to B, we discuss advanced procedures for preprocessing and initial point finding, solving structured linear systems for directions, and performing efficient backtracking searches and proximity checks. In Sect. 6, we briefly introduce Hypatia’s predefined exotic cones and show that our TOO is relatively cheap to compute, and in Appendix C we describe some TOO implementations. In Sect. 7, we summarize our applied examples and exotic conic benchmark instances, and finally we present our computational results demonstrating the practical efficacy of our stepping enhancements.

2 Notation

For a natural number d, we define the index set \(\llbracket d \rrbracket :=\{1, 2, \ldots , d\}\). Often we construct vectors with round parentheses, e.g. (a, b, c), and matrices with square brackets, e.g. \({\begin{matrix} a &{} b \\ c &{} d \end{matrix}}\). For a set \({\mathcal {C}}\), \({{\,\mathrm{cl}\,}}({\mathcal {C}})\) and \({{\,\mathrm{int}\,}}({\mathcal {C}})\) denote the closure and interior of \({\mathcal {C}}\), respectively.

\({\mathbb {R}}\) denotes the space of reals, and \({\mathbb {R}}_{\ge }\), \({\mathbb {R}}_>\), \({\mathbb {R}}_{\le }\), \({\mathbb {R}}_<\) denote the nonnegative, positive, nonpositive, and negative reals. \({\mathbb {R}}^d\) is the space of d-dimensional real vectors, and \({\mathbb {R}}^{d_1 \times d_2}\) is the \(d_1\)-by-\(d_2\)-dimensional real matrices. The vectorization operator \({{\,\mathrm{vec}\,}}: {\mathbb {R}}^{d_1 \times d_2} \rightarrow {\mathbb {R}}^{d_1 d_2}\) maps matrices to vectors by stacking columns, and its inverse operator is \({{\,\mathrm{mat}\,}}_{d_1, d_2} : {\mathbb {R}}^{d_1 d_2} \rightarrow {\mathbb {R}}^{d_1 \times d_2}\).

\({\mathbb {S}}^d\) is the space of symmetric matrices with side dimension d, and \({\mathbb {S}}^d_{\succeq }\) and \({\mathbb {S}}^d_{\succ }\) denote the positive semidefinite and positive definite symmetric matrices. The inequality \(S \succeq Z\) is equivalent to \(S - Z \in {\mathbb {S}}^d_{\succeq }\) (and similarly for the strict inequality \(\succ \) and \({\mathbb {S}}^d_{\succ }\)). We let \({{\,\mathrm{sd}\,}}(d) :=d (d + 1) / 2\) be the dimension of the vectorized upper triangle of \({\mathbb {S}}^d\). We overload the vectorization operator \({{\,\mathrm{vec}\,}}: {\mathbb {S}}^d \rightarrow {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)}\) to perform an svec transformation, which rescales off-diagonal elements by \(\sqrt{2}\) and stacks columns of the upper triangle (or equivalently, rows of the lower triangle). For example, for \(S \in {\mathbb {S}}^3\) we have \({{\,\mathrm{sd}\,}}(3) = 6\) and \({{\,\mathrm{vec}\,}}(S) = ( S_{1,1}, \sqrt{2} S_{1,2}, S_{2,2}, \sqrt{2} S_{1,3}, \sqrt{2} S_{2,3}, S_{3,3} ) \in {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(3)}\). The inverse mapping \({{\,\mathrm{mat}\,}}: {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} \rightarrow {\mathbb {S}}^d\) is well-defined.

For a vector or matrix A, the transpose is \(A'\) and the trace is \({{\,\mathrm{tr}\,}}(A)\). I(d) is the identity matrix in \({\mathbb {R}}^{d \times d}\). We use the standard inner product on \({\mathbb {R}}^d\), i.e. \(s' z = {\textstyle \sum _{i \in \llbracket d \rrbracket }} s_i z_i\) for \(s, z \in {\mathbb {R}}^d\), which equips \({\mathbb {R}}^d\) with the standard norm \(\Vert s \Vert = (s' s)^{1/2}\). The linear operators \({{\,\mathrm{vec}\,}}\) and \({{\,\mathrm{mat}\,}}\) preserve inner products, e.g. \({{\,\mathrm{vec}\,}}(S)' {{\,\mathrm{vec}\,}}(Z) = {{\,\mathrm{tr}\,}}(S' Z)\) for \(S, Z \in {\mathbb {R}}^{d_1 \times d_2}\) or \(S, Z \in {\mathbb {S}}^d\). \({{\,\mathrm{Diag}\,}}: {\mathbb {R}}^d \rightarrow {\mathbb {S}}^d\) is the diagonal matrix of a given vector, and \({{\,\mathrm{diag}\,}}: {\mathbb {S}}^d \rightarrow {\mathbb {R}}^d\) is the vector of the diagonal of a given matrix. For dimensions implied by context, e is a vector of 1s, \(e_i\) is the ith unit vector, and 0 is a vector or matrix of 0s.

\(|x |\) is the absolute value of \(x \in {\mathbb {R}}\) and \(\log (x)\) is the natural logarithm of \(x > 0\). \(\det (X)\) is the determinant of \(X \in {\mathbb {S}}^d\), and \({{\,\mathrm{logdet}\,}}(X)\) is the log-determinant of \(X \succ 0\). For a vector \(x \in {\mathbb {R}}^d\), \(\Vert x \Vert _\infty = \max _{i \in \llbracket d \rrbracket } |x_i |\) is the \(\ell _\infty \) norm and \(\Vert x \Vert _1 = \sum _{i \in \llbracket d \rrbracket } |x_i |\) is the \(\ell _1\) norm.

Suppose the function \(f : {{\,\mathrm{int}\,}}({\mathcal {C}}) \rightarrow {\mathbb {R}}\) is strictly convex and three times continuously differentiable on the interior of a set \({\mathcal {C}}\subset {\mathbb {R}}^d\). For a point \(p \in {{\,\mathrm{int}\,}}({\mathcal {C}})\), we denote the gradient and Hessian of f at p as \(\nabla f(p) \in {\mathbb {R}}^d\) and \(\nabla ^2 f(p) \in {\mathbb {S}}^d_{\succ }\). Given an \(h \in {\mathbb {R}}^d\), the first, second, and third order directional derivatives of f at p in direction h are \(\nabla f(p) [h] \in {\mathbb {R}}\), \(\nabla ^2 f(p) [h, h] \in {\mathbb {R}}_{\ge }\), and \(\nabla ^3 f(p) [h, h, h] \in {\mathbb {R}}\).

3 Exotic cones and oracles

Let \({\mathcal {K}}\) be a proper cone in \({\mathbb {R}}^q\), i.e. a conic subset of \({\mathbb {R}}^q\) that is closed, convex, pointed, and full-dimensional (see [64]). Note that requiring \({\mathcal {K}}\) to be a subset of \({\mathbb {R}}^q\) simplifies our notation but is not restrictive, e.g. for the PSD cone, we use the standard svec vectorization (see Sect. 2). The dual cone of \({\mathcal {K}}\) is \({\mathcal {K}}^*\), which is also a proper cone in \({\mathbb {R}}^q\):

Following [49, Sections 2.3.1 and 2.3.3], \(f : {{\,\mathrm{int}\,}}({\mathcal {K}}) \rightarrow {\mathbb {R}}\) is a \(\nu \)-LHSCB for \({\mathcal {K}}\), where \(\nu \ge 1\) is the LHSCB parameter, if it is three times continuously differentiable, strictly convex, satisfies \(f(s_i) \rightarrow \infty \) along every sequence \(s_i \in {{\,\mathrm{int}\,}}({\mathcal {K}})\) converging to the boundary of \({\mathcal {K}}\), and:

Following [61, Section 3.3], we define the conjugate of f, \(f^*: {{\,\mathrm{int}\,}}({\mathcal {K}}^*) \rightarrow {\mathbb {R}}\), as:

which is a \(\nu \)-LHSCB for \({\mathcal {K}}^*\).

A Cartesian product \({\mathcal {K}}= {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_K\) of K proper cones is a proper cone, and its dual cone is \({\mathcal {K}}^*= {\mathcal {K}}_1^*\times \cdots \times {\mathcal {K}}_K^*\). In this case, if \(f_k\) is a \(\nu _k\)-LHSCB for \({\mathcal {K}}_k\), then \(\sum _{k \in \llbracket K \rrbracket } f_k\) is an LHSCB for \({\mathcal {K}}\) with parameter \(\sum _{k \in \llbracket K \rrbracket } \nu _k\) [49, Proposition 2.3.3]. We call \({\mathcal {K}}\) a primitive cone if it cannot be written as a Cartesian product of two or more lower-dimensional cones (i.e. K must equal 1). Note \({\mathcal {K}}^*\) is primitive if and only if \({\mathcal {K}}\) is primitive. Primitive proper cones are the fundamental building blocks of conic formulations.

We call a proper cone \({\mathcal {K}}\) an exotic cone if we can implement a particular set of tractable oracles for either \({\mathcal {K}}\) or \({\mathcal {K}}^*\). Suppose we have tractable oracles for \({\mathcal {K}}\subset {\mathbb {R}}^q\) and let \(f : {{\,\mathrm{int}\,}}({\mathcal {K}}) \rightarrow {\mathbb {R}}\) denote the \(\nu \)-LHSCB for \({\mathcal {K}}\). The oracles for \({\mathcal {K}}\) that we require in this paper are as follows.

-

Feasibility check. The strict feasibility oracle checks whether a given point \(s \in {\mathbb {R}}^q\) satisfies \(s \in {{\,\mathrm{int}\,}}({\mathcal {K}})\).

-

Gradient and Hessian evaluations. Given a point \(s \in {{\,\mathrm{int}\,}}({\mathcal {K}})\), the gradient oracle g and Hessian oracle H evaluated at s are:

$$\begin{aligned} g(s)&:=\nabla f(s) \in {\mathbb {R}}^q, \end{aligned}$$(4a)$$\begin{aligned} H(s)&:=\nabla ^2 f(s) \in {\mathbb {S}}^q_{\succ }. \end{aligned}$$(4b) -

Third order directional derivative. Given a point \(s \in {{\,\mathrm{int}\,}}({\mathcal {K}})\) and a direction \(\delta _s \in {\mathbb {R}}^q\), our new third order oracle (TOO), denoted by \(\mathrm {T}\), is a rescaled third order directional derivative vector:

$$\begin{aligned} \mathrm {T}(s, \delta _s) :=-\tfrac{1}{2} \nabla ^3 f(s) [\delta _s, \delta _s] \in {\mathbb {R}}^q. \end{aligned}$$(5) -

Initial interior point. The initial interior point \(t \in {{\,\mathrm{int}\,}}({\mathcal {K}})\) is an arbitrary point in the interior of \({\mathcal {K}}\) (which is nonempty since \({\mathcal {K}}\) is proper).

In Sect. 6, we introduce Hypatia’s predefined cones and discuss the time complexity of computing the feasibility check, gradient, Hessian, and TOO oracles. In Appendix C, we describe efficient and numerically stable techniques for computing these oracles for a handful of our cones. Although Hypatia’s generic cone interface allows specifying additional oracles that can improve speed and numerical performance (e.g. a dual cone feasibility check, Hessian product, and inverse Hessian product), these optional oracles are outside the scope of this paper.

For the initial interior point (which Hypatia only calls once, when finding an initial iterate), we prefer to use the central point of \({\mathcal {K}}\). This is the unique point satisfying \(t \in {{\,\mathrm{int}\,}}({\mathcal {K}}) \cap {{\,\mathrm{int}\,}}({\mathcal {K}}^*)\) and \(t = -g(t)\) [21]. For the nonnegative cone \({\mathcal {K}}= {\mathbb {R}}_{\ge }\), \(f(s) = -\log (s)\) is an LHSCB with \(\nu = 1\), and we have \(g(s) = -s^{-1}\) and the central point \(t = 1 = -g(1)\). For some of Hypatia’s cones, we are not aware of a simple analytic expression for the central point, in which case we typically use a non-central interior point.

4 General conic form and certificates

Hypatia uses the following primal conic form over variable \(x \in {\mathbb {R}}^n\):

where \(c \in {\mathbb {R}}^n\), \(b \in {\mathbb {R}}^p\), and \(h \in {\mathbb {R}}^q\) are vectors, \(A : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^p\) and \(G : {\mathbb {R}}^n \rightarrow {\mathbb {R}}^q\) are linear maps, and \({\mathcal {K}}\subset {\mathbb {R}}^q\) is a Cartesian product \({\mathcal {K}}= {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_K\) of exotic cones. For \(k \in \llbracket K \rrbracket \), we let \(q_k = \dim ({\mathcal {K}}_k)\), so \(\sum _{k \in \llbracket K \rrbracket } q_k = q = \dim ({\mathcal {K}})\). Henceforth we use n, p, q to denote respectively the variable, equality, and conic constraint dimensions of a conic problem.

Once a proper cone \({\mathcal {K}}_k\) is defined through Hypatia’s generic cone interface, both \({\mathcal {K}}_k\) and \({\mathcal {K}}_k^*\) may be used in any combination with other cones recognized by Hypatia to construct the Cartesian product cone \({\mathcal {K}}\) in (6c). The primal form (6) matches CVXOPT’s form, however CVXOPT only recognizes symmetric cones [66]. Unlike the conic form used in [59, 64], which recognizes conic constraints of the form \(x \in {\mathcal {K}}\), our form does not require introducing slack variables to represent a more general constraint \(h - G x \in {\mathcal {K}}\).

The conic dual problem of (6), over variables \(y \in {\mathbb {R}}^p\) and \(z \in {\mathbb {R}}^q\) associated with (6b) and (6c), is:

where (7b) is associated with the primal variable \(x \in {\mathbb {R}}^n\).

Under certain conditions, there exists a simple conic certificate providing an easily verifiable proof of infeasibility of the primal (6) or dual (7) problem (via the conic generalization of Farkas’ lemma) or optimality of a given primal-dual solution (via conic weak duality).

-

A primal improving ray x is a feasible direction for the primal along which the objective improves (hence it certifies dual infeasibility):

$$\begin{aligned} c' x < 0, \quad -A x = 0, \quad -G x \in {\mathcal {K}}. \end{aligned}$$(8) -

A dual improving ray (y, z) is a feasible direction for the dual along which the objective improves (hence it certifies primal infeasibility):

$$\begin{aligned} -b' y - h' z > 0, \quad A' y + G' z = 0, \quad z \in {\mathcal {K}}^*. \end{aligned}$$(9) -

A complementary solution (x, y, z) satisfies the primal-dual feasibility conditions (6b), (6c), (7b) and (7c), and has equal and attained primal and dual objective values:

$$\begin{aligned} c' x = -b' y - h' z. \end{aligned}$$(10)

One of these certificates exists if neither the primal nor the dual is ill-posed. Intuitively, according to [42, Section 7.2], a conic problem is ill-posed if a small perturbation of the problem data can change the feasibility status of the problem or cause arbitrarily large perturbations to the optimal solution (see [60] for more details).

The homogeneous self-dual embedding (HSDE) is a self-dual conic feasibility problem in variables \(x \in {\mathbb {R}}^n, y \in {\mathbb {R}}^p, z \in {\mathbb {R}}^q, \tau \in {\mathbb {R}}, s \in {\mathbb {R}}^q, \kappa \in {\mathbb {R}}\) (see [66, Section 6]), derived from a homogenization of the primal-dual optimality conditions (6b), (6c), (7b), (7c) and (10):

For convenience we let \(\omega :=(x, y, z, \tau , s, \kappa ) \in {\mathbb {R}}^{n + p + 2q + 2}\) represent a point. We define the structured \(4 \times 6\) block matrix \(E \in {\mathbb {R}}^{(n + p + q + 1) \times \dim (\omega )}\) such that (11a) is equivalent to \(E \omega = 0\). Here we assume E has full row rank; in Appendix A we discuss preprocessing techniques that handle linearly dependent rows. Note that \(\omega = 0\) satisfies (11), so the HSDE is always feasible. A point \(\omega \) is called an interior point if it is strictly feasible for (11b), i.e. \((z, \tau , s, \kappa ) \in {{\,\mathrm{int}\,}}\bigl ( {\mathcal {K}}^*\times {\mathbb {R}}_{\ge } \times {\mathcal {K}}\times {\mathbb {R}}_{\ge } \bigr )\).

Suppose a point \(\omega \) is feasible for the HSDE (11). From skew symmetry of the square \(4 \times 4\) block matrix in (11a), we have \(s' z + \kappa \tau = 0\). From (11b) and the dual cone inequality (1), we have \(s' z \ge 0\) and \(\kappa \tau \ge 0\). Hence \(s' z = \kappa \tau = 0\). We consider an exhaustive list of cases below [66, Section 6.1].

-

Optimality. If \(\tau > 0, \kappa = 0\), then \((x, y, z) / \tau \) is a complementary solution.

-

Infeasibility. If \(\tau = 0, \kappa > 0\), then \(c' x + b' y + h' z < 0\).

-

Of dual. If \(c' x < 0\), then x is a primal improving ray.

-

Of primal. If \(b' y + h' z < 0\), then (y, z) is a dual improving ray.

-

-

No information. If \(\tau = \kappa = 0\), then \(\omega \) provides no information about the feasibility or optimal values of the primal or dual.

According to Skajaa and Ye [64, Section 2], if the primal and dual problems are feasible and have zero duality gap, SY (their algorithm) finds an HSDE solution with \(\tau > 0\) (yielding a complementary solution), and if the primal or dual (possibly both) is infeasible, SY finds an HSDE solution with \(\kappa > 0\) (yielding an infeasibility certificate). This implies that if SY finds a solution with \(\kappa = \tau = 0\), then \(\kappa = \tau = 0\) for all solutions to the HSDE; in this case, no complementary solution or improving ray exists, and the primal or dual (possibly both) is ill-posed [60]. The algorithm we describe in Sect. 5 is an extension of SY that inherits these properties.

5 Central path following algorithm

In Sect. 5.1, we describe the central path of the HSDE, and in Sect. 5.2 we define central path proximity measures. In Sect. 5.3, we outline a high level PDIPM that maintains iterates close to the central path, and we give numerical convergence criteria for detecting approximate conic certificates. In Sect. 5.4, we derive prediction and centering directions and our corresponding TOA directions using the TOO. Finally in Sect. 5.5, we summarize an SY-like stepping procedure and describe our sequence of four enhancements to this procedure.

5.1 Central path of the HSDE

We define the HSDE in (11). Recall that \({\mathcal {K}}\) in our primal conic form (6) is a Cartesian product \({\mathcal {K}}= {\mathcal {K}}_1 \times \cdots \times {\mathcal {K}}_K\) of K exotic cones. We partition the exotic cone indices \(\llbracket K \rrbracket \) into two sets: \(K_\text {pr}\) for cones with primal oracles (i.e. for \({\mathcal {K}}_k\)) and \(K_\text {du}\) for cones with dual oracles (i.e. for \({\mathcal {K}}_k^*\)). For convenience, we append the \(\tau \) and \(\kappa \) variables onto the s and z variables. Letting \({\bar{K}}= K + 1\), we define for \(k \in \llbracket {\bar{K}} \rrbracket \):

For a given initial interior point \(\omega ^0 = (x^0, y^0, z^0, \tau ^0, s^0, \kappa ^0)\), the central path of the HSDE is the trajectory of solutions \(\omega _\mu = (x_\mu , y_\mu , z_\mu , \tau _\mu , s_\mu , \kappa _\mu )\), parameterized by \(\mu > 0\), satisfying:

When all exotic cones have primal oracles (i.e. \(K_\text {du}\) is empty), our definition (13) exactly matches the central path defined in [66, Equation 32], and only differs from the definition in [64, Equations 7-8] in the affine form (i.e. the variable names and affine constraint structure). Unlike SY, our central path condition (13b) allows cones with dual oracles (\(K_\text {du}\) may be nonempty).

To obtain an initial point \(\omega ^0\), we first let:

where \(t_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}_k \bigr )\) is the initial interior point oracle (note that \(\tau ^0 = \kappa ^0 = 1\)). Although \(x^0\) and \(y^0\) can be chosen arbitrarily, we let \(x^0\) be the solution of:

and we let \(y^0\) be the solution of:

In Appendix A, we outline a QR-factorization-based procedure for preprocessing the affine data of the conic model and solving for \(\omega ^0\).

Like [64, Section 4.1], we define the complementarity gap function:

where \(\nu _k\) is the LHSCB parameter of the LHSCB \(f_k\) for \(\bar{{\mathcal {K}}}_k\) (see (2b)). Note that \(\mu (\omega ) > 0\) if \(({\bar{z}}, {\bar{s}}) \in {{\,\mathrm{int}\,}}(\bar{{\mathcal {K}}}^*) \times {{\,\mathrm{int}\,}}(\bar{{\mathcal {K}}})\), by a strict version of the dual cone inequality (1). From (14), \(\mu (\omega ^0) = 1\), since in (17) we have \(({\bar{s}}^0)' {\bar{z}}^0 = {\textstyle \sum _{k \in \llbracket {\bar{K}} \rrbracket }} t_k' (-g_k (t_k))\), and \(t_k' (-g_k (t_k)) = \nu _k\) by logarithmic homogeneity of \(f_k\) [49, Proposition 2.3.4]. Hence \(\omega ^0\) satisfies the central path conditions (13) for parameter value \(\mu = 1\). The central path is therefore a trajectory that starts at \(\omega ^0\) with complementarity gap \(\mu = 1\) and approaches a solution for the HSDE as \(\mu \) decreases to zero.

5.2 Central path proximity

Given a point \(\omega \), we define the central path proximity \(\pi _k\) for exotic cone \(k \in \llbracket {\bar{K}} \rrbracket \) as:

Hence \(\pi _k\) is a measure of the distance from \({\bar{s}}_k\) and \({\bar{z}}_k\) to the surface defined by the central path condition (13b) (compare to [64, Equation 9] and [52, Section 4]).

In Lemma 1, we show that for exotic cone \(k \in \llbracket {\bar{K}} \rrbracket \), if \(\pi _k(\omega ) < 1\), then \({\bar{s}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}_k \bigr )\) and \({\bar{z}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}^*_k \bigr )\). This condition is sufficient but not necessary for strict cone feasibility. If it holds for all \(k \in \llbracket {\bar{K}} \rrbracket \), then \(\omega \) is an interior point (by definition) and (13c) is satisfied. From (18), \(\pi _k(\omega )\) can be computed by evaluating the feasibility check, gradient, and Hessian oracles for \(\bar{{\mathcal {K}}}_k\) at \({\bar{s}}_k\).

Lemma 1

Given a point \(\omega \), for each \(k \in \llbracket {\bar{K}} \rrbracket \), \(\pi _k(\omega ) < 1\) implies \({\bar{s}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}_k \bigr )\) and \({\bar{z}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}^*_k \bigr )\).

Proof

We adapt the proof of [56, Lemma 15]. Fix \(\mu = \mu (\omega )\) for convenience, and suppose \(\pi _k(\omega ) < 1\) for exotic cone \(k \in \llbracket {\bar{K}} \rrbracket \). Then by (18), \(\mu > 0\) and \({\bar{s}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}_k \bigr )\). By [56, Theorem 8], \({\bar{s}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}_k \bigr )\) implies \(-g_k ({\bar{s}}_k) \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}^*_k \bigr )\). Let \(f_k\) be the LHSCB for \(\bar{{\mathcal {K}}}_k\), and let \(H^*_k :=\nabla ^2 f^*_k\) denote the Hessian operator for the conjugate \(f^*_k\) (see (3)) of \(f_k\). By [56, Equation 13], \(H^*_k (-g_k ({\bar{s}}_k)) = (H_k ({\bar{s}}_k))^{-1}\), so:

So by [56, Definition 1], \({\bar{z}}_k / \mu \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}^*_k \bigr )\), hence \({\bar{z}}_k \in {{\,\mathrm{int}\,}}\bigl ( \bar{{\mathcal {K}}}^*_k \bigr )\). \(\square \)

We now define a proximity function that aggregates the exotic cone central path proximity values \(\pi _k(\omega ) \ge 0, \forall k \in \llbracket {\bar{K}} \rrbracket \). SY aggregates by taking the \(\ell _2\) norm:

An alternative aggregated proximity uses the \(\ell _{\infty }\) norm (maximum):

Clearly, \(0 \le \pi _k (\omega ) \le \pi _{\ell _\infty }(\omega ) \le \pi _{\ell _2}(\omega ), \forall k \in \llbracket {\bar{K}} \rrbracket \). Both conditions \(\pi _{\ell _2}(\omega ) < 1\) and \(\pi _{\ell _\infty }(\omega ) < 1\) guarantee by Lemma 1 that \(\omega \) is an interior point, however using \(\pi _{\ell _2}\) leads to a more restrictive condition on \(\omega \).

5.3 High level algorithm

We describe a high level algorithm for approximately solving the HSDE. The method starts at the initial interior point \(\omega ^0\) with complementarity gap \(\mu (\omega ^0) = 1\) and approximately tracks the central path trajectory (13) through a series of iterations. It maintains feasibility for the linear equality conditions (13a) and strict cone feasibility conditions (13c), but allows violation of the nonlinear equality conditions (13b). On the ith iteration, the current interior point is \(\omega ^{i-1}\) satisfying \(\pi _k(\omega ^{i-1}) < 1, \forall k \in \llbracket {\bar{K}} \rrbracket \), and the complementarity gap is \(\mu (\omega ^{i-1})\). The method searches for a new point \(\omega ^i\) that maintains the proximity condition \(\pi _k(\omega ^i) < 1, \forall k \in \llbracket {\bar{K}} \rrbracket \) (and hence is an interior point) and either has a smaller complementarity gap \(\mu (\omega ^i) < \mu (\omega ^{i-1})\) or a smaller aggregate proximity value \(\pi (\omega ^i) < \pi (\omega ^{i-1})\) (where \(\pi \) is \(\pi _{\ell _2}\) or \(\pi _{\ell _\infty }\)), or both. As the complementarity gap decreases towards zero, the RHS of (13a) approaches the origin, so the iterates approach a solution of the HSDE (11).

To detect an approximate conic certificate and terminate the iterations, we check whether the current iterate \(\omega \) satisfies any of the following numerical convergence criteria. These conditions use positive tolerance values for feasibility \(\varepsilon _f\), infeasibility \(\varepsilon _i\), absolute gap \(\varepsilon _a\), relative gap \(\varepsilon _r\), and ill-posedness \(\varepsilon _p\). The criteria and default tolerance values are similar to those described by MOSEK in [43, Section 13.3.2] and CVXOPT in [44], and implemented in Alfonso [58]. In Sect. 7.2, we describe the tolerance values we use for computational testing in this paper.

-

Optimality. We terminate with a complementary solution \((x, y, z) / \tau \) approximately satisfying the primal-dual optimality conditions (6b), (6c), (7b), (7c) and (10) if:

$$\begin{aligned} \max \biggl ( \frac{ \Vert A' y + G' z + c \tau \Vert _{\infty } }{ 1 + \Vert c \Vert _{\infty } }, \frac{ \Vert -A x + b \tau \Vert _{\infty } }{ 1 + \Vert b \Vert _{\infty } }, \frac{ \Vert -G x + h \tau - s \Vert _{\infty } }{ 1 + \Vert h \Vert _{\infty } } \biggr ) \le \varepsilon _f \tau , \nonumber \\ \end{aligned}$$(22a)and at least one of the following two conditions holds:

$$\begin{aligned} s' z&\le \varepsilon _a, \end{aligned}$$(22b)$$\begin{aligned} \min ( s' z / \tau , |c' x + b' y + h' z |)&\le \varepsilon _r \max ( \tau , \min ( |c' x |, |b' y + h' z |) ). \end{aligned}$$(22c)Note that (22b) and (22c) are absolute and relative optimality gap conditions respectively.

-

Primal infeasibility. We terminate with a dual improving ray (y, z) approximately satisfying (9) if:

$$\begin{aligned} b' y + h' z < 0, \qquad \Vert A' y + G' z \Vert _{\infty } \le -\varepsilon _i ( b' y + h' z ). \end{aligned}$$(23) -

Dual infeasibility. We terminate with a primal improving ray x approximately satisfying (8) if:

$$\begin{aligned} c' x < 0, \qquad \max ( \Vert A x \Vert _{\infty }, \Vert G x + s \Vert _{\infty } ) \le -\varepsilon _i c' x. \end{aligned}$$(24) -

Ill-posed primal or dual. If \(\tau \) and \(\kappa \) are approximately 0, the primal and dual problem statuses cannot be determined. We terminate with an ill-posed status if:

$$\begin{aligned} \mu (\omega ) \le \varepsilon _p, \qquad \tau \le \varepsilon _p \min (1, \kappa ). \end{aligned}$$(25)

The high level path following algorithm below computes an approximate solution to the HSDE. In Sect. 5.5, we describe specific stepping procedures for Line 5.

5.4 Search directions

At a given iteration of the path following method, let \(\omega \) be the current interior point and fix \(\mu = \mu (\omega )\) for convenience. The stepping procedures we describe in Sect. 5.5 first compute one or more search directions, which depend on \(\omega \). We derive the centering direction in Sect. 5.4.1 and the prediction direction in Sect. 5.4.2. The goal of centering is to step to a point with a smaller aggregate central path proximity than the current point, i.e. to step towards the central path. The goal of prediction is to step to a point with a smaller complementarity gap, i.e. to step closer to a solution of the HSDE. The centering and prediction directions match those used by SY. We associate with each of these directions a new third order adjustment (TOA) direction, which depends on the TOO and helps to correct the corresponding unadjusted direction (which must be computed before the TOA direction). Hence we derive four types of directions here.

Each direction is computed as the solution to a linear system with a structured square block matrix left hand side (LHS) and a particular right hand side (RHS) vector. The LHS, which depends only on \(\omega \) and the problem data, is the same for all four directions at a given iteration. We let \(r :=(r_E, r_1, \ldots , r_{{\bar{K}}}) \in {\mathbb {R}}^{\dim (\omega )}\) represent an RHS, where \(r_E \in {\mathbb {R}}^{n + p + q + 1}\) corresponds to the linear equalities (13a) and \(r_k \in {\mathbb {R}}^{q_k}, \forall k \in \llbracket {\bar{K}} \rrbracket \) corresponds to the nonlinear equalities (13b). The direction \(\delta :=(\delta _x, \delta _y, \delta _z, \delta _\tau , \delta _s, \delta _\kappa ) \in {\mathbb {R}}^{\dim (\omega )}\) corresponding to r is the solution to:

Since E is assumed to have full row rank and each \(H_k\) is positive definite, this square system is nonsingular and hence has a unique solution. In Appendix A, we describe a particular method for solving (26).

5.4.1 Centering

The centering direction \(\delta ^c\) is analogous to the definition of [64, Section 3.2]. It reduces the violation on the central path nonlinear equality condition (13b) (and can be interpreted as a Newton step), while keeping the complementarity gap \(\mu \) (approximately) constant. We denote the centering TOA direction \(\delta ^{ct}\). To maintain feasibility for the linear equality condition (13a), we ensure \(E \delta ^c = E \delta ^{ct} = 0\) in (26a).

Dropping the index \(k \in \llbracket {\bar{K}} \rrbracket \) for conciseness, recall that (13b) expresses \({\bar{z}}+ \mu g({\bar{s}}) = 0\). A first order approximation of this condition gives:

which matches the form of (26b). Hence we let the centering direction \(\delta ^c\) be the solution to:

Similarly, a second order approximation of \({\bar{z}}+ \mu g({\bar{s}}) = 0\) gives:

where (29b) uses the definition of the TOO in (5). Note that the RHSs of (27b) and (29b) differ only by \(\mu \mathrm {T}({\bar{s}}, \delta _{{\bar{s}}})\), which depends on \(\delta _{{\bar{s}}}\). To remove this dependency, we substitute the centering direction \(\delta ^c\), which we assume is already computed, into the RHS of (29b). Hence we let the centering TOA direction \(\delta ^{ct}\), which adjusts the centering direction, be the solution to:

We note that for a rescaling factor \(\alpha \in (0,1)\), the TOA direction corresponding to \(\alpha \delta ^c\) (a rescaling of the centering direction) is \(\alpha ^2 \delta ^{ct}\) (a rescaling of the centering TOA direction).

5.4.2 Prediction

The prediction direction \(\delta ^p\) reduces the complementarity gap and is analogous to the definition of [64, Section 3.1]. We derive \(\delta ^p\) and its corresponding TOA direction \(\delta ^{pt}\) by considering the central path conditions (13) as a dynamical system parametrized by \(\mu > 0\), and differentiating the linear and nonlinear equalities (13a) and (13b).

Differentiating (13a) once gives:

Rescaling (31) by \(-\mu \) and substituting (13a) gives:

Dropping the index \(k \in \llbracket {\bar{K}} \rrbracket \) for conciseness, we differentiate \({\bar{z}}_{\mu } + \mu g({\bar{s}}_{\mu }) = 0\) from (13b) once to get:

Rescaling (33) by \(-\mu \) and substituting \({\bar{z}}_{\mu } = -\mu g({\bar{s}}_{\mu })\) from (13b) gives:

The direction \({\dot{\omega }}_\mu \) is tangent to the central path. Like SY, we interpret the prediction direction as \(\delta ^p = -\mu {\dot{\omega }}_{\mu }\), so (32) and (34) become:

which matches the form (26). So we let \(\delta ^p\) be the solution to:

Differentiating (13a) twice and rescaling by \(\frac{1}{2} \mu ^2\) gives:

Differentiating \({\bar{z}}_{\mu } + \mu g({\bar{s}}_{\mu }) = 0\) twice gives:

Rescaling (38) by \(\frac{1}{2} \mu ^2\) and substituting the TOO definition (5), we have:

We interpret the prediction TOA direction, which adjusts the prediction direction, as \(\delta ^{pt} = \frac{1}{2} \mu ^2 {\ddot{\omega }}\). The RHS of (39b) depends on \({\dot{{\bar{s}}}}_{\mu }\), so we remove this dependency by substituting the prediction direction \(\delta ^p = -\mu {\dot{\omega }}_{\mu }\), which we assume is already computed. Hence using (37) and (39b), we let \(\delta ^{pt}\) be the solution to:

We note that the RHS in (40b) differs from the ‘higher order corrector’ RHS of Dahl and Andersen [21, Equation 16], which has the form \(\frac{1}{2} \nabla ^3 f_k \bigl [ \delta _{{\bar{s}},k}^p, (H_k({\bar{s}}_k))^{-1} \delta _{{\bar{z}},k}^p \bigr ]\). For example, our form does not satisfy all of the properties in [21, Lemmas 3 and 4].

5.5 Stepping procedures

A stepping procedure computes one or more directions from Sect. 5.4 and uses the directions to search for a new interior point. Recall from Line 5 of the high level PDIPM in Sect. 5.3 that on iteration i with current iterate \(\omega ^{i-1}\), Step computes \(\omega ^i\) satisfying \(\pi (\omega ^i) < 1\) and either \(\mu (\omega ^i) < \mu (\omega ^{i-1})\) (prediction) or \(\pi (\omega ^i) < \pi (\omega ^{i-1})\) (centering) or both. In Sect. 5.5.1, we describe a baseline stepping procedure mirroring that of Alfonso, which is an implementation of the SY algorithm with worst-case polynomial time iteration complexity. This procedure, which we call basic, alternates between prediction and centering steps and does not use the TOA directions. In Sects. 5.5.2 to 5.5.5, we describe a sequence of four cumulative enhancements to the basic procedure. The goal is to improve iteration counts or per-iteration computational efficiency in practice, without regard for theoretical iteration complexity guarantees. Our computational testing in Sect. 7 assesses the value of these enhancements on a diverse set of benchmark instances.

5.5.1 Basic stepping procedure

First, we decide whether to perform a centering step or a prediction step. If the current iterate \(\omega ^{i-1}\) (at the ith iteration) is very close to the central path, i.e. if the sum proximity (20) does not exceed \(\eta = 0.0332\), or if the most recent \(N = 4\) steps have all been centering steps, then we compute the prediction direction \(\delta ^p\) (note these parameter values are taken directly from Alfonso and are based on the theoretical analysis of [56]). Otherwise, we compute the centering direction \(\delta ^c\) from (28). Letting j be the number of consecutive centering steps taken immediately before the current ith iteration, the search direction is:

Next, we perform a backtracking line search in the direction \(\delta \). The search finds a step length \({\hat{\alpha }} \in (0, 1)\) from a fixed schedule of decreasing values \({\mathcal {A}} = \{ \alpha _l \}_{l \in \llbracket L \rrbracket }\), where \(L = 18\), \(\alpha _1 = 0.9999\), and \(\alpha _L = 0.0005\). The next iterate \(\omega ^i = \omega ^{i-1} + {\hat{\alpha }} \delta \) becomes the first point in the backtracking line search that satisfies \(\pi _{\ell _2}(\omega ^i) \le \beta _1\) for \(\beta _1 = 0.2844\), which guarantees interiority by Lemma 1 (note \(\beta _1\) is again taken directly from Alfonso and is based on the theoretical analysis of [56]). If the backtracking search terminates without a step length satisfying the proximity condition (i.e. \(\alpha _L\) is too large), the PDIPM algorithm terminates without a solution. In Appendix B we discuss our implementation of the proximity check that we run for each candidate point in the backtracking search.

The basic stepping procedure is summarized as follows. Note the centering step count j is initialized to zero before the first iteration \(i = 1\). Since \(\omega ^0\) is exactly on the central path (i.e. the proximity is zero), the first iteration uses a prediction step.

5.5.2 Less restrictive proximity

The basic stepping procedure in Sect. 5.5.1 requires iterates to remain in close proximity to the central path and usually only takes prediction steps from iterates that are very close to the central path. Although conservative proximity conditions are used to prove polynomial iteration complexity in [56], they may be too restrictive from the perspective of practical performance. To allow prediction steps from a larger neighborhood of the central path, we use the \(\pi _{\ell _\infty }\) proximity measure from (21) instead of \(\pi _{\ell _2}\) to compute the proximity of \(\omega ^{i-1}\), though we do not change the proximity bound \(\eta \). To allow the step length to be as large as possible, we use \(\pi _{\ell _\infty }\) instead of \(\pi _{\ell _2}\) for the backtracking search proximity checks and we replace \(\beta _1\) from Sect. 5.5.1 by a larger proximity bound \(\beta _2 = 0.99\). By Lemma 1, \(\beta _2 < 1\) guarantees interiority, and our offline sensitivity analysis on \(\beta _2\) suggests that 0.99 is a reasonable choice.Footnote 4

The prox stepping procedure, which enhances the basic stepping procedure by relaxing the proximity conditions somewhat, is summarized as follows.

5.5.3 Third order adjustments

We modify the prox stepping procedure in Sect. 5.5.2 to incorporate the new TOA directions associated with the prediction and centering directions. In symmetric conic IPMs, it is common to compute a step length in the unadjusted prediction direction, use this step length to compute an adjusted direction, and then compute a step length in this final direction (see e.g. [66, Section 5.1] for CVXOPT’s approach using the Mehrotra correction). Our approach is similar.

After deciding whether to predict or center (using the same criteria as prox), we compute the unadjusted direction \(\delta ^u\) (i.e. \(\delta ^p\) or \(\delta ^c\)) and its associated TOA direction \(\delta ^t\) (i.e. \(\delta ^{pt}\) or \(\delta ^{ct}\)). We perform a backtracking line search in direction \(\delta ^u\) (like prox) and use this unadjusted step length \({\hat{\alpha }}^u \in (0,1)\) to scale down the TOA direction, letting the adjusted direction be \(\delta ^u + {\hat{\alpha }}^u \delta ^t\). We perform a second backtracking line search in this final direction to compute the final step length \({\hat{\alpha }}\), using the same techniques and proximity condition as the first line search. If we think of \({\hat{\alpha }}^u\) as an approximation of \({\hat{\alpha }}\), then essentially the final step applies an adjustment of \({\hat{\alpha }}^2 \delta ^t\) to \({\hat{\alpha }} \delta ^u\). Our derivations of the adjustment directions in Sect. 5.4 (particularly the centering direction) suggest that this is a reasonable heuristic for adjustment.

The TOA stepping procedure, which enhances the prox stepping procedure by incorporating the TOA directions, is summarized as follows.

5.5.4 Curve search

The TOA stepping procedure in Sect. 5.5.3 performs two backtracking line searches, which can be quite expensive. We propose using a single backtracking search along a curve that is quadratic in the step parameter \(\alpha \) and linear in the unadjusted and TOA directions. Recall from Line 12 of the TOA procedure that we compute a direction \(\delta \) as a linear function of the step parameter from the first line search. Substituting this \(\delta \) function into the usual linear trajectory gives the curved trajectory \(\omega ^{i-1} + \alpha ( \delta ^u + \alpha \delta ^t )\) for \(\alpha \in (0,1)\), where \(\delta ^u\) and \(\delta ^t\) are the unadjusted and TOA directions (as in the TOA procedure). Intuitively, a backtracking search along this curve achieves a more dynamic rescaling of the TOA direction.

The curve stepping procedure, which enhances the TOA stepping procedure by using a search on a curve instead of two line searches, is summarized as follows.

5.5.5 Combined directions

Unlike [59, 64], most conic PDIPMs combine the prediction and centering phases (e.g. [21, 66]). We propose using a single search on a curve that is quadratic in the step parameter \(\alpha \) and linear in all four directions \(\delta ^c, \delta ^{ct}, \delta ^p, \delta ^{pt}\) from Sect. 5.5.3. Intuitively, we can step further in a convex combination of the prediction and centering directions than we can in just the prediction direction. In practice, a step length of one is usually ideal for the centering phase, so we can imagine performing a backtracking search from the point obtained from a pure prediction step (with step length one) towards the point obtained from a pure centering step, terminating when we are close enough to the centering point to satisfy the proximity condition. This approach fundamentally differs from the previous procedures we have described because the search trajectory does not finish at the current iterate \(\omega ^{i-1}\). If \({\hat{\omega }}^p (\alpha )\) and \({\hat{\omega }}^c (\alpha )\) are the prediction and centering curve search trajectories from Line 11 of the curve procedure, then we define the combined trajectory as \({\hat{\omega }} (\alpha ) = {\hat{\omega }}^p (\alpha ) + {\hat{\omega }}^c (1 - \alpha )\). Note that \(\alpha = 1\) corresponds to a full step in the adjusted prediction direction \(\delta ^p + \delta ^{pt}\), and \(\alpha = 0\) corresponds to a full step in the adjusted centering direction \(\delta ^c + \delta ^{ct}\).

The comb stepping procedure, which enhances the curve stepping procedure by combining the prediction and centering phases, is summarized as follows. Note that unlike the previous procedures, there is no parameter j counting consecutive centering steps. Occasionally in practice, the backtracking search on Line 4 below fails to find a positive step value, in which case we perform a centering step according to Lines 11 to 13 of the curve procedure.

6 Oracles for predefined exotic cones

Below we list 23 exotic cone types that we have predefined through Hypatia’s generic cone interface (see Sect. 3). Each of these cones is represented in the benchmark set of conic instances that we introduce in Sect. 7.1. Recall that we write any exotic cone \({\mathcal {K}}\) in vectorized form, i.e. as a subset of \({\mathbb {R}}^q\), where \(q = \dim ({\mathcal {K}}) \ge 1\) is the cone dimension. For cones typically defined using symmetric matrices, we use the standard svec vectorization (see Sect. 2) to ensure the vectorized cone is proper, to preserve inner products, and to simplify the dual cone definition. Each cone is parametrized by at least one dimension and several cones have additional parameters such as numerical data; for convenience, we drop these parameters from the symbols we use to represent cone types. For each cone, we define the LHSCB Hypatia uses below, and we list the associated barrier parameter \(\nu \) in Table 1. For several cones, we have implemented additional variants over complex numbers (for example, a Hermitian PSD cone), but we omit these definitions here for simplicity.

-

Nonnegative cone. \({\mathcal {K}}_{\ge }:={\mathbb {R}}_{\ge }^d\) is the (self-dual) nonnegative real vectors (note for \(d > 1\), \({\mathcal {K}}_{\ge }\) is not a primitive cone). We use the LHSCB \(f(w) = -\log (w)\) [51, Section 2.1].

-

PSD cone. \({\mathcal {K}}_{\succeq }:=\bigl \{ w \in {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} : {{\,\mathrm{mat}\,}}(w) \in {\mathbb {S}}^d_{\succeq } \bigr \}\) is the (self-dual) PSD matrices of side dimension d. We use the LHSCB \(f(w) = -{{\,\mathrm{logdet}\,}}({{\,\mathrm{mat}\,}}(w))\) [51, Section 2.2].

-

Doubly nonnegative cone. \({\mathcal {K}}_{{{\,\mathrm{DNN}\,}}}:={\mathcal {K}}_{\ge }\cap {\mathcal {K}}_{\succeq }\) is the PSD matrices with all nonnegative entries of side dimension d. We use the LHSCB \(f(w) = -{{\,\mathrm{logdet}\,}}({{\,\mathrm{mat}\,}}(w)) - {\textstyle \sum _{j \in \llbracket d \rrbracket , i \in \llbracket j-1 \rrbracket }} \log ({{\,\mathrm{mat}\,}}(w)_{i,j})\).

-

Sparse PSD cone. \({\mathcal {K}}_{{{\,\mathrm{sPSD}\,}}}\) is the PSD matrices of side dimension s with a fixed sparsity pattern \({\mathcal {S}}\) containing \(d \ge s\) nonzeros (including all diagonal elements); see Appendix C.4. The dual cone \({\mathcal {K}}_{{{\,\mathrm{sPSD}\,}}}^*\) is the symmetric matrices with pattern \({\mathcal {S}}\) for which there exists a PSD completion, i.e. an assignment of the elements not in \({\mathcal {S}}\) such that the full matrix is PSD. For simplicity, the complexity estimates in Table 1 assume the nonzeros are grouped under \(J \ge 1\) supernodes, each containing at most l nodes, and the monotone degree of each node is no greater than a constant D [4]. We use the LHSCB in Appendix C.4.

-

Linear matrix inequality cone. \({\mathcal {K}}_{{{\,\mathrm{LMI}\,}}}:=\bigl \{ w \in {\mathbb {R}}^d : {\textstyle \sum _{i \in \llbracket d \rrbracket }} w_i P_i \in {\mathbb {S}}^s_{\succeq } \bigr \}\) are the vectors for which the matrix pencil of d matrices \(P_i \in {\mathbb {S}}^s, \forall i \in \llbracket d \rrbracket \) is PSD. We assume \(P_1 \succ 0\) so that we can use the initial interior point \(e_1\). We use the LHSCB in Appendix C.2.

-

Infinity norm cone. \(\mathcal {K}_{\ell _{\infty }} :=\{ (u, w) \in {\mathbb {R}}_{\ge } \times {\mathbb {R}}^d : u \ge \Vert w \Vert _\infty \}\) is the epigraph of the \(\ell _\infty \) norm on \({\mathbb {R}}^d\). The dual cone \(\mathcal {K}_{\ell _\infty }^*\) is the epigraph of the \(\ell _1\) norm. We use the LHSCB \(f(u, w) = (d - 1) \log (u) - {\textstyle \sum _{i \in \llbracket d \rrbracket }} \log (u^2 - w_i^2)\) [32, Section 7.5].

-

Euclidean norm cone. \(\mathcal {K}_{\ell _2} :=\{ (u, w) \in {\mathbb {R}}_{\ge } \times {\mathbb {R}}^d : u \ge \Vert w \Vert \}\) is the (self-dual) epigraph of the \(\ell _2\) norm on \({\mathbb {R}}^d\) (AKA second-order cone). We use the LHSCB \(f(u, w) = -\log (u^2 - \Vert w \Vert ^2)\) [51, Section 2.3].

-

Euclidean norm square cone. \({\mathcal {K}}_{{{\,\mathrm{sqr}\,}}}:=\{ (u, v, w) \in {\mathbb {R}}_{\ge } \times {\mathbb {R}}_{\ge } \times {\mathbb {R}}^d : 2 u v \ge \Vert w \Vert ^2 \}\) is the (self-dual) epigraph of the perspective of the square of the \(\ell _2\) norm on \({\mathbb {R}}^d\) (AKA rotated second-order cone). We use the LHSCB \(f(u, v, w) = -\log (2 u v - \Vert w \Vert ^2)\) [51, Section 2.3].

-

Spectral norm cone. \(\mathcal {K}_{\ell _{{{\,\mathrm{spec}\,}}}} :=\{ (u, w) \in {\mathbb {R}}_{\ge } \times {\mathbb {R}}^{r s} : u \ge \sigma _1(W) \}\), where \(W :={{\,\mathrm{mat}\,}}(w)\) and \(\sigma _1\) is the largest singular value function, is the epigraph of the spectral norm on \({\mathbb {R}}^{r \times s}\), assuming \(r \le s\) without loss of generality. Similarly, \(\mathcal {K}_{\ell _{{{\,\mathrm{spec}\,}}}}^*\) is the epigraph of the matrix nuclear norm (i.e. the sum of singular values). We use the LHSCB \(f(u, w) = (r - 1) \log (u) - {{\,\mathrm{logdet}\,}}(u^2 I(r) - W W')\) [49, Section 5.4.6].

-

Matrix square cone. \({\mathcal {K}}_{{{\,\mathrm{matsqr}\,}}}:=\bigl \{ (u, v, w) \in {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(r)} \times {\mathbb {R}}_{\ge } \times {\mathbb {R}}^{r s} : U \in {\mathbb {S}}^r_{\succeq }, 2 U v \succeq W W' \bigr \}\), where \(U :={{\,\mathrm{mat}\,}}(u)\) and \(W :={{\,\mathrm{mat}\,}}(w) \in {\mathbb {R}}^{r \times s}\), is the homogenized symmetric matrix epigraph of the symmetric outer product, assuming \(r \le s\) without loss of generality [33]. We use the LHSCB \(f(u, v, w) = (r - 1) \log (v) - {{\,\mathrm{logdet}\,}}(2 v U - W W')\) [5].

-

Generalized power cone. \({\mathcal {K}}_{{{\,\mathrm{gpow}\,}}}:=\bigl \{ (u, w) \in {\mathbb {R}}^r_{\ge } \times {\mathbb {R}}^s : {\textstyle \prod _{i \in \llbracket r \rrbracket }} u_i^{\alpha _i} \ge \Vert w \Vert \bigr \}\), parametrized by exponents \(\alpha \in {\mathbb {R}}^r_>\) with \(e' \alpha = 1\), is the generalized power cone [16, Section 3.1.2]. We use the LHSCB \(f(u, w) = -\log \bigl ( {\textstyle \prod _{i \in \llbracket r \rrbracket }} u_i^{2 \alpha _i} - \Vert w \Vert ^2 \bigr ) - {\textstyle \sum _{i \in \llbracket r \rrbracket }} (1 - \alpha _i) \log (u_i)\) [62].

-

Power mean cone. \({\mathcal {K}}_{{{\,\mathrm{pow}\,}}}:=\bigl \{ (u, w) \in {\mathbb {R}}\times {\mathbb {R}}^d_{\ge } : u \le {\textstyle \prod _{i \in \llbracket d \rrbracket }} w_i^{\alpha _i} \bigr \}\), parametrized by exponents \(\alpha \in {\mathbb {R}}^d_>\) with \(e' \alpha = 1\), is the hypograph of the power mean on \({\mathbb {R}}_{\ge }^d\). We use the LHSCB \(f(u, w) = -\log \bigl ( {\textstyle \prod _{i \in \llbracket d \rrbracket }} w_i^{\alpha _i} - u \bigr ) - {\textstyle \sum _{i \in \llbracket d \rrbracket }} \log (w_i)\) [48, Section 5.4.7].

-

Geometric mean cone. \({\mathcal {K}}_{{{\,\mathrm{geo}\,}}}\) is the hypograph of the geometric mean on \({\mathbb {R}}_{\ge }^d\), a special case of \({\mathcal {K}}_{{{\,\mathrm{pow}\,}}}\) with equal exponents.

-

Root-determinant cone. \({\mathcal {K}}_{{{\,\mathrm{rtdet}\,}}}:=\bigl \{ (u, w) \in {\mathbb {R}}\times {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} : W \in {\mathbb {S}}^d_{\succeq }, u \le (\det (W))^{1/d} \bigr \}\), where \(W :={{\,\mathrm{mat}\,}}(w)\), is the hypograph of the dth-root-determinant on \({\mathbb {S}}_{\succeq }^d\). We use the LHSCB \(f(u, w) = -\log ((\det (W))^{1 / d} - u) - {{\,\mathrm{logdet}\,}}(W)\) [18, Proposition 7.1].

-

Logarithm cone. \({\mathcal {K}}_{\log }:={{\,\mathrm{cl}\,}}\bigl \{ (u, v, w) \in {\mathbb {R}}\times {\mathbb {R}}_> \times {\mathbb {R}}^d_> : u \le {\textstyle \sum _{i \in \llbracket d \rrbracket }} v \log ( w_i / v ) \bigr \}\) is the hypograph of the perspective of the sum of logarithms on \({\mathbb {R}}_>^d\). We use the LHSCB \(f(u, v, w) = -\log \bigl ( {\textstyle \sum _{i \in \llbracket d \rrbracket }} v \log (w_i / v) - u \bigr ) - \log (v) - {\textstyle \sum _{i \in \llbracket d \rrbracket }} \log (w_i)\) [18, Proposition 6.1].

-

Log-determinant cone. \({\mathcal {K}}_{{{\,\mathrm{logdet}\,}}}:={{\,\mathrm{cl}\,}}\bigl \{ (u, v, w) \in {\mathbb {R}}\times {\mathbb {R}}_> \times {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} : W \in {\mathbb {S}}^d_{\succ }, u \le v {{\,\mathrm{logdet}\,}}( W / v ) \bigr \}\), where \(W :={{\,\mathrm{mat}\,}}(w)\), is the hypograph of the perspective of the log-determinant on \({\mathbb {S}}_{\succ }^d\). We use the LHSCB \(f(u, v, w) = -\log (v {{\,\mathrm{logdet}\,}}(W / v) - u) - \log (v) - {{\,\mathrm{logdet}\,}}(W)\) [18, Proposition 6.1].

-

Separable spectral function cone. \({\mathcal {K}}_{{{\,\mathrm{sepspec}\,}}}:={{\,\mathrm{cl}\,}}\{ (u, v, w) \in {\mathbb {R}}\times {\mathbb {R}}_> \times {{\,\mathrm{int}\,}}({\mathcal {Q}}) : u \ge v \varphi (w / v) \}\), where \({\mathcal {Q}}\) is \({\mathcal {K}}_{\ge }\) or \({\mathcal {K}}_{\succeq }\) (a cone of squares of a Jordan algebra), is the epigraph of the perspective of a convex separable spectral function \(\varphi : {{\,\mathrm{int}\,}}({\mathcal {Q}}) \rightarrow {\mathbb {R}}\), such as the sum or trace of the negative logarithm, negative entropy, or power in (1, 2] (see [18] for more details). The complexity estimates in Table 1 depend on whether \({\mathcal {Q}}\) is \({\mathcal {K}}_{\ge }\) or \({\mathcal {K}}_{\succeq }\). We use the LHSCB \(f(u, v, w) = -\log (u - v \varphi (w / v)) - \log (v) - {{\,\mathrm{logdet}\,}}(w)\) [18, Proposition 6.1].

-

Relative entropy cone. \({\mathcal {K}}_{{{\,\mathrm{relent}\,}}}:={{\,\mathrm{cl}\,}}\bigl \{ (u, v, w) \in {\mathbb {R}}\times {\mathbb {R}}^d_> \times {\mathbb {R}}^d_> : u \ge {\textstyle \sum _{i \in \llbracket d \rrbracket }} w_i \log (w_i / v_i) \bigr \}\) is the epigraph of vector relative entropy. We use the LHSCB \(f(u, v, w) = -\log \bigl ( u - {\textstyle \sum _{i \in \llbracket d \rrbracket }} w_i \log (w_i / v_i) \bigr ) - {\textstyle \sum _{i \in \llbracket d \rrbracket }} (\log (v_i) + \log (w_i))\) [36, Appendix E].

-

Matrix relative entropy cone. \({\mathcal {K}}_{{{\,\mathrm{matrelent}\,}}} :={{\,\mathrm{cl}\,}}\bigl \{ (u, v, w) \in {\mathbb {R}}\times {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} \times {\mathbb {R}}^{{{\,\mathrm{sd}\,}}(d)} : V \in {\mathbb {S}}^d_{\succ }, W \in {\mathbb {S}}^d_{\succ }, u \ge {{\,\mathrm{tr}\,}}(W (\log (W) - \log (V))) \bigr \}\), where \(V :={{\,\mathrm{mat}\,}}(v)\) and \(W :={{\,\mathrm{mat}\,}}(w)\), is the epigraph of matrix relative entropy. We use the LHSCB \(f(u, v, w) = -\log (u - {{\,\mathrm{tr}\,}}(W (\log (W) - \log (V)))) - {{\,\mathrm{logdet}\,}}(V) - {{\,\mathrm{logdet}\,}}(W)\) [27, Theorem 1.5].

-

Weighted sum-of-squares (WSOS) cones. An interpolant basis represents a polynomial implicitly by its evaluations at a fixed set of d points. Given a basic semialgebraic domain defined by r polynomial inequalities, the four WSOS cones below are parameterized by matrices \(P_l \in {\mathbb {R}}^{d \times s_l}\) for \(l \in \llbracket r \rrbracket \). Each \(P_l\) is constructed by evaluating \(s_l\) independent polynomials (columns) at the d points (rows), following [57]. For simplicity, the complexity estimates in Table 1 assume \(s_l = s, \forall l \in \llbracket r \rrbracket \). Note that \(s < d \le s^2\). We define \({\mathcal {K}}_{{{\,\mathrm{SOS}\,}}}\) and \({\mathcal {K}}_{{{\,\mathrm{matSOS}\,}}}\) in [20], and \({\mathcal {K}}_{\ell _1 \! {{\,\mathrm{SOS}\,}}}\) and \({\mathcal {K}}_{\ell _2 \! {{\,\mathrm{SOS}\,}}}\) in [35, Equations 2.7 and 4.9].

We use LHSCBs for the dual cones of these WSOS cones. For \({\mathcal {K}}_{{{\,\mathrm{SOS}\,}}}^*\) and \({\mathcal {K}}_{{{\,\mathrm{matSOS}\,}}}^*\), we discuss the LHSCBs in Appendix C.3. For \({\mathcal {K}}_{\ell _1 \! {{\,\mathrm{SOS}\,}}}^*\) and \({\mathcal {K}}_{\ell _2 \! {{\,\mathrm{SOS}\,}}}^*\), the LHSCBs require more complex notation, so we refer the reader to [35].

-

Scalar WSOS cone. \({\mathcal {K}}_{{{\,\mathrm{SOS}\,}}}\) is a cone of polynomials that are guaranteed to be nonnegative pointwise on the domain.

-

Symmetric matrix WSOS cone. \({\mathcal {K}}_{{{\,\mathrm{matSOS}\,}}}\) is a cone of polynomial symmetric matrices (in an svec-like format) of side dimension t that are guaranteed to belong to \({\mathbb {S}}_{\succeq }\) pointwise on the domain. We let \(m :=s t + d\) in Table 1 for succinctness.

-

\(\ell _1\) epigraph WSOS cone. \({\mathcal {K}}_{\ell _1 \! {{\,\mathrm{SOS}\,}}}\) is a cone of polynomial vectors of length \(1 + t\) that are guaranteed to belong to \(\mathcal {K}_{\ell _\infty }^*\) pointwise on the domain.

-

\(\ell _2\) epigraph WSOS cone. \({\mathcal {K}}_{\ell _2 \! {{\,\mathrm{SOS}\,}}}\) is a cone of polynomial vectors of length \(1 + t\) that are guaranteed to belong to \(\mathcal {K}_{\ell _2}\) pointwise on the domain.

For each cone, we have an analytic form for the feasibility check, gradient, Hessian, and TOO oracles defined in Sect. 3. That is, we always avoid iterative numerical procedures such as optimization, which are typically slow, numerically unstable, and require tuning. Hypatia’s algorithm always evaluates the feasibility check before the gradient, Hessian, and TOO (which are only defined at strictly feasible points), and the gradient is evaluated before the Hessian and TOO. For most of these cones, the feasibility check and gradient oracles compute values and factorizations that are also useful for computing the Hessian and TOO, so this data is cached in the cone data structures and reused where possible. In Table 1, we estimate the time complexities (ignoring constants) of these four oracles for each cone, counting the cost of cached values and factorizations only once (for the oracle that actually computes them). Table 1 shows that the TOO is never more expensive than the feasibility check, gradient, and Hessian oracles (i.e. the oracles needed by SY). Indeed, our computational results in Sect. 7.3 demonstrate that the TOO is very rarely an algorithmic bottleneck in practice.

Our TOO in (5) is distinct from the ‘higher order corrector’ terms proposed by Mehrotra [41] or Dahl and Andersen [21]. The method of [41] only applies to symmetric cones, and the technique in [21] is tested only for the standard exponential cone. Compared to the third order term proposed by Dahl and Andersen [21], our TOO has a simpler and more symmetric structure, as it relies on only one direction \(\delta _{{\bar{s}}}\) rather than two. Like the gradient and Hessian oracles, our TOO is additive for sums of LHSCBs, which can be useful for cones (such as \({\mathcal {K}}_{{{\,\mathrm{DNN}\,}}}\) and \({\mathcal {K}}_{{{\,\mathrm{SOS}\,}}}^*\)) that are defined as intersections of other cones. We leverage these properties to obtain fast and numerically stable TOO implementations.

To illustrate, in Appendix C.1 we define LHSCBs and derive efficient TOO procedures for a class of cones that can be characterized as intersections of slices of the PSD cone \({\mathcal {K}}_{\succeq }\). We consider \({\mathcal {K}}_{{{\,\mathrm{LMI}\,}}}\) in Appendix C.2 and \({\mathcal {K}}_{{{\,\mathrm{SOS}\,}}}^*\) and \({\mathcal {K}}_{{{\,\mathrm{matSOS}\,}}}^*\) in Appendix C.3. In Appendix C.4, we handle \({\mathcal {K}}_{{{\,\mathrm{sPSD}\,}}}\) by differentiating a procedure from [4] for computing Hessian products. In Appendix C.5 we also show how to compute the TOO for \({\mathcal {K}}_{\ell {_2}}\) and \({\mathcal {K}}_{{{\,\mathrm{sqr}\,}}}\). In [18], we derive efficient TOO procedures for a class of spectral function cones on positive domains (\({\mathcal {K}}_{{{\,\mathrm{sepspec}\,}}}\), \({\mathcal {K}}_{\log }\), \({\mathcal {K}}_{{{\,\mathrm{logdet}\,}}}\), \({\mathcal {K}}_{{{\,\mathrm{geo}\,}}}\), \({\mathcal {K}}_{{{\,\mathrm{rtdet}\,}}}\)).

7 Computational testing

In Sect. 7.1, we introduce a diverse set of exotic conic benchmark instances generated from a variety of applied examples. In Sect. 7.2, we describe our methodology for comparing the stepping procedures from Sect. 5.5, and in Sect. 7.3 we examine our computational results.

7.1 Exotic conic benchmark set

We generate 379 instances (in our primal general form (6)) from 37 applied examples in Hypatia’s examples folder. All instances are primal-dual feasible except for 12 that are primal infeasible and one that is dual infeasible. For most examples, we construct multiple formulations using different predefined exotic cones from the list in Sect. 6. Each cone from this list appears in at least one instance, so we consider our benchmark set to be the most diverse collection of conic instances available.

We generate most instances using JuMP, but for some we use Hypatia’s native model interface. Due to the size of some instances and the lack of a standard instance storage format recognizing our cone types, we generate all instances on the fly in Julia. For instances that use random data, we set random seeds to ensure reproducibility. Figure 1 shows the distributions of instance dimensions and exotic cone counts. All instances have at least one cone (note any \({\mathcal {K}}_{\ge }\) cones are concatenated together, so \({\mathcal {K}}_{\ge }\) is counted at most once) and take at least one iteration to solve with Hypatia.

Histograms summarizing the benchmark instances in the primal conic form (6). Instance size (log scale) is the sum of the primal variable, equality, and conic constraint dimensions. Exotic cone count (log scale) is the number of exotic cones comprising the Cartesian product cone

Below we briefly introduce each example. In Table 2, we summarize for each example the number of corresponding instances and the cone types represented in at least one of the instances. We do not distinguish dual cones and primal cones in this summary (for example, instances that use \(\mathcal {K}_{\ell _\infty }^*\) are only listed as using \({\mathcal {K}}_{\ell {_\infty }}\)). For some examples, we describe a subset of formulations in [18, 20, 35]. Our benchmark set includes ten instances from CBLIB (a conic benchmark instance library, see [30]). We chose to avoid running a larger sample of instances from CBLIB so that the relatively few cone types supported by CBLIB version 3 are not over-represented in our benchmark set.

-

Central polynomial matrix. Minimize a spectral function of a gram matrix of a polynomial.

-

Classical-quantum capacity. Compute the capacity of a classical-to-quantum channel (adapted from [26, Section 3.1]).

-

Condition number. Minimize the condition number of a matrix pencil subject to a linear matrix inequality (adapted from [10, Section 3.2]).

-

Contraction analysis. Find a contraction metric that guarantees global stability of a dynamical system (adapted from [6, Section 5.3]). Six instances are primal infeasible.

-

Convexity parameter. Find the strong convexity parameter of a polynomial function over a domain.

-

Covariance estimation. Estimate a covariance matrix that satisfies some given prior information and minimizes a given convex spectral function.

-

Density estimation. Find a valid polynomial density function maximizing the likelihood of a set of observations (compare to [55, Section 4.3]; see [20, Section 5.6]).

-

Discrete maximum likelihood. Maximize the likelihood of some observations at discrete points, subject to the probability vector being close to a uniform prior.

-

D-optimal design. Solve a D-optimal experiment design problem, i.e. maximize the determinant of the information matrix subject to side constraints (adapted from [11, Section 7.5]; see [20, Section 5.4]).

-

Entanglement-assisted capacity. Compute the entanglement-assisted classical capacity of a quantum channel (adapted from [26, Section 3.2]).

-

Experiment design. Solve a general experiment design problem that minimizes a given convex spectral function of the information matrix subject to side constraints (adapted from [11, Section 7.5]).

-

Linear program. Solve a simple linear program.

-

Lotka-Volterra. Find an optimal controller for a Lotka-Volterra model of population dynamics (adapted from [38, Section 7.2]).

-

Lyapunov stability. Minimize an upper bound on the root mean square gain of a dynamical system (adapted from [10, Section 6.3.2] and [9, Page 6]).

-

Matrix completion. Complete a rectangular matrix by minimizing the nuclear norm and constraining the missing entries (compare to [1, Equation 8]; see [20, Section 5.2]).

-

Matrix quadratic. Find a rectangular matrix that minimizes a linear function and satisfies a constraint on the outer product of the matrix.

-

Matrix regression. Solve a multiple-output (or matrix) regression problem with regularization terms, such as \(\ell _1\), \(\ell _2\), or nuclear norm (see [20, Section 5.3]).

-

Maximum volume hypercube. Find a maximum volume hypercube (with edges parallel to the axes) inside a given polyhedron or ellipsoid (adapted from [42, Section 4.3.2]).

-

Nearest correlation matrix. Compute the nearest correlation matrix in the quantum relative entropy sense (adapted from [28]).

-

Nearest polynomial matrix. Given a symmetric matrix of polynomials H, find a polynomial matrix Q that minimizes the sum of the integrals of its elements over the unit box and guarantees \(Q - H\) is pointwise PSD on the unit box.

-

Nearest PSD matrix. Find a sparse PSD matrix or a PSD-completable matrix (with a given sparsity pattern) with constant trace that maximizes a linear function (adapted from [65]).

-

Nonparametric distribution. Given a random variable taking values in a finite set, compute the distribution minimizing a given convex spectral function over all distributions satisfying some prior information.

-

Norm cone polynomial. Given a vector of polynomials, check a sufficient condition for pointwise membership in \(\mathcal {K}_{\ell _\infty }^*\). Four instances are primal infeasible.

-

Polynomial envelope. Find a polynomial that closely approximates, over the unit box, the lower envelope of a given list of polynomials (see [57, Section 7.2.1]).

-

Polynomial minimization. Compute a lower bound for a given polynomial over a given semialgebraic set (see [57, Section 7.3.1] and [20, Section 5.5]). Some instances use polynomials with known optimal values from [13].

-

Polynomial norm. Find a polynomial that, over the unit box, has minimal integral and belongs pointwise to the epigraph of the \(\ell _1\) or \(\ell _2\) norm of other given polynomials (see [35]).

-

Portfolio. Maximize the expected returns of a stock portfolio and satisfy various risk constraints (see [20, Section 5.1]).

-

Region of attraction. Find the region of attraction of a polynomial control system (see [34, Section 9.1]).

-

Relative entropy of entanglement. Compute a lower bound on relative entropy of entanglement with a positive partial transpose relaxation (adapted from [26, Section 4]).

-

Robust geometric programming. Bound the worst-case optimal value of an uncertain signomial function with a given coefficient uncertainty set (adapted from [15, Equation 39]).

-

Semidefinite polynomial matrix. Check a sufficient condition for global convexity of a given polynomial. Two instances are primal infeasible and one is dual infeasible.

-

Shape constrained regression. Given a dataset, fit a polynomial function that satisfies shape constraints such as monotonicity or convexity over a domain (see [20, Section 5.7]). Several instances use real datasets from [40].

-

Signomial minimization. Compute a global lower bound for a given signomial function (see [45]). Several instances use signomials with known optimal values from [14, 45].

-

Sparse LMI. Optimize over a simple linear matrix inequality with sparse data.

-

Sparse principal components. Solve a convex relaxation of the problem of approximating a symmetric matrix by a rank-one matrix with a cardinality-constrained eigenvector (see [22, Section 2]).

-

Stability number. Given a graph, solve for a particular strengthening of the theta function towards the stability number (adapted from [39, Equation 2.4]).

7.2 Methodology

We can assess the practical performance of a stepping procedure on a given benchmark instance according to several metrics: whether the correct conic certificate (satisfying our numerical tolerances, discussed below) is found, and if so, the PDIPM iteration count and solve time. Across the benchmark set, we compare performance between consecutive pairs of the five stepping procedures outlined in Sect. 5.5.

-

basic. The basic prediction or centering stepping procedure without any enhancements; described in Sect. 5.5.1, this is similar to the method in Alfonso solver [59], which is a practical implementation of the algorithm in [56, 64].

-

prox. The basic procedure modified to use a less restrictive central path proximity condition; described in Sect. 5.5.2.

-