Abstract

In this paper, we introduce two perturbations, driven by two correlated Brownian motions, into the classical deterministic susceptible-infected-susceptible (SIS) epidemic model. Based on previous work by Gray et al. (SIAM J Appl Math 71(3):876–902, 2011) and Hening and Nguyen (J Math Biol 77:135–163, 2017. https://doi.org/10.1007/s00285-017-1192-8), we formulate the perturbed model as a stochastic differential equation for the number of infected individuals. We then state conditions for extinction and persistence of the disease and illustrate the results with computer simulations.


1 Introduction

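Humanity has long fought against deadly diseases, and epidemic models are nowadays among the most common tools for studying population dynamics in epidemic problems. For example, the classic Kermack–McKendrick model [1] is adequate for describing simple epidemics in which infected individuals acquire immunity after recovery, while the classical susceptible-infected-susceptible (SIS) model (1) describes the transmission of diseases that confer no immunity, so that cured individuals become susceptible again:

\[
\begin{cases}
\dfrac{\mathrm{d}S(t)}{\mathrm{d}t}=\mu N-\beta S(t)I(t)+\gamma I(t)-\mu S(t),\\[6pt]
\dfrac{\mathrm{d}I(t)}{\mathrm{d}t}=\beta S(t)I(t)-(\mu+\gamma)I(t),
\end{cases}\tag{1}
\]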

with initial values \(I(0)=I_0\in (0,N)\) and \(S(0)=N-I_0\). Here S(t) and I(t) are the numbers of susceptible and infected individuals at time t, and \(N=S(t)+I(t)\) is the total size of the population in which the disease is found. \(\mu \) is the per capita death rate, \(\gamma \) is the rate at which infected individuals are cured, and \(\beta \) is the per capita disease contact rate.
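As a quick illustration of the deterministic dynamics, the Python sketch below (the paper's own computations use R) integrates the I-equation of (1), with \(S=N-I\), by a forward Euler step. The function names and parameter values are hypothetical and chosen only for illustration. The sketch also returns the endemic level \(N-(\mu+\gamma)/\beta\), the positive equilibrium of the I-equation, which exists exactly when \(R_0^D=\beta N/(\mu+\gamma)>1\).

```python
def sis_deterministic(beta, mu, gamma, N, I0, dt=0.001, steps=20_000):
    """Forward-Euler integration of dI/dt = beta*(N - I)*I - (mu + gamma)*I.

    The S-equation of (1) is not needed because S(t) = N - I(t).
    Returns the value of I after steps*dt time units.
    """
    I = I0
    for _ in range(steps):
        I += dt * (beta * (N - I) * I - (mu + gamma) * I)
    return I


def endemic_level(beta, mu, gamma, N):
    """Positive equilibrium N - (mu + gamma)/beta, or 0 when R0 = beta*N/(mu + gamma) <= 1."""
    r0 = beta * N / (mu + gamma)
    return N - (mu + gamma) / beta if r0 > 1 else 0.0


# Hypothetical parameters with R0 = 0.5*100/45 > 1.
print(sis_deterministic(0.5, 20, 25, 100, I0=1.0), endemic_level(0.5, 20, 25, 100))
```

With these hypothetical values both printed numbers are close to 10; with \(\beta=0.3\) instead, \(R_0^D<1\) and I(t) decays to zero.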

Moreover, environmental noise, such as white noise and telegraph noise, is often incorporated into deterministic models to help us understand the dynamic behaviour of epidemic models. There are many studies of the behaviour of both deterministic [1, 2] and stochastic [3,4,5,6] SIS epidemic models. Different medical interventions for controlling the disease have also been incorporated into SIS models, for example in [7,8,9]. In particular, Gray et al. [10] perturb the disease transmission coefficient of the SIS model with white noise.

However, to the best of our knowledge, little work has incorporated white noise into the parameter \(\mu +\gamma \) of the SIS epidemic model (1). Here, we suppose that the variance of the estimate of \(\mu +\gamma \) is proportional to the number of susceptible individuals. Consequently, in addition to the perturbation of \(\beta \) considered in [10], we add a second perturbation to \(\mu +\gamma \), the sum of the per capita death rate and the recovery rate (whose reciprocal is the mean infectious period).

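We then obtain

\[
\mathrm{d}I(t)=\big[\beta (N-I(t))I(t)-(\mu +\gamma )I(t)\big]\mathrm{d}t+\sigma _1I(t)(N-I(t))\,\mathrm{d}B_3(t)-\sigma _2I(t)\sqrt{N-I(t)}\,\mathrm{d}B_4(t)\tag{2}
\]

with initial value \(I(0)=I_0 \in (0,N)\), where \(B_3\) and \(B_4\) are two independent Brownian motions.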

Moreover, it is necessary to consider whether there is a relationship between these two perturbations: if the same real-world data are used to construct the two Brownian motions, they are very likely to be correlated [11]. Correlated Brownian motions have been studied in dynamical systems before. Hu et al. [12] consider two correlated stochastic disturbances in the form of Gaussian white noise in a deterministic epidemic model constructed by Roberts and Jowett [13]. Hening and Nguyen [14] construct a stochastic Lotka–Volterra food chain system by introducing n correlated Brownian motions into the deterministic food chain model, where n is the total number of species in the food chain; they use a coefficient matrix to express the vector of correlated Brownian motions in terms of a vector of independent standard Brownian motions. Inspired by Emmerich [11], Hu et al. [12] and Hening and Nguyen [14], we replace \(B_3\) and \(B_4\) by two correlated Brownian motions to introduce correlated noises into the SIS epidemic model. Considering two correlated Brownian motions, one with a linear diffusion coefficient and the other with a Hölder continuous diffusion coefficient, distinguishes this work from other work on stochastic SIS models. Although Hölder continuous diffusion coefficients and correlated white noises often appear in stochastic financial and biological models [15], to the best of our knowledge there is no related work based on the deterministic SIS model. This paper aims to fill this gap.

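We now allow the two noises in model (2) to be correlated by replacing \(B_3\) and \(B_4\) with correlated Brownian motions \(E_1\) and \(E_2\):

\[
\mathrm{d}I(t)=\big[\beta (N-I(t))I(t)-(\mu +\gamma )I(t)\big]\mathrm{d}t+\sigma _1I(t)(N-I(t))\,\mathrm{d}E_1(t)-\sigma _2I(t)\sqrt{N-I(t)}\,\mathrm{d}E_2(t).\tag{3}
\]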

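Note that \(E_1\) and \(E_2\) can be written as

\[
\begin{pmatrix}E_1(t)\\ E_2(t)\end{pmatrix}=A\begin{pmatrix}B_1(t)\\ B_2(t)\end{pmatrix},\tag{4}
\]

where \((B_1,B_2)\) is a vector of independent Brownian motions and A is the coefficient matrix

\[
A=\begin{pmatrix}a_1&0\\ a_2&a_3\end{pmatrix}.
\]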

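So we have

\[
E_1(t)=a_1B_1(t),\qquad E_2(t)=a_2B_1(t)+a_3B_2(t).
\]

Also

\[
\mathrm{d}E_1(t)\,\mathrm{d}E_2(t)=a_1a_2\,\mathrm{d}t,
\]

which gives the correlation of \(E_1\) and \(E_2\),

\[
\rho =a_1a_2.
\]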

Note that when \(\rho =0\), \(E_1\) and \(E_2\) are independent Brownian motions.
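The representation (4) can be checked numerically. The Python snippet below is a minimal illustration, assuming the normalisation \(a_1=1\), \(a_2=\rho\), \(a_3=\sqrt{1-\rho^2}\), so that \(E_1\) and \(E_2\) are standard Brownian motions with correlation \(\rho=a_1a_2\). This choice of A is our assumption for the example, since the text keeps \(a_1,a_2,a_3\) general; the function names and seeds are hypothetical.

```python
import math
import random


def correlated_increments(rho, dt, n_steps, seed=0):
    """Increments (dE1, dE2) with dE1 = a1 dB1 and dE2 = a2 dB1 + a3 dB2.

    Assumed normalisation for this sketch: a1 = 1, a2 = rho, a3 = sqrt(1 - rho**2),
    so that A = [[a1, 0], [a2, a3]] satisfies A A^T = [[1, rho], [rho, 1]].
    """
    a1, a2, a3 = 1.0, rho, math.sqrt(1.0 - rho * rho)
    rng = random.Random(seed)
    sd = math.sqrt(dt)  # standard deviation of a Brownian increment over dt
    dE1, dE2 = [], []
    for _ in range(n_steps):
        dB1, dB2 = rng.gauss(0.0, sd), rng.gauss(0.0, sd)  # independent increments
        dE1.append(a1 * dB1)
        dE2.append(a2 * dB1 + a3 * dB2)
    return dE1, dE2


def sample_correlation(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


dE1, dE2 = correlated_increments(-0.5, 0.001, 100_000, seed=42)
print(sample_correlation(dE1, dE2))  # close to -0.5
```

The lower-triangular form of A means \(E_1\) is driven by \(B_1\) alone, which is exactly why only the \(\mathrm{d}B_1\) coefficient of (5) mixes \(\sigma_1\) and \(\sigma_2\).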

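Substituting (4) into (3), we have

\[
\mathrm{d}I(t)=\big[\beta (N-I(t))I(t)-(\mu +\gamma )I(t)\big]\mathrm{d}t+\big[a_1\sigma _1I(t)(N-I(t))-a_2\sigma _2I(t)\sqrt{N-I(t)}\big]\mathrm{d}B_1(t)-a_3\sigma _2I(t)\sqrt{N-I(t)}\,\mathrm{d}B_2(t)\tag{5}
\]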

with initial value \(I(0)=I_0 \in (0,N)\); this is our new model.

Throughout this paper, unless otherwise specified, we let \((\Omega ,\{{\mathcal {F}}_t\}_{t\geqslant 0},{\mathbb {P}})\) be a complete probability space with a filtration \(\{{\mathcal {F}}_t\}_{t\geqslant 0}\) satisfying the usual conditions. We also define \({\mathcal {F}}_{\infty }=\sigma (\bigcup _{t\geqslant 0}{\mathcal {F}}_t)\), i.e. the \(\sigma \)-algebra generated by \(\bigcup _{t\geqslant 0}{\mathcal {F}}_t\). Let \(B(t)=(B_1(t),B_2(t))^\mathrm{T}\) be a two-dimensional Brownian motion defined on this probability space. We denote by \({\mathbb {R}}_+^2\) the positive cone in \({\mathbb {R}}^2\), that is, \({\mathbb {R}}^2_+=\{x\in {\mathbb {R}}^2:x_1>0,x_2>0 \}\). We also set \(\inf \emptyset =\infty \). If A is a vector or matrix, its transpose is denoted by \(A^\mathrm{T}\). If A is a matrix, its trace norm is \(|A|=\sqrt{trace (A^\mathrm{T}A)}\) while its operator norm is \(||A||=\sup \{|Ax|:|x|=1 \}\). If A is a symmetric matrix, its smallest and largest eigenvalues are denoted by \(\lambda _\mathrm{min}(A)\) and \(\lambda _\mathrm{max}(A)\). In the following sections, we focus on the long-time properties of the solution to model (5).

2 Existence of unique positive solution

We first need to know whether model (5) has a unique solution. This solution must also be positive and remain in (0, N), because the number of infected individuals cannot exceed the size of the whole population. This is guaranteed by Theorem 2.1.

Theorem 2.1

If \(\mu +\gamma \ge \frac{1}{2} (a_2^2+a_3^2)\sigma _2^2 N\), then for any given initial value \(I(0)=I_0 \in (0,N)\), the SDE (5) has a unique global positive solution \(I(t) \in (0,N)\) for all \(t\ge 0\) with probability one, namely,

\[
{\mathbb {P}}\{I(t)\in (0,N)\ \text{for all}\ t\ge 0\}=1.
\]

Proof

Since the coefficients of SDE (5) are locally Lipschitz continuous on (0, N), for any given initial value \(I_0\in (0,N)\) there is a unique maximal local solution I(t) on \(t\in [0,\tau _e)\), where \(\tau _e\) is the explosion time [15]. Let \(k_0>0\) be sufficiently large that \(\frac{1}{k_0}<I_0<N-\frac{1}{k_0}\). For each integer \(k\ge k_0\), define the stopping time

\[
\tau _k=\inf \Big\{t\in [0,\tau _e): I(t)\notin \Big(\frac{1}{k},N-\frac{1}{k}\Big)\Big\}.
\]

As usual, \(\inf \emptyset =\infty \). Clearly, \(\tau _k\) is increasing in k; set \(\tau _\infty =\lim _{k\rightarrow \infty }{\tau _k}\). Obviously, \(\tau _\infty \le \tau _e\) almost surely. So if we can show that \(\tau _\infty =\infty \) a.s., then \(\tau _e=\infty \) a.s. and \(I(t) \in (0,N)\) a.s. for all \(t \ge 0\).

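Assume that \(\tau _\infty =\infty \) a.s. does not hold. Then we can find a pair of constants \(T>0\) and \(\epsilon \in (0,1)\) such that

\[
{\mathbb {P}}\{\tau _\infty \le T\}>\epsilon .
\]

So we can find an integer \(k_1\ge k_0\) large enough such that

\[
{\mathbb {P}}\{\tau _k\le T\}\ge \epsilon \quad \text{for all } k\ge k_1.
\]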

Define a function \(V: (0,N)\rightarrow {\mathbb {R}}_+\) by

and

Let \(f(t)=\beta (N-I(t))I(t)-(\mu +\gamma )I(t)\), \(g(t)=(a_1\sigma _1I(t)(N-I(t))-a_2\sigma _2\sqrt{N-I(t)}I(t), -a_3\sigma _2I(t)\sqrt{N-I(t)})\) and \(\,{\mathrm {d}}B(t)= (\,{\mathrm {d}}B_1(t), \,{\mathrm {d}}B_2(t))\).

By the Itô formula [15], we have, for any \(t\in [0,T]\) and any k,

Here \({\mathbb {E}}\int _0^{t\wedge \tau _k} V_xg(s) \,{\mathrm {d}}B(s)=0\), since the stopped stochastic integral has zero expectation. It is also easy to show that

where C is a constant, provided that \(\mu +\gamma \ge \frac{1}{2}(a_2^2+a_3^2)\sigma _2^2 N\) and \(x\in (0,N)\).

Then, we have

which yields that

Set \(\Omega _k=\{\tau _k \le T\}\) for \(k\ge k_1\); then \({\mathbb {P}}(\Omega _k)\ge \epsilon \). For every \(\omega \in \Omega _k\), \(I(\tau _k, \omega )\) equals either 1/k or \(N-1/k\), and we have

Hence,

Letting \(k \rightarrow \infty \) leads to the contradiction

So the assumption must be false, and we must have \(\tau _\infty =\infty \) almost surely, which completes the proof. Compared with the result of Gray et al. [10], the condition now involves \(a_2^2+a_3^2\). The square-root terms are the reason we need this condition in this section: when \(N-I(t)\rightarrow 0\), \(\sqrt{N-I(t)}\) changes rapidly. This also explains why the condition depends on \(a_2\) and \(a_3\) rather than on \(\rho =a_1a_2\). \(\square \)

3 Extinction

The previous section shows that our model has a unique positive solution that remains in (0, N). However, it does not tell us under what circumstances the disease will die out or persist, which are questions of great importance in the study of epidemic models. In this section, we give conditions for the disease in SDE model (5) to become extinct (Theorem 3.1); persistence is discussed in the next section.

3.1 Theorem and proof

Theorem 3.1

If the stochastic reproduction number of our model, \(R_0^S := \frac{\beta N}{\mu +\gamma }- \frac{a_1^2\sigma _1^2N^2 +(a_2^2+a_3^2) \sigma _2^2N-2 \rho \sigma _1\sigma _2N^\frac{3}{2}}{2(\mu +\gamma )}\), satisfies \(R_0^S<1\), then for any given initial value \(I(0)=I_0\in (0,N)\), the solution of SDE (5) obeys

if one of the following conditions is satisfied

- \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta \) and \(-1<\rho <0\);
- \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta +\frac{3}{2}\rho \sigma _1\sigma _2 \sqrt{N}-a_1^2\sigma _1^2N\) and \(3a_2\sigma _2\ge 4\sqrt{N}a_1\sigma _1\);
- \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2< \beta \wedge (\beta +\frac{3}{2}\rho \sigma _1\sigma _2 \sqrt{N}-a_1^2\sigma _1^2N)\);
- \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta +\frac{9}{16}a_2^2\sigma _2^2\) and \(0<\rho <1\),

namely, I(t) tends to zero exponentially a.s., and the disease dies out with probability one.
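The hypotheses of Theorems 2.1 and 3.1 are easy to evaluate for a given parameter set. The Python helpers below are a minimal sketch; the names and parameter values are hypothetical. The second condition is coded as \(D(\sqrt{N})\ge 0\), that is, with the term \(a_1^2\sigma_1^2N\), which matches the third condition.

```python
import math


def r0_stochastic(beta, mu, gamma, N, sigma1, sigma2, a1, a2, a3):
    """R_0^S from Theorem 3.1, with rho = a1*a2."""
    rho = a1 * a2
    noise = (a1**2 * sigma1**2 * N**2
             + (a2**2 + a3**2) * sigma2**2 * N
             - 2 * rho * sigma1 * sigma2 * N**1.5)
    return beta * N / (mu + gamma) - noise / (2 * (mu + gamma))


def extinction_checks(beta, mu, gamma, N, sigma1, sigma2, a1, a2, a3):
    """Evaluate the hypotheses of Theorem 2.1 and Theorem 3.1 for one parameter set."""
    rho = a1 * a2
    h = 0.5 * (a2**2 + a3**2) * sigma2**2  # so that D(0) = h - beta
    shift = beta + 1.5 * rho * sigma1 * sigma2 * math.sqrt(N) - a1**2 * sigma1**2 * N
    return {
        "well_posed": mu + gamma >= h * N,  # condition of Theorem 2.1
        "r0s_below_one": r0_stochastic(beta, mu, gamma, N, sigma1, sigma2, a1, a2, a3) < 1,
        "cond1": h >= beta and -1 < rho < 0,
        "cond2": h >= shift and 3 * a2 * sigma2 >= 4 * math.sqrt(N) * a1 * sigma1,  # D(sqrt N) >= 0
        "cond3": h < min(beta, shift),
        "cond4": h >= beta + 9 / 16 * a2**2 * sigma2**2 and 0 < rho < 1,
    }


# Hypothetical parameter set (not taken from the paper).
params = dict(beta=0.3, mu=20, gamma=25, N=100, sigma1=0.01, sigma2=0.1,
              a1=1.0, a2=0.5, a3=math.sqrt(0.75))
print(r0_stochastic(**params), extinction_checks(**params))
```

For these hypothetical values the formula gives \(R_0^S=59/90<1\), and of the four extinction conditions only the third holds.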

Proof

Applying the Itô formula to \(\log I(t)\), we obtain

and, by the strong law of large numbers for martingales [15], we have

Therefore, to prove \(\limsup _{t\rightarrow \infty }{ \frac{1}{t}\log {I(t)}}<0\) almost surely, it suffices to find conditions under which \( L{\tilde{V}}(x)\) is strictly negative on (0, N), where \( L{\tilde{V}}\) is defined by
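For reference, here is a sketch of how \(L{\tilde{V}}\) arises, assuming \(\rho=a_1a_2\) and the drift f and diffusion g of (5) defined in Sect. 2. By the Itô formula, \(\mathrm{d}\log I(t)=\big[f/I-|g|^2/(2I^2)\big]\mathrm{d}t+(g/I)\,\mathrm{d}B(t)\), and expanding \(|g|^2\) at \(I(t)=x\) gives the expression below, together with its values at the end points of (0, N):

\[
\begin{aligned}
\frac{|g|^2}{x^2}&=\big(a_1\sigma_1(N-x)-a_2\sigma_2\sqrt{N-x}\big)^2+a_3^2\sigma_2^2(N-x)\\
&=a_1^2\sigma_1^2(N-x)^2-2\rho\sigma_1\sigma_2(N-x)^{3/2}+(a_2^2+a_3^2)\sigma_2^2(N-x),\\
L{\tilde{V}}(x)&=\beta(N-x)-(\mu+\gamma)-\tfrac12\Big[a_1^2\sigma_1^2(N-x)^2-2\rho\sigma_1\sigma_2(N-x)^{3/2}+(a_2^2+a_3^2)\sigma_2^2(N-x)\Big],\\
L{\tilde{V}}(0)&=(\mu+\gamma)\big(R_0^S-1\big),\qquad L{\tilde{V}}(N)=-(\mu+\gamma).
\end{aligned}
\]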

It is clear that

and

is ensured by \(R_0^S<1\). However, the behaviour of \(L{\tilde{V}}\) inside (0, N) is not obvious: unlike in [10], where it is quadratic and therefore easy to analyse, \(L{\tilde{V}}\) is no longer a quadratic function. We therefore compute the first derivative of \( L{\tilde{V}}\).

This is a quadratic function of \(z=\sqrt{N-x}\). Writing \(D(z)=\frac{\,{\mathrm {d}}L{\tilde{V}}}{\,{\mathrm {d}}x}\), we have

where \(z\in (0, \sqrt{N})\). The axis of symmetry of (14) is \({\hat{z}}=\frac{3a_2\sigma _2}{4a_1\sigma _1}\).
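Differentiating \(L{\tilde{V}}\) term by term gives the quadratic D and the quantities used in Cases 1 to 4. This is a sketch, assuming \(L{\tilde{V}}(x)=\beta(N-x)-(\mu+\gamma)-\frac12[a_1^2\sigma_1^2(N-x)^2-2\rho\sigma_1\sigma_2(N-x)^{3/2}+(a_2^2+a_3^2)\sigma_2^2(N-x)]\) with \(\rho=a_1a_2\) and \(a_1\sigma_1>0\):

\[
\begin{aligned}
\frac{\mathrm{d}L{\tilde{V}}}{\mathrm{d}x}&=a_1^2\sigma_1^2(N-x)-\tfrac32\rho\sigma_1\sigma_2\sqrt{N-x}+\tfrac12(a_2^2+a_3^2)\sigma_2^2-\beta,\\
D(z)&=a_1^2\sigma_1^2z^2-\tfrac32\rho\sigma_1\sigma_2z+\tfrac12(a_2^2+a_3^2)\sigma_2^2-\beta,\qquad z=\sqrt{N-x},\\
{\hat{z}}&=\frac{3\rho\sigma_2}{4a_1^2\sigma_1}=\frac{3a_2\sigma_2}{4a_1\sigma_1},\qquad D(0)=\tfrac12(a_2^2+a_3^2)\sigma_2^2-\beta,\\
D(\sqrt N)&=a_1^2\sigma_1^2N-\tfrac32\rho\sigma_1\sigma_2\sqrt N+\tfrac12(a_2^2+a_3^2)\sigma_2^2-\beta,\\
\Delta&=\tfrac94(a_1a_2\sigma_1\sigma_2)^2-4a_1^2\sigma_1^2\Big[\tfrac12(a_2^2+a_3^2)\sigma_2^2-\beta\Big]\le 0
\iff \tfrac12(a_2^2+a_3^2)\sigma_2^2\ge\beta+\tfrac{9}{16}a_2^2\sigma_2^2 .
\end{aligned}
\]

In particular, \(D(\sqrt N)\ge 0\) is equivalent to \(\frac12(a_2^2+a_3^2)\sigma_2^2\ge\beta+\frac32\rho\sigma_1\sigma_2\sqrt N-a_1^2\sigma_1^2N\), and \({\hat z}\ge\sqrt N\) is equivalent to \(3a_2\sigma_2\ge 4\sqrt N a_1\sigma_1\).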

We now discuss the different cases for (14).

Case 1 If \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta \) and \(-1<\rho <0\) (\({\hat{z}}<0\))

Since \({\hat{z}}<0\), the quadratic function (14) is increasing on \((0,\sqrt{N})\), and since \(D(0)=\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2-\beta \ge 0\), its value is positive there. This means that \( L{\tilde{V}}\) increases as x increases on (0, N). As \( L{\tilde{V}}(N)<0\), we have \( L{\tilde{V}}\le L{\tilde{V}}(N)<0\), which leads to extinction.

Case 2 If \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta \), \(D(\sqrt{N})\ge 0\) and \({\hat{z}}=\frac{3a_2\sigma _2}{4a_1\sigma _1}\ge \sqrt{N}\)

In this case, \({\hat{z}}\ge \sqrt{N}\), so D(z) is decreasing on \((0,\sqrt{N})\) and \(D(z)\ge D(\sqrt{N})\ge 0\) there; this leads to the same conclusion as in Case 1. So we have

with \({\hat{z}}\ge \sqrt{N}\).

Case 3 If \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2< \beta \) and \(D(\sqrt{N})<0\)

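Since D is a convex quadratic function with \(D(0)=\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2-\beta <0\) and \(D(\sqrt{N})<0\), the value of D(z) is strictly negative on \((0,\sqrt{N})\), which indicates that \( L{\tilde{V}}\) decreases as x increases. Since \( L{\tilde{V}}(0)<0\), we have \( L{\tilde{V}}(x)<L{\tilde{V}}(0)<0\) on (0, N), so this case also results in extinction, and we have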

Case 4 If \(\Delta =\frac{9}{4}(a_1a_2 \sigma _1\sigma _2)^2-4a_1^2 \sigma _1^2[\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2-\beta ]\le 0\)

This is equivalent to \(\frac{1}{2}(a_2^2+a_3^2)\sigma _2^2\ge \beta +\frac{9}{16}a_2^2\sigma _2^2\). In this case, D(z) is nonnegative on \((0,\sqrt{N})\), so \(L{\tilde{V}}\) increases as x increases and, as in Case 1, \(L{\tilde{V}}(x)\le L{\tilde{V}}(N)<0\). Hence extinction also holds in this case.

In the deterministic SIS model, the disease dies out if \(R_0^D<1\), where \(R_0^D=\frac{\beta N}{\mu +\gamma }\) is the deterministic reproduction number. From its form, our stochastic reproduction number \(R_0^S\) never exceeds \(R_0^D\), which indicates that the noise and correlation in our model enlarge the set of parameters that lead to extinction. For some parameter values under which the disease does not die out in the deterministic SIS model or in Gray's model [10], extinction becomes possible once the new perturbation and the correlation are taken into account. \(\square \)
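The comparison between \(R_0^S\) and \(R_0^D\) can be checked by completing the square in the numerator of \(R_0^S\), using \(\rho=a_1a_2\):

\[
\begin{aligned}
a_1^2\sigma_1^2N^2+(a_2^2+a_3^2)\sigma_2^2N-2\rho\sigma_1\sigma_2N^{3/2}
&=\big(a_1\sigma_1N-a_2\sigma_2\sqrt N\big)^2+a_3^2\sigma_2^2N\ \ge 0,\\
R_0^S&=R_0^D-\frac{\big(a_1\sigma_1N-a_2\sigma_2\sqrt N\big)^2+a_3^2\sigma_2^2N}{2(\mu+\gamma)}\le R_0^D,
\end{aligned}
\]

with equality only when \(a_3\sigma_2=0\) and \(a_1\sigma_1N=a_2\sigma_2\sqrt N\).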

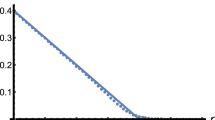

3.2 Simulation

In this section, we use the Euler–Maruyama method [10, 16] implemented in R to simulate the solutions in the four cases. For each case, we choose a complete set of parameters that satisfies not only the extinction conditions but also \(\mu +\gamma \ge \frac{1}{2} (a_2^2+a_3^2)\sigma _2^2 N\), which ensures the uniqueness and boundedness of the solution. Both large and small initial values are used in all four cases for better illustration. We choose the step size \(\Delta =0.001\) and plot the solutions over 500 iterations. Throughout the paper, we assume that the unit of time is one day and that population sizes are measured in units of 1 million, unless otherwise stated (Figs. 1, 2, 3, 4).
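The Euler–Maruyama scheme for (5) can be sketched as follows. This is a minimal Python version, not the authors' R code. It assumes the normalisation \(a_1=1\), \(a_2=\rho\), \(a_3=\sqrt{1-\rho^2}\), and it clips each iterate to [0, N], a safeguard we add so that \(\sqrt{N-I}\) stays real (the scheme described in [10, 16] has no such step). The function name, seed and parameter values are hypothetical.

```python
import math
import random


def euler_maruyama_sis(I0, beta, mu, gamma, N, sigma1, sigma2, rho,
                       dt=0.001, n_steps=500, seed=0):
    """Euler-Maruyama approximation of SDE (5); returns the list I_0, I_1, ..., I_n.

    Assumed normalisation (not fixed by the text): a1 = 1, a2 = rho,
    a3 = sqrt(1 - rho**2), so that rho = a1*a2 and a2**2 + a3**2 = 1.
    """
    a1, a2, a3 = 1.0, rho, math.sqrt(1.0 - rho * rho)
    rng = random.Random(seed)
    sd = math.sqrt(dt)  # standard deviation of a Brownian increment
    I = I0
    path = [I]
    for _ in range(n_steps):
        S = N - I                            # susceptibles
        f = beta * S * I - (mu + gamma) * I  # drift of (5)
        g1 = a1 * sigma1 * I * S - a2 * sigma2 * I * math.sqrt(S)  # coefficient of dB1
        g2 = -a3 * sigma2 * I * math.sqrt(S)                       # coefficient of dB2
        I = I + f * dt + g1 * rng.gauss(0.0, sd) + g2 * rng.gauss(0.0, sd)
        # Safeguard added for this sketch: keep the iterate in [0, N].
        I = min(max(I, 0.0), N)
        path.append(I)
    return path
```

For example, `euler_maruyama_sis(50.0, 0.3, 20, 25, 100, 0.01, 0.1, 0.5, n_steps=5000)` uses hypothetical values for which \(R_0^S=59/90<1\) and the third extinction condition holds, so the path should decay towards zero.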

Case 1

Case 2

Case 3

Case 4

The simulation results support our theorem and illustrate the extinction of the disease. Note that these conditions are sufficient but not necessary for extinction: we only consider situations in which D(z) is either strictly positive or strictly negative. When \(L{\tilde{V}}\) is not monotonic on (0, N), the analysis involves considerably more complicated cases.

4 Persistence

Since there are many definitions of persistence for stochastic dynamical models [3, 4, 10, 15, 17,18,19,20], we first specify the notion used in this paper. Because our model (5) is based on [10], we adopt a definition of persistence similar to that of [10]. Theorem 4.1 gives a condition under which the solution of (5) oscillates around a positive level.

4.1 Theorem and proof

Theorem 4.1

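If \(R_0^S>1\), then for any given initial value \(I(0)=I_0 \in (0,N)\), the solution of (5) obeys

\[
\limsup _{t\rightarrow \infty }I(t)\ge \xi \quad \text{and}\quad \liminf _{t\rightarrow \infty }I(t)\le \xi \quad \text{a.s.},
\]

where \(\xi \) is the unique root of \(L{\tilde{V}}(x)=0\) in (0, N). That is, with probability one, I(t) will be above or below the level \(\xi \) infinitely often.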

Proof

When \(R_0^S>1\), recall that

We have \(L{\tilde{V}}(0)>0\), which is guaranteed by \(R_0^S>1\), and \(L{\tilde{V}}(N)=-(\mu +\gamma )<0\). As \(L{\tilde{V}}(x)\) is continuous on [0, N], there must be a root of \(L{\tilde{V}}(x)=0\) in (0, N). Moreover, since D is quadratic in z, \(L{\tilde{V}}\) switches between increasing and decreasing at most twice on (0, N). Because \(L{\tilde{V}}\) starts from a positive value at 0 and any final increasing stretch ends at the negative value \(L{\tilde{V}}(N)\), the graph of \(L{\tilde{V}}\) crosses zero exactly once. Hence \(L{\tilde{V}}(x)=0\) has a unique root \(\xi \) in (0, N).

Here, we recall (11)

By the strong law of large numbers for martingales [15], there is an \(\Omega _2\subset \Omega \) with \({\mathbb {P}}\{\Omega _2\}=1\) such that for every \(\omega \in \Omega _2\)

Now we assume that \(\limsup _{t\rightarrow \infty }{I(t)}\ge \xi \ a.s.\) is not true. Then, there must be a small \(\epsilon \in (0,1)\) such that

Let \(\Omega _1=\{\limsup _{t\rightarrow \infty }{I(t)}\le \xi -2\epsilon \}\); then for every \(\omega \in \Omega _1\), there exists \(T=T(\omega )\) large enough such that

which means when \(t\ge T(\omega )\), \(L{\tilde{V}}(I(t,\omega ))\ge L{\tilde{V}}(\xi -\epsilon )\). Then, we have for any fixed \(\omega \in \Omega _1\cap \Omega _2\) and \(t\ge T(\omega )\)

which yields

and this contradicts the assumption (16). So we must have \(\limsup _{t\rightarrow \infty }{I(t)}\ge \xi \) almost surely.

Similarly, if we assume that \(\liminf _{t\rightarrow \infty }{I(t)}\le \xi \ a.s.\) is not true, then there must be a small \(\delta \in (0,1)\) such that

Let \(\Omega _3=\{\liminf _{t\rightarrow \infty }{I(t)}\ge \xi +2\delta \}\); then for every \(\omega \in \Omega _3\), there exists \(T'=T'(\omega )\) large enough such that

Now fix any \(\omega \in \Omega _3\cap \Omega _2\). For \(t\ge T'(\omega )\), \(L{\tilde{V}}(I(t,\omega ))\le L{\tilde{V}}(\xi +\delta )\), and so we have

which yields

and this contradicts the assumption (18). So we must have \(\liminf _{t\rightarrow \infty }{I(t)}\le \xi \) almost surely. \(\square \)

4.2 Simulation

In this section, we choose the values of our parameters as follows:

To illustrate the generality of our result, we use two different values of \(\rho \), one positive and the other negative.

and

In both cases, we first use the Newton–Raphson method [21] in R to approximate the roots \(\xi \) of the two functions \(L{\tilde{V}}\), which are 7.092595 and 9.680572, respectively. Then we use the Euler–Maruyama method [10, 16] implemented in R to plot the solutions of our SDE with both large and small initial values, with red lines indicating the level \(\xi \). The step size is 0.001, and 20,000 iterations are used in each example. The resulting figures illustrate and support Theorem 4.1 (Figs. 5, 6).
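The root-finding step can be sketched in Python as follows; the paper uses R, and the names and parameter values here are hypothetical. The derivative is \(D(z)\) evaluated at \(z=\sqrt{N-x}\), and the starting point is the deterministic endemic level \(N-(\mu+\gamma)/\beta\), an assumption that works for this example but is not guaranteed in general.

```python
import math


def make_lv_tilde(beta, mu, gamma, N, sigma1, sigma2, a1, a2, a3):
    """Return (lv, dlv): L~V(x) of model (5) and its derivative, with rho = a1*a2."""
    rho = a1 * a2
    c1 = a1**2 * sigma1**2
    c2 = 0.5 * (a2**2 + a3**2) * sigma2**2

    def lv(x):
        s = N - x
        return (beta * s - (mu + gamma) - 0.5 * c1 * s**2
                + rho * sigma1 * sigma2 * s**1.5 - c2 * s)

    def dlv(x):
        s = N - x  # dL~V/dx equals D(z) evaluated at z = sqrt(N - x)
        return c1 * s - 1.5 * rho * sigma1 * sigma2 * math.sqrt(s) + c2 - beta

    return lv, dlv


def newton_raphson(f, df, x0, tol=1e-12, max_iter=100):
    """Plain Newton-Raphson iteration x <- x - f(x)/df(x)."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton-Raphson did not converge")


# Hypothetical parameters (R_0^S = 1.1); start from the deterministic endemic level 10.
lv, dlv = make_lv_tilde(0.5, 20, 25, 100, 0.01, 0.1, 1.0, 0.5, math.sqrt(0.75))
xi = newton_raphson(lv, dlv, x0=100 - 45 / 0.5)
print(xi)
```

For these hypothetical values the iteration converges to \(\xi\approx 9.13\), below the deterministic endemic level 10; the values 7.092595 and 9.680572 above correspond to the paper's own parameter sets. A plain Newton step can leave (0, N) from a poor starting point, in which case `math.sqrt` raises `ValueError`; since \(L{\tilde{V}}(0)>0>L{\tilde{V}}(N)\), a bracketing method such as bisection on [0, N] is a safer fallback.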

5 Stationary distribution

Finding a stationary distribution of our SDE model (5) is of great importance. The simulations in the last section strongly indicate the existence of a stationary distribution. To prove this, we first state a well-known result of Khasminskii [22] as a lemma.

Lemma 5.1

The SDE model (5) has a unique stationary distribution if there is a strictly proper subinterval (a, b) of (0, N) such that \({\mathbb {E}}(\tau )<\infty \) for all \(I_0\in (0,a]\cup [b,N)\), where

also,

for every interval \([{\bar{a}},{\bar{b}}]\subset (0,N).\)

We now state Theorem 5.1 and prove it using Lemma 5.1.

5.1 Theorem and proof

Theorem 5.1

If \(R_0^S>1\), then our SDE model (5) has a unique stationary distribution.

Proof

First, fix any (a, b) such that

Recalling the discussion of \(L{\tilde{V}}\) in the last section, we see that

also, recall (11)

and define

Case 1 For all \(t\ge 0\) and any \(I_0\in (0,a]\), using (20) in (11), we have

From the definition of \(\tau \), we know that

Hence, we have

when \(t\rightarrow \infty \)

Case 2 For all \(t\ge 0\) and any \(I_0\in [b,N)\), using (21) in (11), we have

From the definition of \(\tau \), we know that

Hence, we have

when \(t\rightarrow \infty \)

Combining the results of Case 1 and Case 2 completes the proof of Theorem 5.1. We next turn to the mean and variance of the stationary distribution. \(\square \)

Theorem 5.2

If \(R_0^S>1\) and m and v denote the mean and variance of the stationary distribution of SDE model (5), then we have

Proof

For any \(I_0\in (0,N)\), we first write (5) in integral form

Dividing both sides by t, letting \(t\rightarrow \infty \), and applying the ergodic property of the stationary distribution [22] together with the strong law of large numbers for martingales, we obtain

where \(m,m_2\) are the mean and second moment of the stationary distribution. So we have

We have tried to obtain further equations from higher-order moments of I(t) in order to solve for m and v, but without success. Although, unlike [10], we do not have explicit formulae for the mean and variance of the stationary distribution, simulations can still be used to check (22). \(\square \)
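A sketch of the limit used in this proof, reconstructed from the drift of (5); the exact form of (22) as printed may differ. Write M(t) for the stochastic integral in the integral form of (5). Since I(t) is bounded, \(I(t)/t\to 0\) and \(M(t)/t\to 0\) a.s., while ergodicity gives \(\frac1t\int_0^tI(s)\,\mathrm{d}s\to m\) and \(\frac1t\int_0^tI^2(s)\,\mathrm{d}s\to m_2\). Hence

\[
0=\beta Nm-\beta m_2-(\mu+\gamma)m,\qquad m_2=v+m^2,
\qquad\text{so}\qquad
\beta v=m\big(\beta N-\mu-\gamma-\beta m\big).
\]

This is a single equation in the two unknowns m and v, which is why a second relation (for example from higher moments) would be needed to solve for both.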

5.2 Simulation

In this section, we use the same parameter values as in the last section

and also two cases with different values of \(\rho \)

and

We simulate a long path of I(t) with 200,000 iterations of step size 0.001 using the Euler–Maruyama method [10, 16] in R, and keep only the last 10,000 iterations for the calculations. These iterations can be regarded as samples from the stationary regime, so the sample mean and variance approximate the mean and variance of the stationary distribution. In the two cases, the left- and right-hand sides of Eq. (22) are 10.84587 and 10.68102, and 1.743753 and 1.848107, respectively, so the mean and variance of the stationary distribution approximately satisfy Eq. (22). Figures 7 and 8 show the histograms and empirical cumulative distribution functions of the last 10,000 iterations in each case.
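The post-processing step can be sketched as follows: keep the last 10,000 iterates of a simulated path, compute their sample mean and variance, and compare both sides of \(\beta v=m(\beta N-\mu-\gamma-\beta m)\), the relation obtained by setting the stationary mean of the drift of (5) to zero. We assume this relation is equivalent to (22); the function name is hypothetical and this is not the authors' R code.

```python
def moment_relation_sides(path, beta, mu, gamma, N, keep_last=10_000):
    """Return (m, v, lhs, rhs) for the last `keep_last` values of a simulated path.

    m and v are the sample mean and (population) variance; lhs = beta*v and
    rhs = m*(beta*N - mu - gamma - beta*m) should be close if the retained
    values are representative of the stationary distribution.
    """
    tail = path[-keep_last:]
    n = len(tail)
    m = sum(tail) / n
    v = sum((x - m) ** 2 for x in tail) / n
    return m, v, beta * v, m * (beta * N - mu - gamma - beta * m)


# Toy check on a two-point sample {4, 12} (mean 8, variance 16), hypothetical values.
print(moment_relation_sides([0.0] * 5 + [4.0, 12.0], 0.5, 20, 25, 100, keep_last=2))
# (8.0, 16.0, 8.0, 8.0)
```

With a real simulated path, the two sides agree only approximately, because of discretisation error and the finite, autocorrelated sample.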

6 Conclusion

In this paper, we replaced the independent Brownian motions in our previous model [23] by correlated Brownian motions. Compared with Greenhalgh's work [4, 10], this not only increases the number of noise terms but also introduces an additional nonlinear term into the drift coefficient of \(\log I(t)\). We then proved that the stochastic reproduction number \(R_0^S\) is the key quantity determining extinction and persistence of the solution. As in the deterministic SIS model, the disease dies out when \(R_0^S<1\) and extra conditions hold. When \(R_0^S>1\), we proved that the solution oscillates around a certain positive level. Compared with [10], our \(L{\tilde{V}}\) is no longer quadratic, which leads to more general and complicated conditions in both the extinction and persistence results. Moreover, compared with [23], this paper assumes that the Brownian motions are correlated, and hence the effects of the correlations on the behaviour of our SIS system are studied. The analytical results, including the form of \(R_0^S\) and the additional restrictions, indicate that the correlations between the Brownian motions make a significant difference. Although we do not have an explicit expression for the positive level, numerical methods can be used to approximate it for given parameter values. Moreover, when \(R_0^S>1\), there is a unique stationary distribution of the solution. We have tried to obtain explicit expressions for its mean and variance by deriving higher moments of I(t), but have not succeeded so far; we leave this for future work.

References

Capasso, V., Serio, G.: A generalization of the Kermack–McKendrick deterministic epidemic model. Math. Biosci. 42, 43–61 (1978). https://doi.org/10.1016/0025-5564(78)90006-8

Brauer, F.: Mathematical epidemiology: past, present, and future. Infect. Disease Model. 2(2), 113–127 (2017)

Gray, A., Greenhalgh, D., Mao, X., Pan, J.: The SIS epidemic model with Markovian switching. J. Math. Anal. Appl. 394(2), 496–516 (2012)

Liang, Y., Greenhalgh, D., Mao, X.: A stochastic differential equation model for the spread of HIV amongst people who inject drugs. Comput. Math. Methods Med. 2016, Article ID 6757928 (2016)

Allen, E.: Modelling with Ito Stochastic Differential Equations. Texas Tech University, Lubbock (2007)

Greenhalgh, D., Liang, Y., Mao, X.: Demographic stochasticity in the SDE SIS epidemic model. Discrete Contin. Dyn. Syst. Ser. B 20(9), 2859–2884 (2015)

Cao, B., Shan, M., Zhang, Q.: A stochastic SIS epidemic model with vaccination. Physica A 486, 127–143 (2017)

Economou, A., Gómez-Corral, A., López-García, M.: A stochastic SIS epidemic model with heterogeneous contacts. Physica A 421, 78–97 (2015)

Krause, A., Kurowski, L., Yawar, K.: Stochastic epidemic metapopulation models on networks: SIS dynamics and control strategies. J. Theor. Biol. 449, 35–52 (2018)

Gray, A., Greenhalgh, D., Hu, L., Mao, X., Pan, J.: A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 71(3), 876–902 (2011)

Emmerich, C.: Modelling correlation as a stochastic process. Department of Mathematics, University of Wuppertal, Wuppertal, D-42119, Germany (2006)

Hu, G., Liu, M., Wang, K.: The asymptotic behaviours of an epidemic model with two correlated stochastic perturbations. Appl. Math. Comput. 218(21), 10520–10532 (2012)

Roberts, M.G., Jowett, J.: An SEI model with density dependent demographics and epidemiology. IMA J. Math. Appl. Med. Biol. 13(4), 245–257 (1996)

Hening, A., Nguyen, D.H.: Stochastic Lotka–Volterra food chains. J. Math. Biol. 77, 135–163 (2017). https://doi.org/10.1007/s00285-017-1192-8

Mao, X.: Stochastic Differential Equations and Applications, 2nd edn. Horwood, Chichester (2008)

Higham, D.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43(3), 525–546 (2001)

Mao, X., Yuan, C.: Stochastic Differential Equations with Markovian Switching. Imperial College Press, London (2006)

Greenhalgh, D., Liang, Y., Mao, X.: SDE SIS epidemic model with demographic stochasticity and varying population size. Appl. Math. Comput. 276, 218–238 (2016)

Cai, Y., Mao, X.: Stochastic prey–predator system with foraging arena scheme. Appl. Math. Model. 64, 357–371 (2018)

Cai, Y., Cai, S., Mao, X.: Stochastic delay foraging arena predator–prey system with Markov switching. Stoch. Int. J. Probab. Stoch. Process. (2019). https://doi.org/10.1080/17442508.2019.1612897

Ben-Israel, A.: A Newton–Raphson method for the solution of systems of equations. J. Math. Anal. Appl. 15(2), 243–252 (1966)

Hasminskii, R.Z.: Stochastic Stability of Differential Equations. Sijthoff & Noordhoff, Amsterdam (1980)

Cai, S., Cai, Y., Mao, X.: A stochastic differential equation SIS epidemic model with two independent Brownian motions. J. Math. Anal. Appl. 474(2), 1536–1550 (2019)

Acknowledgements

The authors would like to thank the reviewers and the editors for their very professional comments and suggestions. The first and second authors would like to thank the University of Strathclyde for the financial support. The second author would also like to thank China Scholarship Council and the MASTS pooling initiative (The Marine Alliance for Science and Technology for Scotland) for their financial support. MASTS is funded by the Scottish Funding Council (HR09011).


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cai, S., Cai, Y. & Mao, X. A stochastic differential equation SIS epidemic model with two correlated Brownian motions. Nonlinear Dyn 97, 2175–2187 (2019). https://doi.org/10.1007/s11071-019-05114-2


DOI: https://doi.org/10.1007/s11071-019-05114-2